Copyright (2000,2001)

Dr. Dean G. "Gordy" Fairchild


"In network economics, more brings more." [Kelly, 1994]

Chapter 2 explained why hypercommunications exist economically by tracing the origins of the information economy and reviewing the new economic thinking that has resulted. Chapter 3 explains why hypercommunications exist technically by tracing the origins of communication networks and reviewing the network economics that resulted. Chapter 2 showed that technical and economic components jointly shaped the economic foundation of hypercommunications. Similarly, Chapter 3 shows that technical and economic components jointly shape the technical foundation of hypercommunications.

The 1996 comments of Richard J. Shultz provide purpose and organization to Chapter 3, the second "why" chapter. Shultz details two stages of what he calls "engineering economics" that preceded the era of a "new economics" of telecommunications:

The underlying premise was the concept of natural monopoly which was both an article of faith and, to continue the metaphor, was assumed to be the product of immaculate conception. This was the era of producer sovereignty, where the idea of a telecommunications market was somewhat of an oxymoron. As far as customers were concerned, they were not customers in any meaningful sense. Rather, they were "subscribers" who were not active participants but passive subjects who were serviced, provided for, by the telephone company which had a monopoly on what became an essential service.

The second stage of "engineering economics" covers approximately the last 25 years. In this period, telephone companies were challenged by political authorities --- elected, departmental, and regulatory. But they did not question or challenge, for the most part, the fundamental precepts of classical engineering economics based on the idea of natural monopoly. Rather, they sought to supplant telco engineers as planners with social and political engineering. Such engineering was more akin to manipulation and logrolling. . . .

The "new economics" of Canadian telecommunications could not be more profoundly different from "engineering economics" in either form. To invoke a current cliché, the "new economics" represents a paradigm shift, not simply adjustments at the margin. In the first place, the concept of natural monopoly has lost virtually all meaning and relevance to contemporary telecommunications. . . .

Secondly the concept of a single, integrated telephone system has been "blown away", replaced by an abundance of market niches, dissolving boundaries, and a concentration on interoperability and interconnectivity.

The third major characteristic of the "new economics" is the downgrading of the status of the corporate telecommunication's engineer, at least in terms of the profession's classical domination of the sector. The marketing specialist has emerged as a driving force, not only in the development of individual services, but far more importantly in shaping and determining the very nature of the telecommunications firm.

All this reflects the most profound change, the collapse of both producer, and its erstwhile rival and successor, political sovereignty. . . . Once subject and serviced, the customer has been empowered and is now full citizen and in the driver's seat. For the first time, the "new economics" of telecommunications is the economics of consumer sovereignty. Telecommunications will, henceforth, be customer-driven and controlled. [Shultz, 1996, pp. 35-37]

Powerful network effects are one source of the dual myths of unlimited communications and an unlimited economic frontier. After introducing the economics of networks and considering the role played by network economics in establishing and denying the dual myths, section 3.1 will briefly consider the ongoing folklore of unlimited communications.

Network effects (often called externalities or synergies) are part of a new vocabulary of information economy terms accompanied by new economic thought. The new thought ranges from the almost unlimited "new economy" [Kelly, 1998] to the new but limited "weightless economy" [Kwah, 1996, 1997; Coyle, 1997; Cameron, 1998] and, finally, to the limited and well-known "network economy" [Shapiro and Varian, 1998]. While there is debate about what to call the information economy, there is general agreement that networks are playing an important role in creating a new economic model, or at least in revising the old one. Networks help speed information flow, promote innovation, and generate markets that behave differently from those in the industrial economy.

The debate about the modern economic vocabulary stems from differences of opinion concerning economic theory and network effects [DeLong, 1998, p.3]. Authors such as Kelly [1998] argue that economists are baffled by a new economic order rooted in the distinctive economic logic of networks. However, microeconomists like Shapiro and Varian claim that a new economics is not needed to understand technological change since economic laws do not change [Shapiro and Varian, 1998]. From a macroeconomic viewpoint, weightlessness or dematerialization occurs naturally as the physical economy grows into a virtual, network economy in which production and consumption shift away from atoms and molecules and toward bits and bytes [Kwah, 1996].

Networks are important to the evolving U.S. economy, whether it is called new, network, or weightless. Nortel Corporation (a major manufacturer of hypercommunication hardware) reports on the enormous economic scope of networks in 1996:

Before beginning a discussion of the analog-digital distinction, which is critical to defining rural hypercommunications infrastructure, the International System of Units (SI) must be introduced. These prefixes and units form the weights and measures of hypercommunications. According to the FCC in 1996, "The SI is constructed from seven base units for independent physical quantities." The SI is a part of the metric system that has been adopted by the United States and most other countries. There are seven base units, a set of prefixes of magnitude, and a number of SI derived units that are useful in hypercommunications.

Table 3-1 shows the prefixes commonly used to express magnitude. This table is important in two ways. First, technological change in computer speeds, for example, is pushing the bottom of the table (the fractional portion) further down, to ever-smaller units. In 1980, computer time units were counted in milliseconds and microseconds, but now nanoseconds and picoseconds are increasingly common. Second, this exponential shrinkage in processing times at the table's bottom is mirrored at the top of the table by exponential growth in storage, bandwidth (capacity), and speed. For example, portable storage devices have gone from floppy disks that held 360 kilobytes (kB) to 1.2 megabyte (MB) floppies, and on up to 8.5 gigabytes (GB) for two-layer single-sided DVDs or 17 GB for four-layer double-sided DVDs. The 8.5 GB DVD alone holds 23,611 times as much as the 360 kB floppy, a spectacular annualized growth rate.
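The 23,611-fold figure can be checked, and turned into an annualized rate, with a few lines of Python. This is a sketch under stated assumptions: the text gives no dates for the two media, so the 20-year span below is a hypothetical placeholder, and decimal prefixes (1 kB = 1,000 bytes) are assumed, as in Table 3-1.

```python
# Decimal SI prefixes from Table 3-1 (1 kB = 10**3 bytes, 1 GB = 10**9 bytes).
FLOPPY_BYTES = 360 * 10**3   # 360 kB floppy disk
DVD_BYTES = 8.5 * 10**9      # 8.5 GB DVD

def growth_ratio(old: float, new: float) -> float:
    """How many times larger `new` is than `old`."""
    return new / old

def annualized_growth(ratio: float, years: float) -> float:
    """Compound annual growth rate that turns 1 into `ratio` over `years` years."""
    return ratio ** (1 / years) - 1

ratio = growth_ratio(FLOPPY_BYTES, DVD_BYTES)
HYPOTHETICAL_YEARS = 20      # assumption: the source does not date the two media
print(f"{ratio:,.0f} times larger")
print(f"{annualized_growth(ratio, HYPOTHETICAL_YEARS):.1%} per year "
      f"over {HYPOTHETICAL_YEARS} years")
```

Changing `HYPOTHETICAL_YEARS` shows how sensitive the annualized figure is to the assumed time span.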

Table 3-1: SI common metric prefixes.

| Multiplication Factor | Scientific Notation | Prefix | Symbol and Capitalization | US Name |
|---|---|---|---|---|
| 1,000,000,000,000,000,000 | 10¹⁸ | exa | E | quintillion |
| 1,000,000,000,000,000 | 10¹⁵ | peta | P | quadrillion |
| 1,000,000,000,000 | 10¹² | tera | T | trillion |
| 1,000,000,000 | 10⁹ | giga | G | billion |
| 1,000,000 | 10⁶ | mega | M | million |
| 1,000 | 10³ | kilo | k | thousand |
| 100 | 10² | hecto | h | hundred |
| 10 | 10¹ | deka | da | ten |
| 0.1 | 10⁻¹ | deci | d | tenth |
| 0.01 | 10⁻² | centi | c | hundredth |
| 0.001 | 10⁻³ | milli | m | thousandth |
| 0.000001 | 10⁻⁶ | micro | µ | millionth |
| 0.000000001 | 10⁻⁹ | nano | n | billionth |
| 0.000000000001 | 10⁻¹² | pico | p | trillionth |
| 0.000000000000001 | 10⁻¹⁵ | femto | f | quadrillionth |
| 0.000000000000000001 | 10⁻¹⁸ | atto | a | quintillionth |

[Sources: GSA, FED-STD-1037C, 1996, pp. I-12, 13; Chicago Manual of Style, 1982, p. 393; The Random House Encyclopedia, p. 1449]

Two of the seven SI base units, seconds (s) and amperes (A), are central to electronic hypercommunications. They are joined by several SI derived units given in Table 3-2.

Table 3-2: Electrical and other SI derived units.

| Item | Unit name | Unit symbol | Expression in other SI units |
|---|---|---|---|
| Frequency | hertz | Hz | s⁻¹ |
| Electric capacitance | farad | F | C/V |
| Electric charge, quantity of electricity | coulomb | C | A·s |
| Electric conductance | siemens | S | A/V |
| Electric inductance | henry | H | Wb/A |
| Electric potential, potential difference, electromotive force | volt | V | W/A |
| Electric resistance | ohm | Ω | V/A |
| Power, radiant flux | watt | W | J/s |

Entries in the table are a few essential electric and electronic SI units, each derived from combinations of other SI units. The most important quantity in Table 3-2 is frequency, measured in hertz.
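Because the hertz is simply an inverse second (s⁻¹), a frequency and the duration of one cycle (its period) are reciprocals, and the prefixes of Table 3-1 apply to both. The helper below is a hypothetical sketch, not a standard library function: it converts a frequency to its period and writes results with the power-of-1,000 prefixes only (hecto, deka, deci, and centi are skipped, as is usual in engineering notation).

```python
# Power-of-1,000 prefixes from Table 3-1, largest first.
SI_PREFIXES = [
    (1e18, "E"), (1e15, "P"), (1e12, "T"), (1e9, "G"), (1e6, "M"), (1e3, "k"),
    (1.0, ""), (1e-3, "m"), (1e-6, "µ"), (1e-9, "n"), (1e-12, "p"),
    (1e-15, "f"), (1e-18, "a"),
]

def format_si(value: float, unit: str, digits: int = 4) -> str:
    """Write `value` with the largest prefix that leaves a mantissa of at least 1."""
    if value == 0:
        return f"0 {unit}"
    for factor, symbol in SI_PREFIXES:
        if abs(value) / factor >= 1:
            return f"{value / factor:.{digits}g} {symbol}{unit}"
    factor, symbol = SI_PREFIXES[-1]  # below 1 atto-unit: fall back to atto
    return f"{value / factor:.{digits}g} {symbol}{unit}"

def period_from_frequency(hertz: float) -> float:
    """One hertz is one cycle per second (s^-1), so the period is 1 / frequency."""
    return 1 / hertz

for f in (60, 1e9, 2.4e9):
    print(format_si(f, "Hz"), "->", format_si(period_from_frequency(f), "s"))
```

A 1 GHz clock, for instance, completes one cycle per nanosecond, which ties the top and bottom halves of Table 3-1 together.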

Other than SI units, the most important units in hypercommunication are the digital units of bit (b) and byte (B). A bit is a single binary digit within a byte; in theory, a byte may contain any number of bits. An older term, baud (Bd), was a measure of transmission over analog telephone lines. The baud rate measures the number of times per second a communication line changes state; the unit is named for Baudot, who developed an encoding scheme for the French telegraph system in 1877 [Sheldon, 1998, p.93; Bezar, 1996, p. 317]. When one bit is encoded per line change, unibit encoding is said to exist and the bit rate equals the baud rate; encoding two bits per line change is dibit encoding, and encoding three bits is tribit encoding. Encoding is the conversion of code as seen by the user into code as transmitted over a conduit such as copper wire.
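The relationship between baud rate and bit rate can be sketched in Python. A line that can take 2, 4, or 8 distinct signal states carries 1, 2, or 3 bits per change, so dibit encoding needs four states and tribit encoding eight. The 2,400-baud figure is hypothetical, chosen only for illustration.

```python
import math

def bits_per_change(signal_states: int) -> int:
    """Bits carried by one line change when the line can take `signal_states` states."""
    bits = math.log2(signal_states)
    if not bits.is_integer():
        raise ValueError("this sketch assumes a power-of-two number of signal states")
    return int(bits)

def bit_rate(baud: int, signal_states: int) -> int:
    """Bits per second = line changes per second (baud) x bits per change."""
    return baud * bits_per_change(signal_states)

# Hypothetical 2,400-baud line under the three encodings described above.
for name, states in [("unibit", 2), ("dibit", 4), ("tribit", 8)]:
    print(f"{name}: {bit_rate(2400, states):,} bit/s")
```

The same baud rate therefore yields double or triple the bit rate as more bits are packed into each line change.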

3.2.1 Digitization and Digital

Bit is short for binary digit, and bits are commonly grouped into a septet (a byte of seven bits) or an octet (a byte of eight bits). The bit is the fundamental unit of digital communications and the source of the term digital. While a more complete explanation is found in 4.2.1 (as a preface to explaining what hypercommunications are), a simple definition of digital is data or information created (source domain) or transmitted (signal domain) in bits.

When the term digitization is used, it refers to converting analog information into bits in either the source domain or the signal domain. An example of converting an analog source (such as a photograph) into a digital source is scanning the photograph's continuously varying features into a digital file. The more precisely the file must match the original hues and tones of the photograph, the more bits are needed. The signal domain is simpler: it merely refers to whether the transmitted signal is analog (such as continuously varying sound waves) or digital (characterized by discrete pulses rather than waves).
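The link between precision and bit count can be made concrete with a short sketch. It assumes an uncompressed scan, and the 4 by 6 inch photograph scanned at 300 dots per inch is a hypothetical example; the only point is that finer tonal detail (more bits per pixel) multiplies the size of the digital source.

```python
def scan_size_bytes(width_in: float, height_in: float, dpi: int, bits_per_pixel: int) -> int:
    """Uncompressed size of a scanned image: pixels x bits per pixel, converted to bytes."""
    pixels = round(width_in * dpi) * round(height_in * dpi)
    return pixels * bits_per_pixel // 8

# Hypothetical 4 x 6 inch photograph scanned at 300 dots per inch.
gray = scan_size_bytes(4, 6, 300, 8)    # 256 shades of gray
color = scan_size_bytes(4, 6, 300, 24)  # about 16.7 million colors
print(f"grayscale: {gray:,} bytes; 24-bit color: {color:,} bytes")
```

Tripling the bits per pixel triples the file, before any compression is applied.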

There are several reasons digitization is important in both the source and signal domains. Often, a failure to understand the difference between analog and digital stems from confusing signal and source.

Digital sources are easily manipulated and can carry a greater volume of information on a smaller surface at a lower per-unit production cost. This is clearly seen when phonograph records are compared to CDs or DVDs. There was an evolution of analog media from primitive magnetic recording disks to Edison's single-sided phonograph technology and then into two-sided analog records. Each new analog medium was capable of storing more information. However, with the advent of digital forms such as CD and DVD, not only could more information be carried, but it was also more easily manipulated. Digitized sources offer exponential growth in storage and transmission capacity, along with many media choices (optical or magnetic storage, for example). A single DVD can carry 4.7 to 8.5 GB of data, or up to fourteen times what a CD can [Sheldon, 1998, p.919]. CDs, in turn, carry many times more information than a double-sided 33 RPM stereo album. Perhaps even more important are the ability of many independent kinds of devices to use digital sources, the simplicity of changing or reproducing bits, and the ability of computers to process digital sources.

However, in the signal domain, digitization makes accuracy and the absence of errors in transmission more important than ever.

Digital signal transmission developed over time, even though digitization of sources was still uncommon. In 1937, Alec Reeves of ITT (International Telephone and Telegraph) developed PCM (Pulse Code Modulation), a theory by which voice signals could be carried digitally. The development of transistors at Bell Labs in 1947 (and, later, of integrated circuits at TI (Texas Instruments) and Fairchild) made PCM practical; commercial testing of PCM began in 1956. In 1962, Bell of Ohio deployed PCM to carry traffic, and by the late 1960s, even some rural telephone co-ops began to use PCM for interoffice trunk (transport level) circuits. One reason digital transmission is important to rural subscribers is that PCM replaced analog circuits in which noise and distortion were amplified along with the signal, so that quality fell with increasing distance. Instead of having to shout through static and crosstalk, subscribers heard substantially better calls carried by PCM over even greater distances, while carrier unit costs fell [REA, 1751H-403, 1.1-1.5].
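The three steps of PCM (sampling, quantizing, and coding) can be sketched as follows. The 8,000 samples per second and 8-bit code words are the standard telephone values, which together give the familiar 64 kbit/s voice channel; they are added here for illustration and are not stated in the text. For simplicity this sketch quantizes uniformly, whereas telephone PCM in practice uses logarithmic companding (µ-law in North America, A-law elsewhere). The 1 kHz tone is a hypothetical input.

```python
import math

SAMPLE_RATE_HZ = 8000  # standard telephone sampling rate (assumed; not stated in the text)
BITS_PER_SAMPLE = 8    # 8-bit code words give 256 quantizing levels

def pcm_encode(signal, duration_s):
    """Sample `signal` (time -> value in [-1, 1]) and code each sample as an integer 0..255."""
    levels = 2 ** BITS_PER_SAMPLE
    codes = []
    for n in range(round(duration_s * SAMPLE_RATE_HZ)):
        t = n / SAMPLE_RATE_HZ                  # sampling at discrete instants
        x = max(-1.0, min(1.0, signal(t)))      # clip to the coder's input range
        codes.append(round((x + 1.0) / 2.0 * (levels - 1)))  # uniform quantizing and coding
    return codes

def sample_tone(t):
    """Hypothetical 1 kHz tone at full amplitude."""
    return math.sin(2 * math.pi * 1000 * t)

codes = pcm_encode(sample_tone, duration_s=0.01)   # 10 ms of signal
bit_rate = SAMPLE_RATE_HZ * BITS_PER_SAMPLE        # bits per second for one voice channel
print(len(codes), "samples; first eight codes:", codes[:8])
print(f"{bit_rate:,} bit/s per voice channel")
```

Because each sample becomes a discrete code word, a regenerating repeater only has to decide which pulses were sent, rather than amplifying accumulated noise along with the signal.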

The advent of computers made digitization within the source domain necessary. However, the benefits of combining the computer with communications were less evident forty years ago. While it was economical to convert parts of the telephone network (within the telephone company's transport level) to digital transmission, there was no need to do so for the local loop (the wire from telephone subscribers to the telephone company). Consequently, because computer technology advanced faster than local loop technology, a vast amount of information from digital sources had to be converted into analog form to travel over the telephone network for data communications to occur. The reliance on digitization of source and signal is an important way the hypercommunications model differs from the interpersonal and mass models of communication. Practical issues in signal and source conversion are taken up again in 4.2.

Within the economics literature, there is considerable debate about what networks represent. For this reason, four conceptions of networks give a broad overview of what networks are before presenting the details of the engineering, communication, and economic perspectives later in Chapter 3. The four conceptions are: networks as fuzzy paradigms, macro networks, micro networks, and the debate in economics concerning network externalities.

The first conception of networks (and the conception in common use) is a fuzzy concept representing almost everything a business or a person does. Used in jargon in this first way, a network may be a noun, verb, or adjective describing a business paradigm, a physical communications network, a human community, or human interaction. Therefore, one problem facing economists who study networks is how to define them because the fuzzy conception is so broad that it has no special economic significance. For example, according to the Nortel Corporation in 1996:

Indeed, in another era, Jenny defined a system as "any complex or organic unit of functionally related units" [Jenny, 1960, p. 165], so that yesterday's system is today's macro network. A system (like a network) can be anything from a person to a political party to the entire world economy or the solar system, depending on the point of view of the analyst. Sub-systems form successive levels in a layer-cake of systems, just as sub-networks make up levels of a network of networks (or internetwork).

Hypercommunications relies on a particular micro network, or "a ubiquitous and economically efficient set of switched communications flows" [Lawton, 1997, p. 137]. Crawford (1997) notes the importance of source and signal domain in networks when he states that:

In common use, the word architecture might appear to describe the physical form or engineering structure of a network. In the special case of communications networks, architecture has even been used to describe the economics of communication function (message content and nature of service provided) [MacKie-Mason, Shenker, and Varian, 1996]. In network engineering, the term architecture covers the relationship among all network elements (hardware, software, protocols, etc.) while the word topology connotes a physical (or logical) arrangement. Therefore, when architecture is used, it conveys a broader meaning than topology alone to include the network's uses, size, device relationships, physical arrangement, and connections.

The fourth network-related concept concerns when to adopt terms such as "network externality", "network effects", and "network synergies" to model the economics of networks. One reason for confusion in network economics is that the economics literature has used the term externality as a catchall word for all synergies and effects of networks.

Externalities are an important reason network economics has become a specialized field within economics. Externalities describe both external economies and external diseconomies, as Samuelson points out in 1976:

However, network economics is not merely the study of network externalities. Liebowitz and Margolis are critical of what they call the "careless use" of the term externality in the network economics literature. They prefer the broader term network effects because "Network effects should not properly be called network externalities unless the participants in the market fail to internalize these effects." [Liebowitz and Margolis, 1998, p. 1] The term synergy is also in wide use because of its interactive connotation.

Network economics examines how network effects arise in markets and the influence of those effects on supply, demand, and welfare. However, familiarity with the form and function of the hypercommunication network is a necessary foundation to the application of the economics literature to the hypercommunication problem. The discussion will return to a review of network economics in section 3.7 after more is understood about the hypercommunication network.

Before turning to concrete examples, consider again an ongoing theme: the sometimes opposite philosophies of the engineer and the economist. Engineers include relationships among the physical parts of any general network (points and the lines that connect the points) when they consider a network's functional architecture. The actions of human users at either end of a hypercommunication network may receive only trivial consideration in engineering design, since network engineering fundamentals are composed of hardware and software, not people and communication. Furthermore, the engineering of a network depends on a changing state of technical knowledge. Therefore, the architecture of the hypercommunication network is the product of technological change combined with how various micro networks interact with human communicators who behave under economic constraints. The engineering view of a network may end with the terminal or node, but the communications and economics views consider the sender and receiver as network elements too.

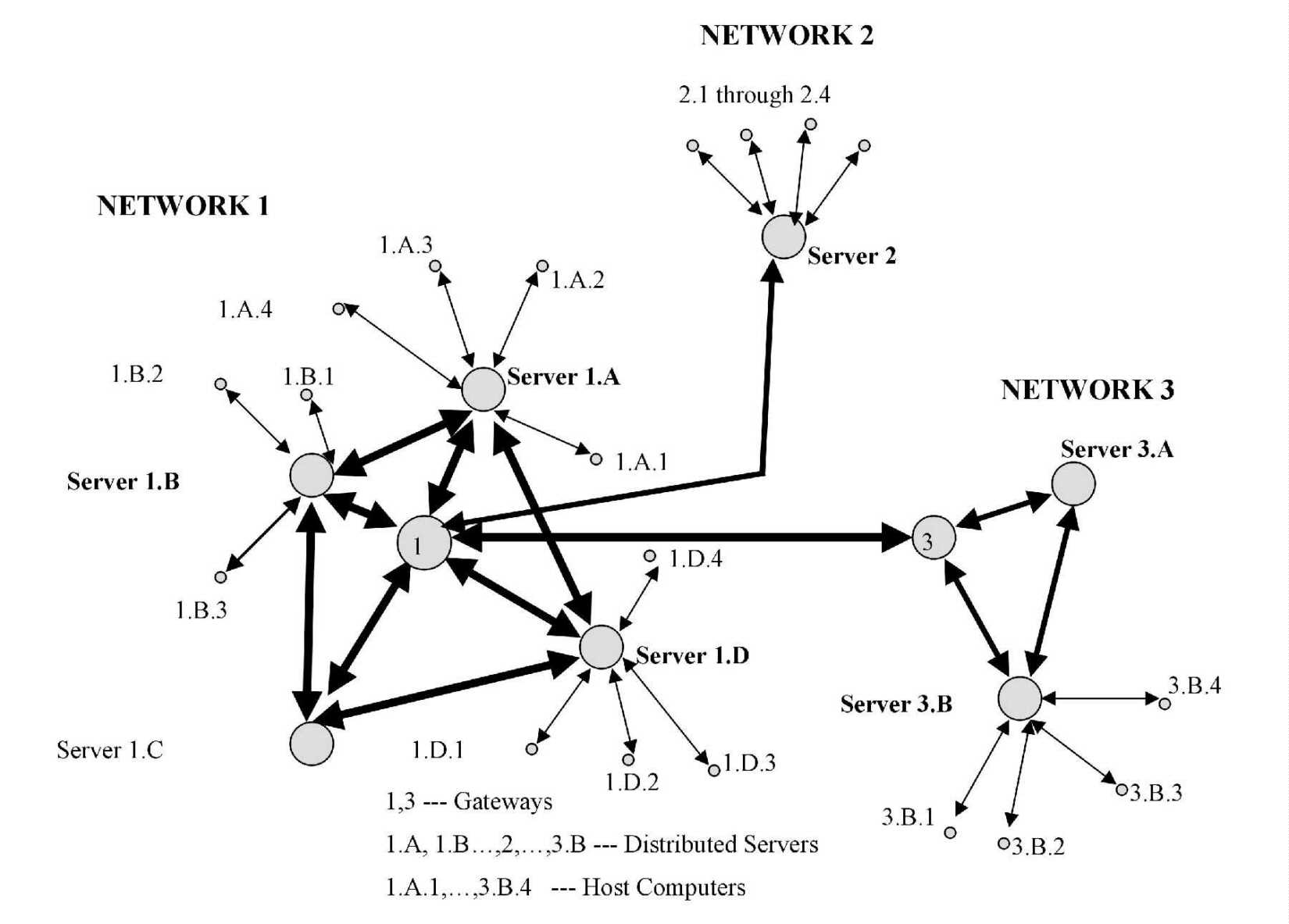

Finally, conceptions of networks are rooted in concrete examples. Hypercommunication network architectures are hybrids of several working scientific conceptions. First, there are the technical characteristics of the PSTN (Public Switched Telephone Network) that came from the engineers of Bell Labs (now Lucent Technologies) and the baby Bell lab (Bellcore, now Telcordia) which are covered in section 3.3. Next, some technical characteristics of computer networks are covered in 3.4 as they were developed by computer scientists and electrical engineers. These characteristics led to the evolution of six economic generations of computer networks in 3.5, as developed by professionals from the data communications field. Finally, the multidisciplinary operations research networking literature (3.6) has combined all three kinds of networks into the technical foundation of hypercommunication networking. These four sections acquaint the reader with some elementary technical properties of the hypercommunications network. Once these properties have been established, the network economics literature can be applied to the resulting micro network of hypercommunications in section 3.7.

Work in telephone engineering pioneered communication network architecture. This discussion covers elementary telephone network fundamentals, focusing on a simplified view of the PSTN as it traditionally worked to provide POTS (Plain Old Telephone Service). Today's urban PSTN (which provides enhanced services far beyond traditional POTS) is more detailed than the model presented here, primarily because telephone networks have adopted computer network designs and operations research algorithms. Importantly, in certain rural areas of the U.S., the POTS PSTN is still the only network. A more detailed discussion of how the PSTN works is found in 4.3.2 (telephone infrastructure), 4.6 (the traditional telephony market), and 4.7 (the enhanced telecommunications market).

Two main aspects of the POTS PSTN will be considered here. First, the basic engineering elements and levels are identified and defined. Second, three groups of technical problems governing the reliability, QOS (Quality of Service), and operational costs and benefits are considered.

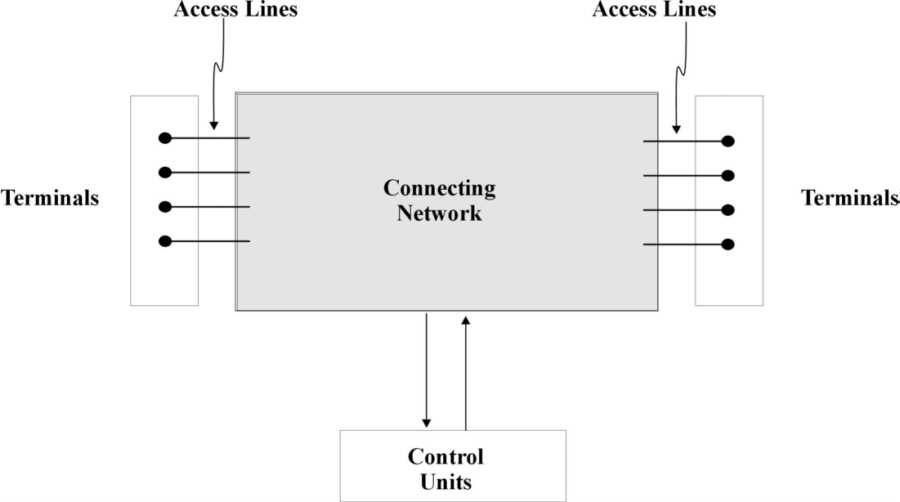

To understand the fundamentals of the telephone network, it is first necessary to understand its place in a more general telephone connecting system. Figure 3-1 [Adapted from Beneš, 1965] shows the gross structure of the connecting system: local and remote terminals (telephones), the connecting network (a hierarchical layer of switches and transport equipment), and a control unit. As depicted here, the connecting network is a single entity, though over time it has become an efficient hierarchy of sub-networks.

Figure 3-1: Gross structure of the telephone connecting system.

The telephone connecting system is "a physical communication system consisting of a set of terminals, control units which process requests for connection, and a connecting network through which the connections are effected" [Beneš, 1965, p. 7]. Technically, the network and the system are two different entities because two terminals (telephone stations) can be connected without a network. The control units provide the intelligence necessary to allow many telephones to be efficiently connected to what would otherwise be a dumb network.

The separation between a terminal and the network is behind the advantage of having a hierarchical network within a connecting system: dramatic efficiency. Suppose there were $n$ terminals that needed to be connected together. Without a network, $n(n-1)/2$ connections would be required between the $n$ terminals to allow a total of $n(n-1)$ possible calls. When the telephone system was first invented, this is exactly what was done. According to Oslin, "Early telephones were leased in pairs; there were no exchanges." [Oslin, 1992, p. 221] This historical fact introduces a fundamental property of hypercommunication networks: direct physical connection between every pair of terminals is neither necessary nor efficient for communication between all users to occur. A telephone network has the property that all $n$ terminals can be connected for a cost dramatically below the cost of stringing $n(n-1)/2$ sets of wires between all pairs of telephones.

The reduction in connections is accomplished in two ways: first through line consolidation and second through routing hierarchies. Line consolidation simply means the "servicing of many phones by fewer telephone lines or circuits" [Bezar, 1995, p. 46]. The number of telephones connected to the network (terminals) exceeds the number of connections from those terminals to the connecting network. For example, a farm may have five telephone extensions on one telephone line (access line) connected to the connecting network. Because of line consolidation, the number of terminals connected to the PSTN exceeds the number of access lines.

Routing hierarchies provide even greater reductions in the total number of connections. In the telephone network hierarchy, a local central office (serving a particular set of telephone exchanges) is connected to a local interexchange network or to a toll network. Rather than string a pair of wires between every pair of Florida's 8,025,917 access lines to yield 32,207,667,832,486 paired connections, the market has established over 1200 telephone exchanges (nodes) through which any single telephone subscriber may reach any other subscriber. Furthermore, additional line consolidation takes place at every level of the network.
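Counting each pair of terminals once gives $n(n-1)/2$ direct connections, and a short Python sketch reproduces the Florida figure above. The `two_level_links` function is a hypothetical comparison, not a model of the actual PSTN: it wires each access line to one exchange and then joins every exchange directly to every other. Even this crude design needs only a tiny fraction of the full-mesh wiring; the real routing hierarchy adds further consolidation at each level. The value 1200 is used as a round figure for the "over 1200" exchanges.

```python
def full_mesh_links(n: int) -> int:
    """Wire pairs needed to join every pair of n terminals directly: n(n-1)/2."""
    return n * (n - 1) // 2

def possible_calls(n: int) -> int:
    """Ordered caller/called pairs among n terminals: n(n-1)."""
    return n * (n - 1)

def two_level_links(terminals: int, exchanges: int) -> int:
    """Hypothetical design: each terminal wired to one exchange, exchanges fully meshed."""
    return terminals + full_mesh_links(exchanges)

FLORIDA_ACCESS_LINES = 8_025_917
FLORIDA_EXCHANGES = 1200  # the text says "over 1200"; used here as a round figure

print(f"full mesh: {full_mesh_links(FLORIDA_ACCESS_LINES):,}")
print(f"two-level: {two_level_links(FLORIDA_ACCESS_LINES, FLORIDA_EXCHANGES):,}")
```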

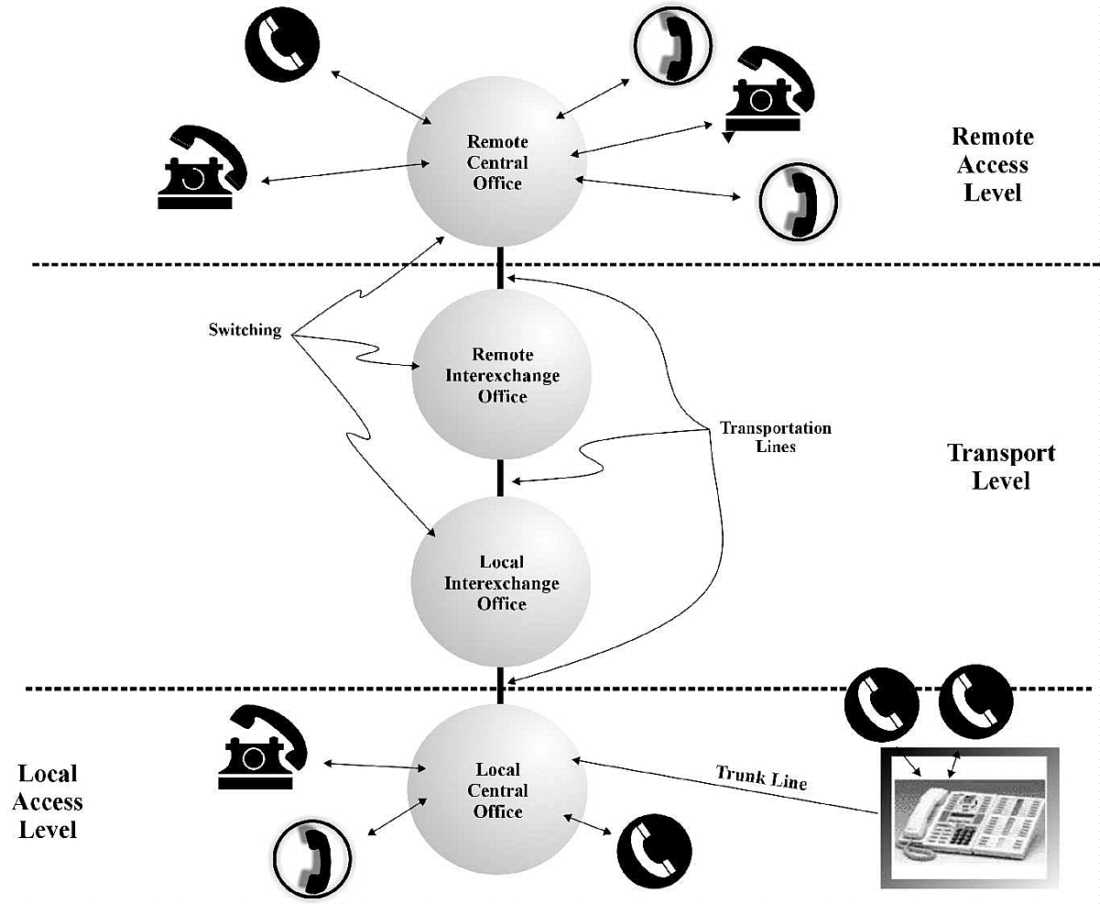

Four chief activities take place within the PSTN hierarchy: access, signaling, transport, and switching, as depicted in Figure 3-2. Assume that a call originates at a telephone at the bottom of Figure 3-2 (perhaps in Key West) and is placed to a remote telephone at the top of the figure (in Pensacola).

Figure 3-2: PSTN routing hierarchy.

The telephone set (or terminal) may connect locally in two ways: lineside or trunkside. Lineside telephones are wired directly via a single access line to the local central office (as on the lower left-hand side). Trunkside telephones are first connected to a PBX (Private Branch Exchange, a private telephone network typically located in a business) and then connected via a trunk line (a multiple access line) to the local exchange. The first level of a call occurs at the local access level, where a dial tone is heard and numbers are dialed. The local central office switches and transports the call to a local interexchange (perhaps in Miami). The call then travels to a remote interexchange (possibly Tallahassee). Finally, the call reaches the Pensacola exchange's central office and then a telephone connected to it. The trip from the Key West exchange to the Pensacola exchange occurs at the transport level because it passes through the multi-level connecting network. The route of the call within the local and remote access levels does not depend on the entire network. Signaling establishes the call at the local access level, helps route it through the transport level, and causes the remote telephone to ring. As telephone technology progressed, more and more calls were converted from analog to digital format over the transport level and then reconverted to analog format at the remote access level. Furthermore, many models of telephones became available from numerous manufacturers, and the system was engineered so that each could connect to it.

Such a network hierarchy has costs and revenues at each level of operation. At the access level, the cost of building and maintaining wires to and from each telephone in the local area served by each central office is incurred by the ILEC (Incumbent Local Exchange Carrier). Typically, a fixed monthly local access charge per access line (regardless of usage level) is assessed to cover access level costs because they tend to be highly fixed. The cost of transport lines and out-of-area switching must be covered by transport level charges. These charges have a fixed component, but vary primarily by distance and time. Finally, there is a cost to receiving calls from out-of-area which is compensated in the form of termination charges on a per call basis.
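The three-part cost recovery just described can be sketched as follows. Every rate here is a hypothetical placeholder rather than an actual tariff, and the structure is simplified: access is a flat per-line monthly charge, transport is a fixed setup amount plus a per-minute amount that rises with a distance band, and termination is a flat amount per call. The sketch only shows which charges vary with usage and which do not.

```python
# Every rate below is a hypothetical placeholder, not an actual tariff.
ACCESS_PER_LINE_MONTHLY = 20.00       # access level: flat, independent of usage
TRANSPORT_SETUP = 0.05                # transport level: fixed component per call
TRANSPORT_PER_MINUTE_PER_BAND = 0.02  # transport level: rises with distance band
TERMINATION_PER_CALL = 0.02           # termination: flat amount per call delivered

def transport_charge(minutes: float, distance_band: int) -> float:
    """Fixed setup plus a per-minute rate proportional to the distance band."""
    return TRANSPORT_SETUP + TRANSPORT_PER_MINUTE_PER_BAND * distance_band * minutes

def monthly_recovery(access_lines: int, calls):
    """Costs recovered at each level; `calls` is a list of (minutes, distance_band) pairs."""
    return {
        "access": ACCESS_PER_LINE_MONTHLY * access_lines,
        "transport": sum(transport_charge(m, band) for m, band in calls),
        "termination": TERMINATION_PER_CALL * len(calls),
    }

quiet = monthly_recovery(1, [])
busy = monthly_recovery(1, [(10, 1), (5, 3), (20, 2)])
print(quiet)
print(busy)
```

The access amount is identical for the quiet and busy months, mirroring the highly fixed nature of access-level costs, while transport and termination grow with calling.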

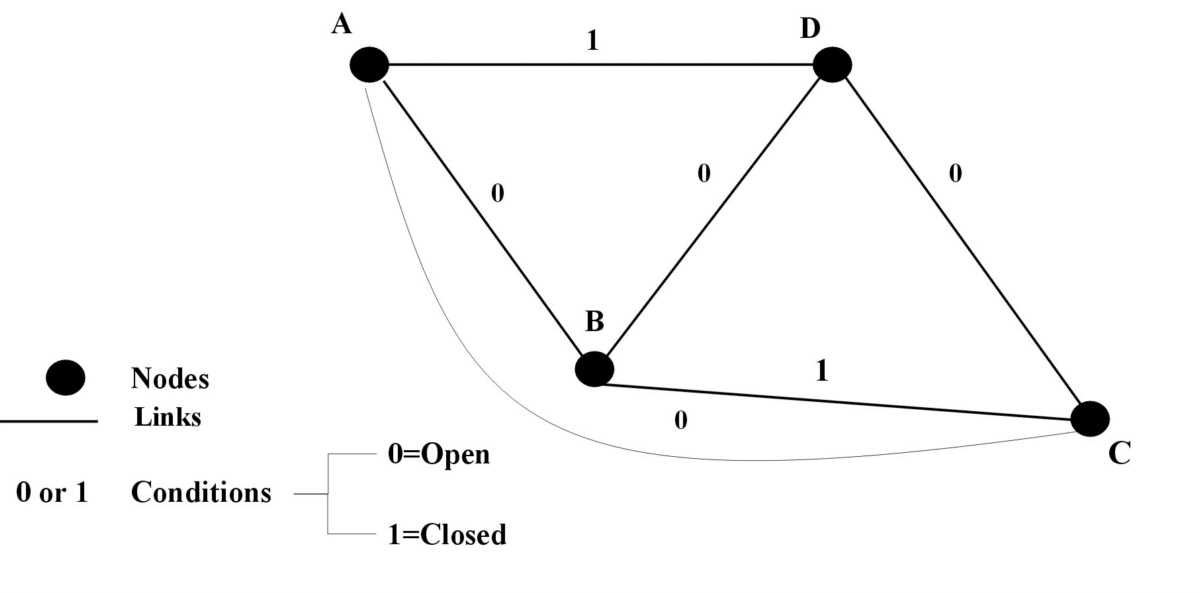

The connecting network is an "arrangement of switches and transmission links allowing a certain set of terminals to be connected together in various combinations, usually by disjoint chains" (paths) [Beneš, 1965, p. 53]. Two distinct activities are performed: nodes switch and links transport. Figure 3-3 depicts a stylized telephone connecting network. Stripped of the individual access lines, trunks, and telephones of Figure 3-2, Figure 3-3 serves as a simplified representation of the PSTN POTS network. Figure 3-3 shows only transport-level elements once the call has been received by a central office [Beneš, 1965].

Figure 3-3: Simultaneous representation of structure and condition in a telephone network at any level of the switching-transport level hierarchy.

Points A, B, C, and D are CO nodes (switches), each representing a point where a large set of terminals (telephones) accesses the PSTN together. AB, AC, AD, BD, and CD are generally called branches of the network. AB, BD, and DC (or AD and DC) are instead called links if, for some reason, a call cannot travel directly through branch AC. The numbers above each line refer to the condition (or traffic load) of the network: in Figure 3-3, zero represents an open crosspoint and one represents a closed crosspoint. Thus, at any instant, a telephone network's structure and traffic load combine to allow a fixed number of available paths (branches and/or links) for a call to travel. The number of paths available depends on total network capacity, paths occupied by ongoing calls (combinatorial complexity), and the probability that ongoing calls will hang up (or new calls will occur) at any moment (randomness). For example, assuming no new calls other than those shown in Figure 3-3, a call from A to C could be completed directly using branch AC, or by using the path ABDC (combination of links AB, BD, and DC).
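The path-counting idea can be sketched as a small depth-first search over the branches named for Figure 3-3 (AB, AC, AD, BD, and CD). Which branches are busy is a hypothetical assumption here, since the figure's crosspoint values are not reproduced: with branch AD taken as occupied, the search finds exactly the two routes named in the example, AC and ABDC; with every branch free, it also finds ADC.

```python
BRANCHES = {("A", "B"), ("A", "C"), ("A", "D"), ("B", "D"), ("C", "D")}

def free_paths(branches, busy, origin, destination):
    """Enumerate simple paths (no repeated node) over branches that are not busy."""
    free = {frozenset(b) for b in branches} - {frozenset(b) for b in busy}
    neighbours = {}
    for edge in free:
        u, v = tuple(edge)
        neighbours.setdefault(u, set()).add(v)
        neighbours.setdefault(v, set()).add(u)
    paths = []

    def extend(path):
        node = path[-1]
        if node == destination:
            paths.append("".join(path))
            return
        for nxt in sorted(neighbours.get(node, ())):
            if nxt not in path:  # never revisit a switch on the same call
                extend(path + [nxt])

    extend([origin])
    return paths

print(free_paths(BRANCHES, {("A", "D")}, "A", "C"))  # hypothetical: AD occupied
print(free_paths(BRANCHES, set(), "A", "C"))         # every branch free
```

In a real network the search space grows combinatorially with nodes and branches, which is why controlling units must track open links and free nodes continuously.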

In a more general telephone network, some specialized nodes may be for transport only (used only to connect other nodes together and not connected to terminals), or may be points where transport connections arise or are terminated (inlets or outlets). Network engineering becomes immediately more difficult as the number of inlets, outlets, specialized nodes, links, and branches expands. As networks grow in size, controlling units must be able to calculate all open links and branches, all free nodes, and all unbusy inlets and outlets.

The purpose of the controlling unit introduces a second point about telephone network engineering. The telephone system has three important properties: combinatorial complexity, definite geometrical (or other) structure, and randomness of many of the events that happen in the system. These properties are particularly important because a telephone call (unlike a telegraph message) requires a continuous open circuit from origin to destination for communication to occur. Therefore, underlying the network's design are mathematical algorithms regarding three kinds of representative problems: combinatorial, probabilistic, and variational. Each problem results in specific costs to each level of the network.

The combinatorial problem concerns the "packing problem", or the set of possible paths a call may take given the set of available paths, nodes, inlets, and outlets. A finite number of possible paths exist to take a call through the transport level from the Key West CO to the Pensacola CO. Not all of these are necessarily the "best" paths for telephone network efficiency. Additionally, each area's access level has less transport capacity than the number of access lines. For instance, Key West subscribers are limited by the number of total outgoing transport lines, and Pensacola subscribers are limited by the number of incoming transport lines. Line consolidation and routing hierarchies occur throughout the connecting system, inside and outside the connecting network.

An important network design feature that establishes constraints for the packing problem concerns how line consolidation occurs in the network. One kind of switching network uses space division switching (where each conversation has a separate wire path) while another kind of switching network uses time division switching (where each conversation has a separate time slot on a shared wire path). Similarly, the transport networks are of two kinds. Space division switching's counterpart in transmission is FDM (Frequency Division Multiplexing) where each conversation occupies a different frequency slot. Time division switching's transmission counterpart is TDM (Time Division Multiplexing) [Hill Associates, 1998, p. 5.6]. By converting voice conversations into digital form, more conversations can be packed into a given switch using time division switching while similarly more conversations can be transported digitally using TDM.
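Synchronous TDM can be illustrated with a few lines of Python. In this sketch, function names are hypothetical and strings stand in for digitized voice samples. Each frame on the shared line carries one slot per conversation in a fixed order. The receiver recovers each conversation by reading every n-th slot.

```python
from itertools import zip_longest


def tdm_multiplex(channels, idle=None):
    """Interleave samples from each channel into fixed time slots on one shared line.

    Frame k carries sample k of channel 0, then channel 1, and so on. A channel
    with nothing to send still occupies its slot (filled with `idle`), as in
    synchronous TDM.
    """
    line = []
    for frame in zip_longest(*channels, fillvalue=idle):
        line.extend(frame)
    return line


def tdm_demultiplex(line, n_channels):
    """Recover each channel by reading every n-th slot from the shared line."""
    return [line[i::n_channels] for i in range(n_channels)]


shared = tdm_multiplex([["a1", "a2"], ["b1", "b2"], ["c1"]])
```

The idle slot left by the third conversation shows the cost of fixed slot assignment. Capacity is reserved for a conversation whether or not it is sending.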

The core probabilistic problem concerns traffic circulation (probabilities that requests for service, dialing, call completion, or hang-up will occur from terminals under instantaneously changing conditions). The most probable behavior of all users before, during, and immediately after a call has to be calculated for all possible links between nodes. Estimates of circuit availability within transport and access levels are obtained by using probability distributions of typical statistical traffic patterns given average and peak calling. Those estimates, in turn, are used as input for variational problems. Conversions from analog to digital and back again also use probability distributions and sampling to calibrate equipment for the optimal balance of quality and efficiency.
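The text does not name a specific model. One standard way telephone engineers turn average and peak calling into circuit estimates is the Erlang B formula. It assumes randomly (Poisson) arriving calls and blocked calls that are lost rather than queued. This sketch uses the usual recursive form. The busy-hour figures and function names are hypothetical.

```python
def erlang_b(offered_erlangs, trunks):
    """Blocking probability for `trunks` circuits offered `offered_erlangs` of traffic.

    Standard recursion: B(E, 0) = 1, B(E, m) = E*B(E, m-1) / (m + E*B(E, m-1)).
    """
    b = 1.0
    for m in range(1, trunks + 1):
        b = offered_erlangs * b / (m + offered_erlangs * b)
    return b


def trunks_needed(offered_erlangs, target_blocking):
    """Smallest number of trunks whose blocking probability meets the target."""
    if not 0 < target_blocking < 1:
        raise ValueError("target_blocking must be between 0 and 1")
    m = 0
    while erlang_b(offered_erlangs, m) > target_blocking:
        m += 1
    return m


# Offered traffic (erlangs) = busy-hour call attempts * average holding time in hours.
busy_hour_calls = 600        # hypothetical busy-hour call attempts
avg_holding_minutes = 3.0    # hypothetical average call length
offered = busy_hour_calls * avg_holding_minutes / 60   # 30 erlangs

needed = trunks_needed(offered, 0.01)   # trunks for at most 1% blocked calls
```

The resulting trunk count is the kind of probabilistic estimate that then feeds the routing (variational) problem.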

The core variational problem concerns optimal routing through the network, given the solution sets to the combinatorial and probabilistic problems. For example, a Key West to Pensacola call could possibly be routed through a variety of intermediate hops to reach International Falls, Minnesota, and then go to Tallahassee (instead of Orlando and then Tallahassee). However, because of the distance sensitivity and fixed cost of hopping the call from one interexchange node to another, that route would not be the cheapest. Variational problem parameters could exclude some of these possible routes while leaving others available (perhaps only at peak times) to minimize transport costs while simultaneously maximizing call capacity.
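A least-cost routing choice of this kind can be sketched with Dijkstra's shortest-path algorithm. This is a standard technique the text itself does not name. Every link cost below is hypothetical: a fixed per-hop switching charge plus a distance-sensitive component. Excluding a link stands in for the route restrictions described above.

```python
import heapq

HOP_COST = 1.0  # hypothetical fixed cost of switching a call at each node
# Hypothetical distance-sensitive link costs (arbitrary units).
DISTANCE = {
    ("Key West", "Miami"): 1.5,
    ("Miami", "Orlando"): 2.3,
    ("Orlando", "Tallahassee"): 2.4,
    ("Tallahassee", "Pensacola"): 2.0,
    ("Miami", "Atlanta"): 6.6,
    ("Atlanta", "International Falls"): 11.0,
    ("International Falls", "Tallahassee"): 13.0,
}


def build_graph(excluded=frozenset()):
    """Undirected adjacency map; each link costs HOP_COST plus its distance cost."""
    adj = {}
    for (a, b), dist in DISTANCE.items():
        if (a, b) in excluded or (b, a) in excluded:
            continue  # route parameters rule this link out
        cost = HOP_COST + dist
        adj.setdefault(a, []).append((b, cost))
        adj.setdefault(b, []).append((a, cost))
    return adj


def cheapest_route(src, dst, excluded=frozenset()):
    """Dijkstra's algorithm: return (total_cost, route), or (inf, []) if unreachable."""
    adj = build_graph(excluded)
    frontier = [(0.0, src, [src])]
    settled = set()
    while frontier:
        cost, node, route = heapq.heappop(frontier)
        if node == dst:
            return cost, route
        if node in settled:
            continue
        settled.add(node)
        for nxt, link_cost in adj.get(node, []):
            if nxt not in settled:
                heapq.heappush(frontier, (cost + link_cost, nxt, route + [nxt]))
    return float("inf"), []


cost, route = cheapest_route("Key West", "Pensacola")
```

With all links available, the cheapest route runs through Orlando and Tallahassee. If the Orlando-Tallahassee link is excluded, only the costly detour through International Falls remains.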

Beneš contrasted the connecting network and the controlling unit of the system:

The network fundamentals presented so far introduce the third and final point about the traditional telephone network: economic issues. As the PSTN evolved, the economics of the network began to depend more on the historic structure and regulation of the telephone business than on the engineering of the network. Vogelsang and Woroch call the current setting "a complex dance of technology, regulation, and competition" [Vogelsang and Woroch, 1998, p.1]. Historically, it has been argued that the telephone network architecture created inescapable economic constraints that made the telephone system a natural monopoly.

Two interrelated issues are central to analyzing whether fundamental telephone network technology caused a particular market structure or vice versa. One issue is whether a system orientation or a network orientation is optimal. Another issue concerns whether telephone architecture inherently creates powerful economies of system, scope, and scale that define the traditional POTS PSTN as a natural monopoly. An important underlying concern is the impact of constantly changing technology on the equation. The question of whether the telephone network is (or ever was) a natural monopoly is revisited in section 5.4.

A few details of PSTN technical efficiency summarize this section. First, telephone networks are end-to-end open circuits. Once established, a wireline telephone call takes a dedicated circuit-switched path from caller to recipient. The call is subject to congestion only upon set up. Second, the engineering philosophy behind the traditional telephone network evolved from emphasizing a unified single system into a hierarchy of networks. Access lines that link both the calling and answering party to the network are known as the "local loop" because they connect individual users to the edge of the network at CO nodes. Third, calls are switched through nodes and transported over links that take advantage of line consolidation, digitization, routing hierarchies, and other engineering efforts to maximize call volume while minimizing costs.

A second influence on hypercommunication network design comes from work in computer network engineering and design. Computer engineers design networks based on the uses, components, scales, communications distance, network architectures (logical and physical relationships among elements), topologies (logical and physical arrangements), speed, and reliability of data networks. Not surprisingly, the broad and fast-changing field of computer networking makes a technical summary of the economic underpinnings of networking technologies an impossible task.

Nonetheless, this section will attempt that task. The specialized economics of hypercommunication networks differ in important ways from the broader field of network economics. To see this point (and to understand that some economists have misapplied macro network models to the hypercommunication case) some technical fundamentals of computer networking are needed. In this way, some idea of the economic benefits and costs (especially positive and negative externalities) of computer networks can be seen. In spite of anecdotal evidence to the contrary, computer networks are a production technology with limits and constraints. Due to the fast-changing and complicated nature of data communication, it is harder to grasp the economic ramifications of computer network technology.

Several technical characteristics of computer networks will be outlined in section 3.4 before six economic generations of computer networks are introduced in section 3.5. Along with a comparison to the POTS network, four components of computer networks are outlined in 3.4.1. Common uses for data communication and the different technical services performed by computer networks are the topics of 3.4.2. Four technical objectives facing computer network engineers are covered in 3.4.3; each has important economic ramifications stemming from underlying combinatorial, probabilistic, and variational constraints. Finally, in 3.4.4, the hierarchical seven-layer OSI protocol stack (representing the sub-tasks of network communication) is sketched to unify the introductory points under one model.

3.4.1 Four Components Distinguish Computer Networks from the PSTN

Due to its complexity, the computer terminal has become a part of the network itself, in contrast to the traditional telephone. Today, individual PCs have more processing power, speed, storage, and memory than the largest computers of thirty years ago. In contrast, the telephone has not kept up with the computer's evolving complexity; it remains a simple device. However, the telephone transport network is becoming increasingly similar to an advanced computer network because it, too, is composed of computers. Another fundamental difference between computer and telephone networks is that while all telephones function in roughly the same way, not all computers do. The many brands and models of computers are differentiated by changing technologies such as memory, processing speed, and operating system. Moreover, each new research breakthrough in computer technology makes data communication more complicated because new protocols are needed to enable different kinds of computers to communicate. Besides dramatic technological changes in the terminals at the ends of a computer network, the other important difference from POTS lies in switching. The telephone transport network has traditionally relied on physical circuit switching, while computer networks rely on packet switching and virtual circuit switching.

Four components (hardware, software, protocols, and conduit) distinguish increasingly technologically advanced generations of computer networks from their telephone counterparts. Each distinguishing component has itself evolved into a complex structure as computers became increasingly sophisticated compared to the telephone. Hardware includes computers and peripheral equipment that collect, analyze, print, display, store, forward, and communicate data. While most hardware devices are outside the scope of this work, some hardware such as modems (4.2.2), enhanced telecommunications CPE (4.7.1), and private data network CPE (4.8.1) are covered later.

Software represents the operating systems, programs, and applications that ensure hardware will function alone and in concert with the network. A particular set of hardware and software configured to operate together is known as a platform. Protocols may be thought of as standards or rules governing hardware-software interaction. More precisely, protocols are a formal set of conventions that govern the format and control of data communication among differing platforms and hardware devices [GSA Federal Standard 1037C, 1996, p. P-25]. Software is typically proprietary and licensed for use by the company that developed it. Protocols are typically non-proprietary; they constrain software development within standards governing the many tasks of data communication and computer operation. Conduit represents the transmission media that tie hardware to the network. Conduit includes guided media, also known as wireline conduit (such as wire and cable), and unguided media, also known as wireless conduit (such as radio and microwave). The choices of conduit available to rural areas are often limited by transmission distance, weather, and electromagnetic factors.

3.4.2 Computer Network Uses and Service Primitives

Computer networks may be classified according to their uses and service primitives. Common uses include electronic messaging (e-mail); sharing resources (CPU, printers, local and long-distance conduit, databases, files, applications); and the transfer, reduction, and analysis of information (file transfer, automated reporting and controls). Businesses use networks for collaborative BackOffice functions (manufacturing, transportation, inventory, accounting, payroll, and administration) and for customized FrontOffice uses (sales, ordering, marketing, and direct interaction with customers and stakeholders). In production agriculture, networks of remote sensing equipment may be used to monitor field conditions and even to operate irrigation equipment. Agribusinesses use computers to monitor prices, weather, livestock herd statistics, records about individual trees, and a host of other variables.

The development of the Internet has fostered uses such as real-time interaction (broadcast text, chat, voice, video) and multimedia to support online entertainment, education, and shopping. From an economic standpoint, a particular computer network may be application-aware or application-blind, depending on whether the network is designed around its use or the use is designed around the network [MacKie-Mason, Shenker, and Varian, 1996, p.2]. IBM coined the term e-business to describe a new business model that depends heavily on the latest generations of computer networks. See 4.9.9 for further discussion of e-agribusiness and e-commerce.

From a technical or engineering standpoint, application-aware uses are built on application-blind service primitives. The types of service (service primitives) available in a network are another important technical feature. The distinction among services has become especially important to recent network optimization. Primarily, the focus is on the difference between connection-oriented and connectionless services. Protocols supporting different kinds of services (techniques of communication) have evolved in the data link, network, and transport layers; these protocols treat data differently as it flows through the protocol stack and over conduit. These differences are worth summarizing because each can have different economic repercussions, especially with regard to pricing.

Each service type uses a different combination of protocols, stack layers, data units, and network optimization decision rules. Depending on the service type, combinatorial, probabilistic, and variational constraints in each of the four technical network objectives may tighten or relax. Furthermore, larger networks carry a blend of traffic from several service types, complicating network engineering. The service types are shown in tree form in Figure 3-4 [Jain, 1999, p. 1B-13].

Figure 3-4: Types of services or techniques used to move data from one destination to another through a computer network.

A connection-oriented protocol sets up a logical end-to-end path (virtual circuit) to the remote host through the network before streaming data is sent. Some setup time is needed to establish the connection through the entire network. Congestion in the network can prevent the establishment of a path. Data are sent in packets (segments) that do not need to carry overhead (extra address bit information) through a virtual circuit from sender to receiver, allowing more data per packet than with a connectionless service.

Virtual circuits differ from telephone network circuits (voice pipes) because they are logical paths through packet switched networks rather than physical paths through circuit switched networks. Hence, multiple virtual circuits are able to share a single physical path. Virtual circuits can be permanent (PVCs) or switched (SVCs). An SVC is a temporary logical path through the packet switched network, while a PVC is a permanent logical path. ATM and frame relay are connection-oriented services used to connect many WANs, distributed networks, and inter-networks. The Internet's TCP protocol is a connection-oriented protocol as well. These topics are covered in more detail in 4.8 and 4.9.
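How several virtual circuits can share one physical path is easiest to see in a toy switch that forwards by short circuit labels. The class and method names below are hypothetical, and the table layout is a simplification, not a real ATM or frame relay interface. Setup installs a table entry once per connection. Afterwards, each packet carries only a small label, which the switch swaps for the outgoing label.

```python
class VirtualCircuitSwitch:
    """Toy packet switch that forwards by virtual-circuit identifiers (VCIs)."""

    def __init__(self):
        # (input_port, input_vci) -> (output_port, output_vci)
        self.table = {}

    def setup(self, in_port, in_vci, out_port, out_vci):
        """Connection setup: reserve a logical path through this switch."""
        if (in_port, in_vci) in self.table:
            raise ValueError("VCI already in use on that input port")
        self.table[(in_port, in_vci)] = (out_port, out_vci)

    def teardown(self, in_port, in_vci):
        """Release a switched circuit (SVC); a PVC would simply never be torn down."""
        del self.table[(in_port, in_vci)]

    def forward(self, in_port, in_vci, payload):
        """Look up the label, swap it for the outgoing one, and pass the payload on."""
        out_port, out_vci = self.table[(in_port, in_vci)]
        return out_port, out_vci, payload


sw = VirtualCircuitSwitch()
sw.setup(1, 10, 3, 42)   # circuit A leaves on physical port 3
sw.setup(2, 10, 3, 43)   # circuit B shares the same physical port 3
```

Both circuits leave on the same physical port and are kept apart only by their labels. Once an SVC is torn down, its label no longer forwards anything.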

Connectionless (datagram) traffic does not need to set up a path to the remote host in order to transmit bursty data. Instead, the sender creates packets that contain both the data to be sent and the address of the recipient. Hence, in relation to connection-oriented packets, connectionless packets contain "overhead" because the address data crowd out data that would otherwise have been transmitted in the same unit. The connectionless orientation frees network paths so everyone may continuously use them because each packet is considered independent of those before or after. The network sends each packet in a series of hops from one routing point (intermediate node) to another and on to the final destination based on network layer routing protocols.
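The overhead argument can be made concrete with a little arithmetic. All sizes below are hypothetical: a fixed 128-byte packet, a 4-byte header carrying only a short circuit label, and a 24-byte header carrying full addresses. The sketch compares how much of each packet is user data and how many packets a 10,000-byte message needs.

```python
def payload_efficiency(payload_bytes, header_bytes):
    """Fraction of each transmitted unit that is user data rather than overhead."""
    return payload_bytes / (payload_bytes + header_bytes)


def units_needed(message_bytes, payload_bytes):
    """Number of packets needed to carry a message (ceiling division)."""
    return -(-message_bytes // payload_bytes)


UNIT_SIZE = 128        # hypothetical fixed packet size, bytes
VC_HEADER = 4          # hypothetical header with only a short circuit label
DATAGRAM_HEADER = 24   # hypothetical header with full source/destination addresses

vc_packets = units_needed(10_000, UNIT_SIZE - VC_HEADER)
datagram_packets = units_needed(10_000, UNIT_SIZE - DATAGRAM_HEADER)
```

Here the datagram service needs 97 packets where the labeled circuit needs 81. The comparison leaves out the connection-oriented service's setup time, which is its offsetting cost.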

Connection-oriented traffic is suited to real-time applications, while connectionless traffic is not. However, a more intelligent network is required to transmit connection-oriented traffic. Within both connection-oriented and connectionless services, reliability further distinguishes service traffic. Reliable packets are those that will be automatically retransmitted if lost. Typically, reliable connection-oriented traffic uses sequence numbers to prevent out-of-order or duplicated packets, but a byte stream method can also ensure reliability. Reliable connectionless traffic relies on the receiver returning a positive acknowledgement; lacking one, the sender retransmits the missing packet.
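Positive acknowledgement with retransmission can be illustrated with the simple stop-and-wait (alternating-bit) scheme. The text does not name this scheme; it is one minimal example. The simulation below is hypothetical and deterministic. Sets of attempt numbers mark which data packets or acknowledgements the channel loses. A one-bit sequence number lets the receiver discard a duplicate when only the acknowledgement was lost.

```python
def stop_and_wait(packets, lost_data=frozenset(), lost_acks=frozenset(), max_tries=10):
    """Deliver packets reliably over a lossy channel; return (delivered, transmissions)."""
    delivered = []     # what the receiving application actually gets
    expected = 0       # receiver's next expected sequence number (0 or 1)
    transmissions = 0
    seq = 0            # sender's current sequence number (0 or 1)
    for data in packets:
        for _ in range(max_tries):
            attempt = transmissions
            transmissions += 1
            if attempt in lost_data:
                continue               # packet lost: no ACK, timer expires, resend
            if seq == expected:        # new packet: deliver it once
                delivered.append(data)
                expected ^= 1
            # otherwise it is a duplicate: discard it, but acknowledge again
            if attempt in lost_acks:
                continue               # ACK lost: sender times out and resends
            break                      # positive acknowledgement received
        else:
            raise RuntimeError(f"no acknowledgement for {data!r}")
        seq ^= 1
    return delivered, transmissions
```

Losing one data packet and one acknowledgement costs two extra transmissions. The application still receives each packet exactly once, in order.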

3.4.3 Technical Network Objectives

Engineers who design computer communication networks face four dynamic and simultaneous technical objectives. The core engineering problems are to simultaneously and dynamically map each technical objective onto the four network components (hardware, software, protocol, and conduit) at the sending, intermediate, and receiving ends of computer communication. Each technical objective has the familiar set of combinatorial, variational, and probabilistic constraints introduced in the discussion of the telephone network.

The first technical objective is sending computer rate control. The sending computer tries to maximize the data rate (in bits per second) of the data it sends. The sending data rate itself is a function of the conduit's bandwidth (capacity), the conduit's signal-noise ratio, and the encoding process. Therefore, both the sending computer and the network set parameters on the sending computer's data rate using control parameters based on their own combinatorial, probabilistic, and variational constraints and on conduit capacity.
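The dependence of the data rate on bandwidth and signal-noise ratio has a well-known ceiling, Shannon's Law: C = B log2(1 + S/N). This sketch uses hypothetical function names. The example values, roughly 3,000 Hz of bandwidth and a 30 dB signal-noise ratio, are illustrative figures for a voice-grade line, not measurements.

```python
import math


def db_to_ratio(snr_db):
    """Convert a signal-noise ratio in decibels to a plain power ratio."""
    return 10 ** (snr_db / 10)


def shannon_capacity(bandwidth_hz, snr_db):
    """Upper bound on the error-free data rate in bps: C = B * log2(1 + S/N)."""
    return bandwidth_hz * math.log2(1 + db_to_ratio(snr_db))


ceiling = shannon_capacity(3000, 30)   # roughly 29,900 bps
```

No encoding scheme can push the sending rate past this ceiling. Rate control therefore works within it.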

The second technical objective is signal modulation rate maximization. Conduit design and coding schemes (based on known physical laws such as the Nyquist Theorem and Shannon's Law) are used to maximize the rate at which the conduit transmits the data signal. Bits of data must be converted into pulses to travel over conduit. However, conduit can carry only a limited amount of data, sent as a signal at a maximum modulation rate (baud rate). To transmit data as high and low voltage electric pulses that the conduit can carry, the sending computer encodes bits into a signal sent at a particular modulation rate, measured in baud (signal changes per second). More precisely, the sending computer (or other hardware) converts data or text frames into bits and then into signals (electrical pulses sent at a certain rate).
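The distinction between baud and bits per second, and the Nyquist limit for a noiseless channel, fit in two small functions. The function names and example figures are hypothetical. Nyquist's result bounds the rate at 2B log2(M) bps for M distinct signal levels. The actual data rate is the baud rate times the number of bits each signal change carries.

```python
import math


def nyquist_max_rate(bandwidth_hz, signal_levels):
    """Noiseless-channel ceiling in bps: 2 * B * log2(M) for M signal levels."""
    return 2 * bandwidth_hz * math.log2(signal_levels)


def bit_rate(baud, bits_per_symbol):
    """Data rate in bps = modulation rate (baud) * bits carried per signal change."""
    return baud * bits_per_symbol


two_level = nyquist_max_rate(3000, 2)       # simple high/low pulses
sixteen_level = nyquist_max_rate(3000, 16)  # 4 bits per signal change
modem_rate = bit_rate(2400, 4)              # e.g., 2,400 baud at 4 bits per symbol
```

Packing more bits into each signal change raises the data rate without raising the baud rate. This is why coding schemes matter when the conduit's modulation rate is fixed.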

The capacity of a particular type of conduit, its signal-noise ratio, and the distance it can carry a signal are within the domain of electrical engineering. Constraints on speed such as attenuation, capacitance, delay distortion, and noise depend on the length, shielding, and type of conduit. The physical electromagnetic limitations of the conduit have changed with new wiring (and wireless) technologies so that they are less restrictive than they once were. However, even today, this second technical objective is often the most binding constraint of the network communications problem, especially in rural areas.
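The link between distance and attenuation can be sketched with a simple loss budget. In a uniform medium, loss in decibels grows roughly linearly with length. Every figure below is hypothetical, and so are the function names: transmit level, loss per kilometer, and receiver sensitivity.

```python
def received_power_dbm(transmit_dbm, loss_db_per_km, length_km):
    """Signal level at the far end when loss (in dB) grows linearly with distance."""
    return transmit_dbm - loss_db_per_km * length_km


def max_reach_km(transmit_dbm, loss_db_per_km, sensitivity_dbm):
    """Longest run before the signal falls below the receiver's sensitivity."""
    return (transmit_dbm - sensitivity_dbm) / loss_db_per_km


reach = max_reach_km(10, 2, -30)   # hypothetical: 10 dBm out, 2 dB/km, -30 dBm needed
```

Beyond this reach, a repeater or better conduit is needed. This helps explain why long rural loops face tighter constraints.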

The third technical objective (actually a group of objectives) is network optimization. Except in the direct point-to-point case (where a sender is linked to a receiver over one uninterrupted link), computer communication requires intermediate computers, hardware, and conduit paths and links between the two computers that are shared with other users. Network optimization is the simultaneous balance of two primary performance objectives: minimization of delay and maximization of throughput rate. This balance is sought from both an overall network perspective and an individual connection (or message) perspective. Every network path (data pipeline) between two computers (along with intermediate hardware devices) is subject to delay and throughput constraints. On an intuitive level, Comer and Droms (1999) suggest that delay depends on the data pipeline's length, while throughput depends on the pipeline's width. Both depend on the number of intermediate nodes or hops.
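The pipeline analogy can be put into numbers. This sketch assumes a hypothetical three-hop path, with each hop given as (one-way delay in ms, capacity in bps). Length adds up hop by hop. Width is set by the narrowest hop. Length times width gives the volume of data that can be in the pipe at once.

```python
# Hypothetical three-hop path: (one-way delay in ms, capacity in bps) per hop.
HOPS = [(2, 10_000_000), (20, 1_544_000), (5, 100_000_000)]


def pipeline(hops):
    """Return (length_ms, width_bps, bits_in_transit) for a path of hops."""
    length_ms = sum(delay for delay, _ in hops)           # delays accumulate per hop
    width_bps = min(capacity for _, capacity in hops)     # narrowest hop caps the rate
    return length_ms, width_bps, length_ms * width_bps / 1000
```

Adding a hop lengthens the pipe. Adding a slow hop can also narrow it, so both delay and throughput depend on the number and kind of intermediate nodes.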

Delay, the time it takes a bit to cross the network, is measured in seconds, milliseconds (ms), or microseconds (μs). Not counting operator delay (delay due to human behavior), delay may be of three types: propagation, switching, and queuing. Propagation and switching delays are fixed (they do not depend on the level of use), while queuing delay is related to throughput. When the network is idle, queuing delay is zero. However, queuing delay rises as the network load (ratio of throughput to capacity) rises.
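A simple approximation often used in introductory networking texts captures this behavior: D = D0 / (1 − U), where D0 is the delay on an idle network and U is the load (utilization) between 0 and 1. This sketch assumes a signal speed of about 2 × 10^8 m/s in the conduit. The distance, hop count, and per-hop switching time are hypothetical, as are the function names.

```python
def idle_delay_s(distance_m, hops, switching_s_per_hop, signal_speed_mps=2e8):
    """Fixed delay: propagation (distance / signal speed) plus per-hop switching."""
    return distance_m / signal_speed_mps + hops * switching_s_per_hop


def effective_delay_s(d0_s, utilization):
    """Approximate delay under load, D = D0 / (1 - U), for 0 <= U < 1.

    At U = 0 queuing adds nothing; as U approaches 1, queuing delay grows without bound.
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    return d0_s / (1 - utilization)


d0 = idle_delay_s(2_000_000, 4, 0.001)   # 2,000 km, 4 hops, 1 ms switching each
```

At half load, delay doubles. At 90 percent load, it is ten times the idle figure. This is why networks are engineered to avoid running near capacity.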

Throughput, a system capacity constraint, often popularly synonymous with bandwidth, is measured in bits per second (bps). The physical carrying capacity of conduit establishes a ceiling rate, an overall throughput that cannot be exceeded even under the best circumstances. Effective throughput recognizes there are physical capacities to intermediate hardware devices (hubs, routers, switches, and gateways) which produce a second, lower ceiling for best case transmissions between two particular points. The effective throughput actually achieved in a particular transmission depends on the physical layout of the network, data coding (data rate) and network rate control algorithms in addition to physical link protocols and conduit capacity.

The product of delay and throughput (the delay-throughput product) measures the total amount of data in transit, while utilization is the share of capacity in use. A high utilization is known as congestion, while a node with a high queuing delay is known as a bottleneck. Network optimization tries to lower fixed delay and increase capacity through design. Given a particular design, network optimization uses network rate control (traffic shaping) to monitor incoming traffic, dropping or rejecting packets that exceed effective throughput. The goal of traffic shaping is to use estimates of combinatorial, probabilistic, and variational properties in utilization patterns to maximize efficiency (the number of successful messages) and speed, while minimizing costs and avoiding congestion and bottlenecks. Additionally, network optimization (through the simultaneous interaction of design and traffic shaping) seeks to lower utilization to avoid both global congestion and local congestion in bottlenecks.
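One widely used rate-control mechanism is the token bucket, which the text does not name. Tokens accumulate at a fixed rate up to a burst limit. A packet is admitted only if enough tokens are on hand; otherwise it is dropped. Dropping is policing; a shaper would delay the packet instead. The class name, rate, and burst size below are hypothetical.

```python
class TokenBucket:
    """Token-bucket rate controller: admit traffic up to `rate` bytes/s with bursts up to `burst`."""

    def __init__(self, rate, burst):
        self.rate = rate        # bytes of credit added per second
        self.burst = burst      # maximum stored credit, in bytes
        self.tokens = burst     # start with a full bucket
        self.last = 0.0         # time of the previous packet, in seconds

    def admit(self, now, size):
        """Return True if a packet of `size` bytes arriving at time `now` is admitted."""
        # Refill credit for the elapsed time, never exceeding the burst limit.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if size <= self.tokens:
            self.tokens -= size
            return True
        return False  # exceeds the allowed rate: drop (police) the packet


tb = TokenBucket(rate=1000, burst=1500)
results = [tb.admit(0.0, 1500), tb.admit(0.0, 100), tb.admit(1.0, 1000),
           tb.admit(1.5, 600), tb.admit(2.0, 1000)]
```

A full-size burst is accepted at once. Traffic beyond the sustained rate is refused until credit builds up again.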

In computer network optimization, routing, packing, and the range of combinatorial, probabilistic, and variational problems similar to those of the telephone network are encountered. Intermediate hardware devices are used to enforce solutions to network optimization. Furthermore, computer network design protocols enable intermediate devices and computers to communicate across platforms, software, and continents. Hence, in addition to optimizing the physical or logical topology of the network, computer network engineers are concerned with the compatible interaction of hardware and software.

The fourth technical objective is flow control or the receiving computer's need to prevent the incoming pulses from overwhelming its ability to decode those pulses into bits. This can be because the sending computer's send rate exceeds the receiving computer's receive rate or because the sending application is faster than the receiving application. The receiving computer's objective is to avoid becoming overwhelmed by too fast or large a flow. In addition to such flow control activities, the receiving computer may need to acknowledge receipt or request error correction.
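Window-based flow control, the general approach TCP takes, can be sketched as a tick-by-tick simulation. The function and its parameters are hypothetical. Each tick, the sender may send only as much as the receiver advertises as free buffer space. The receiving application then reads at its own, possibly slower, pace.

```python
def transfer(total_bytes, receive_buffer, read_per_tick):
    """Simulate a fast sender limited by the receiver's advertised window.

    Returns (ticks_taken, peak_buffer_occupancy).
    """
    if read_per_tick <= 0:
        raise ValueError("the receiving application must read something each tick")
    remaining, buffered, delivered = total_bytes, 0, 0
    ticks = peak = 0
    while delivered < total_bytes:
        window = receive_buffer - buffered       # free space the receiver advertises
        sent = min(window, remaining)            # sender never exceeds the window
        remaining -= sent
        buffered += sent
        peak = max(peak, buffered)
        read = min(read_per_tick, buffered)      # receiving application drains the buffer
        buffered -= read
        delivered += read
        ticks += 1
    return ticks, peak
```

However fast the sender is, buffer occupancy never exceeds the receiver's buffer. A slow reader stretches out the transfer instead of being overwhelmed.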

These four objectives are more difficult to achieve because there are many manufacturers of hardware and conduit. Additionally, software companies are typically not in the hardware or conduit business. Therefore, except in their earliest history, computer networks have not been designed as part of a uniform, centrally controlled universal system such as the original PSTN Bell system. Much original research on computer networks did come from institutions such as AT&T's Bell Labs, the U.S. Defense Department, IBM, Hewlett-Packard, and Intel, where the general systems approach was emphasized. However, as networks and computing power grew, data communication evolved from a centrally planned systems approach into a sub-task approach, based on an innovative marketplace composed of a mix of small and large vendors.

3.4.4 OSI Model of Hierarchical Networking Sub-tasks

Another important technical characteristic of computer network design is the OSI (Open Systems Interconnection) model, around which standards and protocols have been developed to foster compatible data communications among software and hardware products offered by competing vendors. To reduce the inherent complexity of studying data communication across a network, the International Organization for Standardization (ISO) created seven layers of data communication (each representing a distinct sub-task), known as the OSI reference model. Scientists, vendors, and users formed committees to propose and establish protocols and standards for each layer. For each layer, more than one standard exists because different networking needs could best be met by an "open system" rather than a closed proprietary system.

Standardization helped to prevent market failure that would have resulted from an otherwise inevitable delay as software and hardware vendors each tried to develop a single uniform way of networking. According to Comer and Droms (1999), a protocol is an agreement about communication that specifies message format, message semantics, rules for exchange, and procedures for handling problems. Without protocols (and even with them), messages can become lost, corrupted, destroyed, duplicated, or delivered out of order. Furthermore, Comer and Droms argue that protocols help the network distinguish between multiple computers on a network, multiple applications on a computer, and multiple copies of a single application on a computer. More detail about protocols is given in 4.5.2 and 4.9.6.
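The four elements of a protocol listed by Comer and Droms can be illustrated with a made-up message format. The header layout, field names, and functions below are all hypothetical. Host numbers distinguish computers. Port numbers distinguish applications, and different source ports distinguish copies of one application. A sequence number supports detecting loss, duplication, or reordering. A CRC-32 checksum lets the receiver reject corrupted messages.

```python
import struct
import zlib

# Hypothetical header layout (network byte order, 20 bytes):
#   src_host (4), dst_host (4), src_port (2), dst_port (2), seq (4), crc32 (4)
HEADER = struct.Struct("!IIHHII")


def encode(src_host, dst_host, src_port, dst_port, seq, payload):
    """Message format: fixed-size header followed by the payload bytes."""
    crc = zlib.crc32(payload)
    return HEADER.pack(src_host, dst_host, src_port, dst_port, seq, crc) + payload


def decode(message):
    """Parse a message; procedure for problems: reject it if the checksum fails."""
    src_host, dst_host, src_port, dst_port, seq, crc = HEADER.unpack_from(message)
    payload = message[HEADER.size:]
    if zlib.crc32(payload) != crc:
        raise ValueError("corrupted message")
    return {"src": (src_host, src_port), "dst": (dst_host, dst_port),
            "seq": seq, "payload": payload}


msg = encode(1, 2, 5000, 80, 7, b"hello")
```

Both ends must agree on every byte of this layout. This is exactly what a published protocol standard settles among competing vendors.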

The OSI reference model has been criticized as a dated, theoretical model that took various standards bodies a decade to develop. Some associated standards are theoretical in that they are not yet implemented or never will be. Markets seem capable of implementing the most useful protocols, while discarding others. The seven layered OSI protocol stack is meant as a reference model of the sub-tasks involved in networked computer communications, and not as a description of reality or a definitive taxonomy.

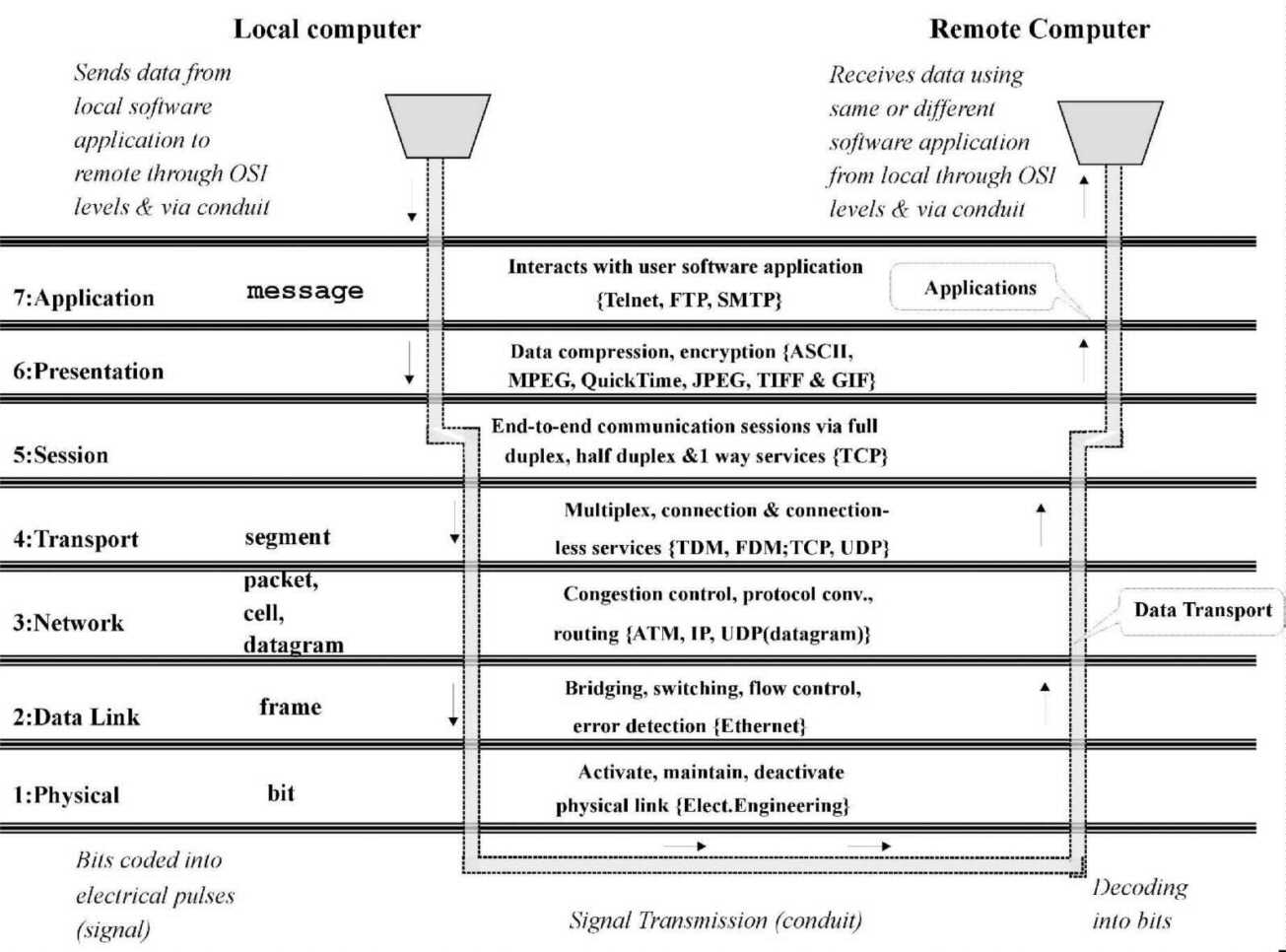

Figure 3-5 shows the OSI model's hierarchical protocol stack. Data from local computer software applications are handed off down the layers until they are transmitted to the remote computer. At the remote end, the process is reversed sequentially so the remote user's software receives the data [Jain, 1999; Covill, 1998; Sheldon, 1998; Socolofsky and Kale, 1991].

Figure 3-5: OSI model of networking layers.

The process of data communication begins in the OSI model when a software user at a local machine sends a communication to a user (of the same or different software) at a remote machine. The local software application communicates downward to the application layer (layer seven) of the protocol stack in units of data called messages. Each layer communicates with the layer directly below it, creating a new data unit to be passed on. Therefore, the message is converted, in turn, into a segment, cell, packet, or frame. At layer one, frames are turned into bits. Bits are then encoded into electrical pulses and sent as signals over conduit (bottom of Figure 3-5).
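As one example of how bits become electrical pulses, Manchester encoding is sketched below. The text does not name it; it is the line code used by 10 Mbps Ethernet. Each bit becomes two half-bit signal levels with a transition in the middle. This sketch follows the IEEE 802.3 convention (0 = high then low, 1 = low then high), with +1 and -1 standing in for voltage levels. The function names are hypothetical.

```python
def manchester_encode(bits):
    """Turn bits into half-bit signal levels: 0 -> (+1, -1), 1 -> (-1, +1)."""
    levels = []
    for bit in bits:
        levels.extend((-1, +1) if bit else (+1, -1))
    return levels


def manchester_decode(levels):
    """Recover bits from pairs of levels; every bit must have a mid-bit transition."""
    if len(levels) % 2:
        raise ValueError("signal must contain whole bit periods")
    bits = []
    for first, second in zip(levels[0::2], levels[1::2]):
        if first == second:
            raise ValueError("missing mid-bit transition")
        bits.append(1 if (first, second) == (-1, +1) else 0)
    return bits
```

The guaranteed transition in every bit period lets the receiver keep its clock synchronized. The price is a signal that changes twice as often as the data rate alone would require.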

Upon receipt at the remote end, the pulses are decoded into bits, frames, and packets as they are passed from layer one on up to layer seven. At that stage, the application layer (layer seven) sends a message to the software application used by the remote user and data communication has occurred. Importantly, the receipt of the message by the remote device is the technical engineering standard for successful data communication (machine-machine communication). The economic importance of that communication may or may not depend on whether a human operator at the remote end can process the data into useful information. In some cases, the remote computer processes and reduces the incoming data into useful information before a human operator sees it. In other cases, the efficiency and effectiveness of human-human communication is at risk if the sender causes the local application to send too much or too little data for human-human communication to occur.

At each layer in Figure 3-5, the technical sub-tasks accomplished in that layer are outlined, followed in brackets with commonly implemented protocols that operate in that layer. The transport-session layer boundary differentiates the upper layers (application layers) from the lower layers (the data transport layers) as shown on the left. On the sending end, each layer uses a different unit (envelope) to carry the original data (and the overhead it and other units add) to the next layer. On the receiving end, the overhead corresponding to each layer is sequentially stripped off to provide guidance on how that layer should handle the remaining data and overhead, until the data alone passes from the application layer to the software. In this sense, each layer in the receiving computer's stack gets the same data that was sent by the corresponding layer in the sending computer's stack.
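Encapsulation and its reversal can be sketched with strings standing in for headers. The bracketed labels are placeholders, not real header contents, and the function names are hypothetical. Real data link frames also add a trailer, which is omitted here. Going down, each layer wraps the unit from above in its own envelope. Going up, each layer strips the envelope its peer added, outermost first.

```python
# OSI layers 7 through 2, top to bottom; the physical layer then sends the bits.
LAYERS = ["application", "presentation", "session", "transport", "network", "data link"]


def send(message):
    """Going down the stack, each layer prepends its own (placeholder) header."""
    unit = message
    for layer in LAYERS:
        unit = f"[{layer}]" + unit
    return unit


def receive(unit):
    """Going up the stack, each layer strips its peer's header, outermost first."""
    for layer in reversed(LAYERS):
        header = f"[{layer}]"
        if not unit.startswith(header):
            raise ValueError(f"expected {layer} header")
        unit = unit[len(header):]
    return unit


wire = send("hi")
```

The extra characters in `wire` are the accumulated overhead. Each receiving layer reads exactly the envelope its sending counterpart wrote.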

The remaining lower layers (data link, network, and transport) help engineers with two technical objectives: maximizing the bits sent by the sending computer while preventing the signal from overwhelming the receiving computer. Notably, the establishment of protocols helps to conserve engineering talent because an engineering study of each computer at every point on a network is unnecessary. Lower level (data transport) protocols accomplish flow control, error checking, and the grouping of bits into addressable envelopes (frames, packets).
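Error checking at these layers often uses a simple checksum. The sketch follows the 16-bit ones'-complement Internet checksum described in RFC 1071 and used by IP, TCP, and UDP. The function names are hypothetical. The sender computes the checksum over the data. The receiver recomputes it, and a mismatch signals a transmission error.

```python
def internet_checksum(data: bytes) -> int:
    """16-bit ones'-complement checksum in the style of RFC 1071."""
    if len(data) % 2:
        data = bytes(data) + b"\x00"              # pad to whole 16-bit words
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]     # add each big-endian 16-bit word
        total = (total & 0xFFFF) + (total >> 16)  # fold any carry back into the sum
    return ~total & 0xFFFF


def verify(data: bytes, checksum: int) -> bool:
    """Receiver side: recompute and compare."""
    return internet_checksum(data) == checksum


sample = bytes([0x00, 0x01, 0xF2, 0x03, 0xF4, 0xF5, 0xF6, 0xF7])
```

The checksum is cheap enough to compute at every hop. It catches common transmission errors, though not every possible corruption.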

The objective of overall network optimization does not map to any single OSI level. This flexibility can bring important externalities to the network. For example, without any market exchange, an advance in a data compression protocol (accomplished in layer six) can enhance overall network functionality by effectively reducing the number of bits that need to be encoded for transmission in layer one. This in turn reduces the bits, frames, and packets transported downward by layers five through two at the local machine and upwards from layers one to six at the remote machine. The protocol stack creates economic complements at one layer and they multiply through the network. This kind of interrelationship is one of the hallmarks of the more recent network generations.
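The compression externality can be demonstrated with Python's standard `zlib` module. Here it stands in for a presentation-layer compression protocol. The 1,500-byte frame payload and the repetitive sample report are hypothetical. The sketch counts how many frames the lower layers must carry with and without compression.

```python
import math
import zlib

FRAME_PAYLOAD = 1500  # hypothetical maximum payload per frame, in bytes


def frames_needed(n_bytes, payload=FRAME_PAYLOAD):
    """Frames required to carry n_bytes of data."""
    return math.ceil(n_bytes / payload)


# A repetitive report (e.g., routine field sensor readings) compresses well.
report = b"field=7 moisture=31% temp=24C status=OK\n" * 500
compressed = zlib.compress(report)

before = frames_needed(len(report))
after = frames_needed(len(compressed))
```

Fewer frames means fewer packets, bits, and pulses at every lower layer on both machines. No change to those layers is required.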

Each network generation uses the OSI model differently in attempting to simultaneously achieve the four technical networking objectives. Those differences will be briefly explored during the discussion of the generations beginning next in 3.5. Very generally, the layers map onto the four network engineering objectives as follows. The physical layer directly represents the objective of maximizing the rate at which the signal travels through the conduit, the data rate. Results from electrical engineering were used to establish protocols to code bits (data) into electrical pulses (signals) to be transmitted through the conduit. Based upon the conduit's physical capacity, coding and signal technologies work with physical layer protocols to help speed up the data rate. The data rate objective is a function of bandwidth (conduit carrying capacity), the signal-noise ratio, and encoding.

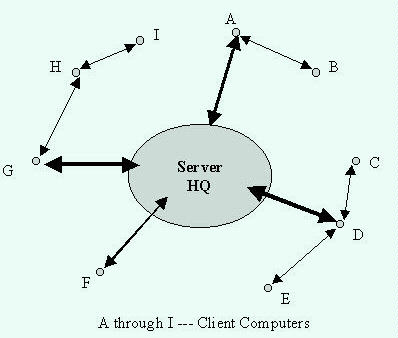

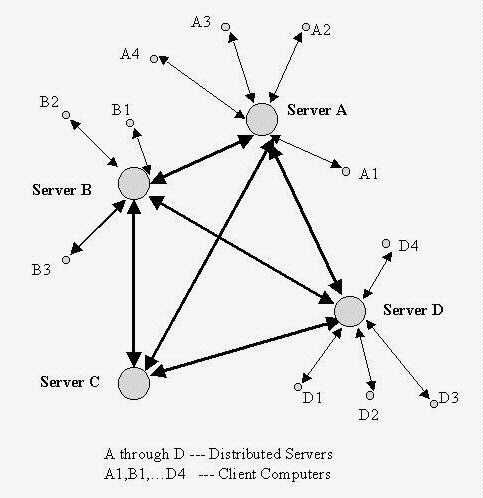

Six economic generations of computer networks reduce the crazy quilt of details from section 3.4 (four network components, four engineering objectives, seven OSI layers, service primitives, and the many uses of computer networks) into six eras. Each economic generation broadly summarizes the technologically evolving underpinnings of computer networking. It is important to note that the computer networks actually found in Florida agribusiness are hybrids of more than one generation, with countless inter- and intra-generational varieties. Readers who require a more technical (and engineering-oriented) treatment may consult Socolofsky and Kale (1991), Sheldon (1998), Comer and Droms (1999), or a variety of corporate sources such as Cisco (1999), Novell (1999), or Lucent-Ascend (1999).

Babbage and others are credited with early ideas that resulted in the first computer, the post-WWII ENIAC. Mainframes, especially those developed at IBM, dominated the computing world until 1971, when Marcian Hoff invented the microprocessor at Intel. In 1975, the same year Sony launched the Betamax video recording standard, Bill Gates showed that the BASIC programming language could operate on a microprocessor. By 1980, Intel was able to place 30,000 transistors on a chip that ran far more rapidly than the original microprocessors did. In 1981, IBM entered the PC market, hoping to capitalize on the mainframe market share it enjoyed. IBM chose Intel and Microsoft as vendors. IBM made what many would call the worst business mistake of all time when it failed to obtain exclusive rights to Microsoft's software or Intel's hardware.

There are six inexact economic generations of computer networking: time-sharing with dumb terminals, centralized networks, early peer-to-peer LANs and later client-server LANs, client-server WANs, distributed networks, and inter-networks. Each generation represents a simplified model of a complicated technical network. Additionally, while each generation solves combinatorial, probabilistic, and variational optimization problems, these classes of network optimization problems are modeled differently than in the telephone network. For example, computer networks can use "store-and-forward" message or packet switching algorithms instead of the real-time, always-connected circuit switching of the telephone network. Because data, rather than conversations, are transmitted over a computer network, technical constraints on a given-sized computer network are inherently more flexible than on a similarly sized telephone network. The constraints and optimization objectives each vary according to the size and generation of the computer network under discussion, its users, and the specific type of data transmitted.

A variety of network scales, architectures, topologies and communications distances traversed by computer networks are necessarily included in a single economic generation. Within each generation, a variety of software, hardware, and conduit have been implemented to loosen network constraints. The discussion will go from simplest to most complex and earliest to most recent. Two points should be noted. First, there can be considerable variation within generations due to technological innovation. Second, variation between generations can be subtler than portrayed.

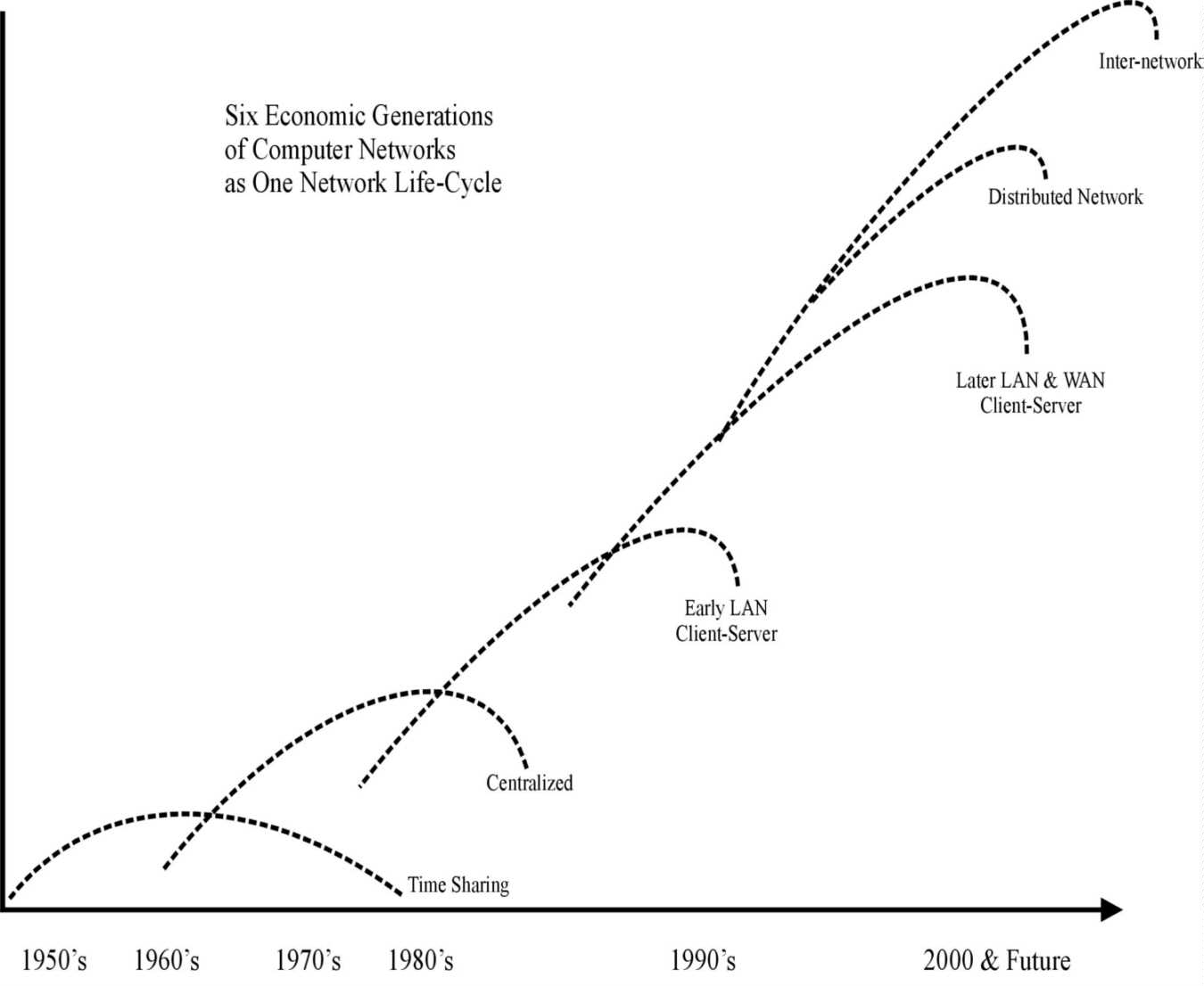

Figure 3-6 uses a product life cycle to frame the rough historical era of each generation. Unlike animal generations, however, the gestation period is shortening at an increasing rate as a function of technological change. All six generations share three characteristics. First, no generation dies; each becomes part of its successor. Thus, while dumb-terminal, centralized, time-share networks are ancient history (relative to computer history at least), a popular modern form of inter-network uses outsourced application servers in a thin client star network.

This introduces the second characteristic: the quality and characteristics of networking goods are not necessarily comparable through time. Today's thin client may not have disk space or run applications locally, but it can display high-resolution graphics that would have swamped the CRT screens and 300 baud modems of three decades ago. Third, the s-curves in Figure 3-6 depend on successive generations, each with new physical limits [Afuah, 1998, pp. 120-125]. The technologies ushered in by a specific generation are often backward compatible with those of preceding generations.

Figure 3-6: Six economic generations of computer networks plotted as life cycles in time.

3.5.1 Time-Sharing Networks and Dumb Terminals

Time-sharing networks are the first economic generation of computer network. These networks date from the time when computers were expensive, large machines that required their own controlled atmospheres and experts. Time-sharing network hardware consisted of a single mainframe computer and a number of individual links to dumb terminals. Dumb terminals had no memory or storage capabilities, so they were far slower at sending and receiving data than the mainframe to which they were linked. Text and graphics could be printed only at the mainframe. Data flowed from a dumb terminal's keyboard over primitive conduit to the mainframe, where computations were performed and print jobs were executed.

The terminal's screen could display text responses, but special keyboard control characters had to be used to keep the display from scrolling faster than the terminal operator could read it. When receiving input from remote terminals, transmission was asynchronous: the mainframe on the other end received characters one at a time as they were typed, which limited the maximum data rate. Asynchronous transmission protocols added a start bit, a stop bit, and optionally a parity bit to each character, increasing the number of bits to be transmitted. Most data entry, however, was in the form of punched cards that had to be taken to the mainframe to be read by special card readers.
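The overhead that asynchronous framing adds to every character can be shown with a short sketch. It assumes a common seven-data-bit, even-parity, one-stop-bit format; the function names are hypothetical and the text does not specify which framing the early terminals used.

```python
from __future__ import annotations

def frame_async_char(ch: str, data_bits: int = 7, parity: str = "even") -> list[int]:
    """Return the line bits for one asynchronously transmitted character.

    Assumed frame layout: one start bit (0), the data bits least-significant
    bit first, an optional parity bit ("even", "odd", or "none"), one stop bit (1).
    """
    code = ord(ch)
    if code >= 2 ** data_bits:
        raise ValueError("character does not fit in the chosen number of data bits")
    data = [(code >> i) & 1 for i in range(data_bits)]  # least-significant bit first
    bits = [0] + data                                    # start bit signals a new character
    if parity in ("even", "odd"):
        ones = sum(data)
        # Even parity makes the total number of 1s even; odd parity makes it odd.
        bits.append(ones % 2 if parity == "even" else 1 - ones % 2)
    bits.append(1)                                       # stop bit returns the line to idle
    return bits

def chars_per_second(line_bps: float, bits_per_frame: int) -> float:
    """Characters actually delivered per second once framing bits are counted."""
    return line_bps / bits_per_frame

if __name__ == "__main__":
    frame = frame_async_char("A")              # 'A' is 65, binary 1000001
    print(frame)                               # [0, 1, 0, 0, 0, 0, 0, 1, 0, 1]
    print(chars_per_second(300, len(frame)))   # 30.0 characters per second at 300 bps
```

Under these assumptions only 7 of every 10 bits on the line carry data, so a 300 bps terminal link delivers about 30 characters per second.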

Computer engineers of the time concentrated on developing faster central processors and more programming flexibility rather than on improving data communication. Data were moved on punched cards and magnetic media instead of over physical networks. The early lack of emphasis on data communication arose partly because networks that needed long connections (outside the building where the mainframe was located) relied on noisy analog telephone local loops and analog connecting networks. Another reason was that data rates were 300 bps or slower.

Time-sharing networks were usually not owned by the companies that used them. Instead, they were early examples of outsourcing, where access to the network, connection time, and processor time were each billable items. Current examples most similar to time-sharing networks include point-of-sale (POS) terminals at gas stations and stores, and ATMs, which perform specific tasks (such as credit card verification or cash withdrawal) using extremely simple terminals that share a central processing system.

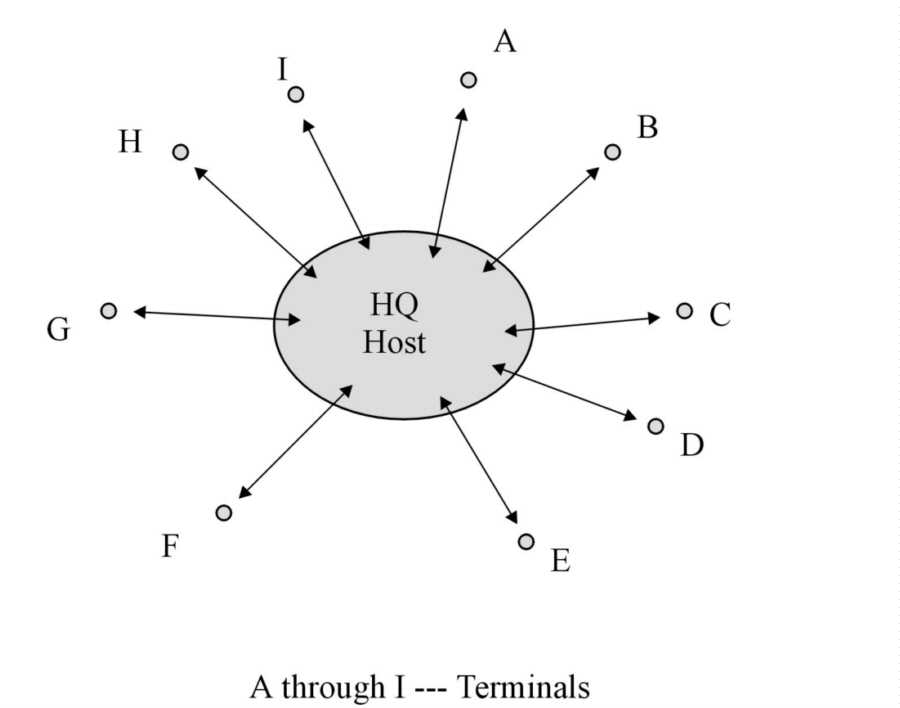

Centralized networks are the second economic generation of computer networks, as shown in Figure 3-7. While similar to time-sharing networks, there were several important differences. The first difference between time sharing and centralized networks was that the central computer (or minicomputer host) was typically owned by one company instead of shared. As time passed from the 1960's into the late 1970's, scaled-down mainframes (minicomputers) became more affordable for large and then medium-sized firms. Second, centralization differed from the previous time-share network in that up to 100 concurrent users could share overall computing capacity through remote batch processing and limited local processing.

Figure 3-7: Generic example of a centralized computer network.

The main processor (or host computer) was centrally located (at headquarters, HQ) with remote and local terminals (A through I in the figure) connected via direct links. The term centralized means that databases and the processor were kept at the host location. While later centralized networks featured remote microcomputers linked to a mainframe or minicomputer, the bulk of capacity still rested in the central host computer.

Communication made more use of synchronous transmission and became more bi-directional than it had been under the simplex time-sharing regime, flowing in both directions either one at a time (half duplex) or simultaneously (full duplex). The centralized configuration permitted some terminal-to-terminal message traffic and local printing of jobs. Centralized computing also allowed limited storage and computing on some of the remote microcomputers attached to the network. Centralized computing replaced time-sharing and dumb terminals when mainframe prices fell and 8086- and 8088-based PCs first became available, so that an organization could own (rather than rent) its computer network. Each terminal had a limited ability to share the resources of the host.

Centralized connections used coaxial cable locally and low-speed telephone lines, either dedicated (leased) lines or on-demand dial-up, for long connections. The telephone lines in these systems carried 300 to 1,200 bps to remote terminals, while early coaxial cable reached local machines at a faster data rate. The original centralized networks were stand-alone networks that restricted benefits and ownership of the network to a single organization at one or more locations.

Under centralized computing, flow control and error detection were especially important because dial-up connections and local conduit were subject to interference and noise. A single interruption due to noise or interference in a large and lengthy transmission could require that the entire contents be retransmitted, often with no better results. File and application sharing began to heighten the need for data communication.
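Why a single burst of noise was so costly can be quantified with a simple model. The sketch below assumes independent bit errors at a fixed bit error rate and perfect error detection, both simplifications (real line noise tends to arrive in bursts); the file size and error rate are hypothetical. It compares resending the whole transmission after any error with resending only the damaged block.

```python
import math

def expected_bits_sent(total_bits: int, block_bits: int, bit_error_rate: float) -> float:
    """Expected bits placed on the line when each block is resent until it arrives intact.

    A block of n bits survives with probability (1 - BER) ** n, so the number of
    attempts per block is geometric with mean 1 / (1 - BER) ** n. A final partial
    block is treated as a full block (padding), a simplification.
    """
    blocks = math.ceil(total_bits / block_bits)
    p_ok = (1 - bit_error_rate) ** block_bits
    return blocks * block_bits / p_ok

if __name__ == "__main__":
    file_bits = 100_000   # hypothetical transmission size
    ber = 1e-5            # hypothetical bit error rate on a noisy line
    whole = expected_bits_sent(file_bits, file_bits, ber)   # resend everything on any error
    blocked = expected_bits_sent(file_bits, 1_000, ber)     # resend only the bad 1,000-bit block
    print(round(whole), round(blocked))                     # roughly 272,000 versus 101,000
```

Under these assumptions the whole-file approach sends about 2.7 times the original data on average, while 1,000-bit blocks add only about 1% overhead, which is one motivation for the block-oriented error detection that later protocols adopted.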

Centralized networks were never phased out of many organizations. Often, as an organization upgraded computers, a minicomputer-based centralized network remained operational as a secure, separate "legacy" network. Newer PC-based network designs relied on more user-friendly software to accomplish simpler tasks. The cost of reprogramming existing specialized centralized networks to function on less-powerful microcomputer networks was high.

Examples of centralized networks include large retailers' POS cash registers, airline reservation systems, and teller and loan officer systems in banks. To enable all users to share the host, message switching was used instead of circuit switching. Under early message switching schemes, the host was able to "store and forward" instructions from terminals and messages from terminal to terminal, avoiding the congestion at transmission time (such as "busy signals") found in the telephone network.
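The store-and-forward idea can be sketched as a toy host that accepts every message immediately and holds it until the destination terminal is free, rather than refusing it the way a circuit switch returns a busy signal. The class and method names are hypothetical, and the single-letter terminal names merely echo the labels in Figure 3-7; real message-switching hosts also handled priorities, acknowledgments, and storage limits that this sketch omits.

```python
from collections import deque

class StoreAndForwardHost:
    """Toy message-switching host: messages to a busy terminal are queued, not refused."""

    def __init__(self, terminals):
        self.queues = {t: deque() for t in terminals}  # one outbound queue per terminal
        self.busy = set()                              # terminals currently unable to receive

    def submit(self, src, dst, text):
        """Store: the host accepts the whole message at once, whatever dst is doing."""
        self.queues[dst].append((src, text))

    def forward(self):
        """Forward: deliver queued messages, in arrival order, to every free terminal."""
        delivered = []
        for dst, queue in self.queues.items():
            while queue and dst not in self.busy:
                src, text = queue.popleft()
                delivered.append((src, dst, text))
        return delivered

if __name__ == "__main__":
    host = StoreAndForwardHost("ABC")
    host.busy.add("B")                   # terminal B is occupied
    host.submit("A", "B", "hello")       # accepted anyway; no busy signal
    print(host.forward())                # [] because B is still busy
    host.busy.discard("B")
    print(host.forward())                # [('A', 'B', 'hello')]
```

The sender never waits for a connection; the cost of congestion shows up as delivery delay at the host instead of a rejected attempt.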

3.5.3 Early LANs: Peer-to-Peer Networks

Early LANs (Local Area Networks) are the third economic generation of computer network and would evolve into the first of two client-server generations. LANs are used to connect computers to a network within a single office, floor, building or small area. The scientific basis for LANs is the locality principle that states that computers are most likely to need to communicate with nearby computers rather than distant ones [Comer and Droms, 1999].

LANs began to be used in the early to mid 1970s to allow groups of computers to share a single connection in a larger centralized network. LANs are owned and readily managed by a single organization. They are useful to an organization seeking to share hardware, applications, data, and communications within a local area. Many LANs were peer-to-peer networks where most stations on the network had equal capacities for processing and display.

However, sharing a single link among multiple computers required a way to prevent two or more computers from transmitting at the same time (a collision). Several arbitration mechanisms, called MACs (Medium Access Controls), were developed within the OSI data link layer to avoid interruption of one computer's transmission by another's. Early LANs used ALOHA and slotted ALOHA, low-efficiency protocols with collision rates of at least 26% [Jain, 1999]. The way collisions are handled differs by protocol, and protocols are implemented in topologies. The IEEE and ANSI (American National Standards Institute) created standard 802.3, Ethernet, which recently became (and continues to be) the most popular LAN standard.
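The low efficiency of ALOHA follows from the classical throughput formulas, which assume Poisson frame arrivals at an offered load of G frames per frame time. The sketch computes them; one plausible reading of the 26% figure, which we have not confirmed against [Jain, 1999], is the share of slots that contain a collision when slotted ALOHA runs at its best load (G = 1).

```python
import math

def pure_aloha_throughput(G: float) -> float:
    """Successful frames per frame time for offered load G under pure ALOHA."""
    # A frame succeeds only if no other frame starts within a two-frame-time window.
    return G * math.exp(-2 * G)

def slotted_aloha_throughput(G: float) -> float:
    """Successful frames per slot for offered load G under slotted ALOHA."""
    # Aligning transmissions to slot boundaries halves the vulnerable window.
    return G * math.exp(-G)

def slotted_aloha_collision_fraction(G: float) -> float:
    """Fraction of slots holding two or more frames (a collision)."""
    p_empty = math.exp(-G)          # no frame in the slot
    p_success = G * math.exp(-G)    # exactly one frame in the slot
    return 1 - p_empty - p_success

if __name__ == "__main__":
    print(round(pure_aloha_throughput(0.5), 3))             # 0.184, the pure ALOHA peak, 1/(2e)
    print(round(slotted_aloha_throughput(1.0), 3))          # 0.368, the slotted ALOHA peak, 1/e
    print(round(slotted_aloha_collision_fraction(1.0), 3))  # 0.264 of slots collide at that peak
```

Even at their best operating points, pure and slotted ALOHA deliver only about 18% and 37% of channel capacity as useful traffic.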

LANs are connectionless services so that once a computer gains access to the network, it puts packets on the network, but has no assurance that the distant computer gets them. LANs allow unicast, multicast, and broadcast messages so that a single transmission may be sent to a single network user, a subset of users, or all users simultaneously. LANs connect stations to the network including computer workstations, printers, and other hardware. Users could then share resources within the LAN. LAN protocols use the physical and data link layers of the OSI protocol stack.
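On an Ethernet LAN, whether a frame is unicast, multicast, or broadcast is encoded in its destination address: the all-ones address reaches every station, and the least-significant bit of the first octet (the individual/group bit) marks a group (multicast) address. The sketch below classifies addresses on that basis; the function name and sample addresses are illustrative.

```python
def classify_mac(address: str) -> str:
    """Classify an Ethernet destination address as unicast, multicast, or broadcast."""
    octets = [int(part, 16) for part in address.replace("-", ":").split(":")]
    if len(octets) != 6:
        raise ValueError("expected six octets")
    if all(o == 0xFF for o in octets):
        return "broadcast"   # ff:ff:ff:ff:ff:ff is accepted by every station
    if octets[0] & 0x01:
        return "multicast"   # group bit set: accepted by stations in that group
    return "unicast"         # group bit clear: addressed to one station

if __name__ == "__main__":
    for addr in ("00:1a:2b:3c:4d:5e", "01:00:5e:00:00:01", "ff:ff:ff:ff:ff:ff"):
        print(addr, classify_mac(addr))
```

The broadcast test comes first because the all-ones address also has the group bit set.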

LAN topologies define how network devices are organized logically. Figure 3-8 shows three early LAN topologies: local bus, ring, and star. All three are connectionless services. Bus topologies (which work with early Ethernet standards) use a short dedicated connection (AUI cable) to a single shared conduit. Original Ethernet wiring was thicknet, a heavy coaxial cable (10Base-5) with a maximum segment length of 500 meters as measured by the length of conduit, not by the direct distance.

Later Ethernet cabling used thinnet, a thinner coaxial cable (10Base-2) attached directly to each station through a BNC T-connector. Thinnet carried the same data rate as thicknet but over a shorter maximum segment length of about 185 meters (607 feet), compared with thicknet's 500 meters. 10Base-2 also places very specific limits on the number of stations on a network. A maximum of 30 stations per segment is allowed, with trunks up to five segments long (two of which must be unpopulated and used only for distance), for a total trunk length of 925 meters (3,035 feet). Long trunks needed repeaters (devices that regenerate the signal between segments). Thinnet is cheaper than thicknet, which added to its popularity.
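The thinnet trunk rules can be expressed as a simple configuration check. The sketch assumes the 10Base-2 limits of 185 meters and 30 stations per segment, with at most five segments of which at most three are populated; it counts only stations (the standard counts every attachment, repeaters included), and the names `Segment` and `check_thinnet_trunk` are hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass

MAX_SEGMENT_M = 185            # assumed 10Base-2 segment limit
MAX_STATIONS_PER_SEGMENT = 30
MAX_SEGMENTS = 5               # five segments joined in a line by four repeaters
MAX_POPULATED_SEGMENTS = 3     # the other two carry no stations, only distance

@dataclass
class Segment:
    length_m: float
    stations: int

def check_thinnet_trunk(segments: list[Segment]) -> list[str]:
    """Return a list of rule violations; an empty list means the trunk fits the limits."""
    problems = []
    if len(segments) > MAX_SEGMENTS:
        problems.append(f"{len(segments)} segments exceeds {MAX_SEGMENTS}")
    populated = sum(1 for s in segments if s.stations > 0)
    if populated > MAX_POPULATED_SEGMENTS:
        problems.append(f"{populated} populated segments exceeds {MAX_POPULATED_SEGMENTS}")
    for i, s in enumerate(segments, start=1):
        if s.length_m > MAX_SEGMENT_M:
            problems.append(f"segment {i}: {s.length_m} m exceeds {MAX_SEGMENT_M} m")
        if s.stations > MAX_STATIONS_PER_SEGMENT:
            problems.append(f"segment {i}: {s.stations} stations exceeds {MAX_STATIONS_PER_SEGMENT}")
    return problems

if __name__ == "__main__":
    trunk = [Segment(185, 30), Segment(185, 0), Segment(185, 30), Segment(185, 0), Segment(185, 30)]
    print(check_thinnet_trunk(trunk))             # [] since the trunk is at, not over, every limit
    print(sum(s.length_m for s in trunk))         # 925 meters end to end
```

The maximal legal trunk is five 185-meter segments, which is where the 925-meter total comes from.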

Figure 3-8: LAN topologies.

Thinnet and thicknet were expensive, and the larger the network, the greater the likelihood of collisions due to propagation delay. Repeaters extended a network beyond a single segment by regenerating signals; bridges filtered traffic between segments to reduce congestion and collisions; and switches further increased throughput and network performance. However, these extra devices were expensive until the 1990s. That expense, combined with relatively high cable costs, is why LANs are local.
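How a bridge filters traffic can be shown with a minimal learning bridge: it records the port on which each source address appears, forwards a frame only to the port where its destination was last seen, drops frames whose destination is on the same segment they came from, and floods frames to unknown destinations. The class is a hypothetical sketch; real bridges also age out table entries and run a spanning tree protocol to prevent loops, both omitted here.

```python
class LearningBridge:
    """Minimal learning bridge; each port connects one LAN segment."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.table = {}   # station address -> port on which it was last seen

    def receive(self, in_port, src, dst):
        """Return the set of ports the frame goes out on (an empty set means filtered)."""
        self.table[src] = in_port          # learn which segment the sender is on
        out = self.table.get(dst)
        if out is None:
            return self.ports - {in_port}  # unknown destination: flood to other segments
        if out == in_port:
            return set()                   # same segment: filter, keeping traffic local
        return {out}                       # known destination on another segment

if __name__ == "__main__":
    bridge = LearningBridge([1, 2, 3])
    print(bridge.receive(1, "A", "B"))     # {2, 3}: B not yet known, so flood
    print(bridge.receive(2, "B", "A"))     # {1}: A was learned on port 1
    print(bridge.receive(1, "C", "A"))     # set(): A and C share segment 1
```

Because local traffic never crosses the bridge, each segment sees fewer frames and therefore fewer collisions.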

Of the three topologies, Ethernet's bus has the advantages that fixed delay is almost zero, the protocol is simple, and stations can be added without shutting the network down. However, it allows no traffic priorities and, while better than ALOHA and slotted ALOHA, has a high probability of collisions, which seriously hurts throughput at high utilization.
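Ethernet copes with collisions through truncated binary exponential backoff: after its nth collision a station waits a random whole number of slot times chosen from 0 to 2^k - 1, where k is the smaller of n and 10, and it abandons the frame after 16 attempts. The sketch below assumes 10 Mbps Ethernet, where a slot time is 512 bit times (51.2 microseconds); the function name is hypothetical.

```python
import random

SLOT_TIME_US = 51.2   # one slot = 512 bit times = 51.2 microseconds at 10 Mbps
ATTEMPT_LIMIT = 16    # the frame is discarded after 16 unsuccessful attempts
BACKOFF_LIMIT = 10    # the random range stops doubling after the 10th collision

def backoff_delay_us(collisions: int, rng: random.Random) -> float:
    """Delay before retrying a frame that has collided `collisions` times."""
    if collisions < 1:
        raise ValueError("backoff applies only after at least one collision")
    if collisions >= ATTEMPT_LIMIT:
        raise RuntimeError("excessive collisions: frame discarded")
    k = min(collisions, BACKOFF_LIMIT)
    slots = rng.randint(0, 2 ** k - 1)   # randint includes both end points
    return slots * SLOT_TIME_US

if __name__ == "__main__":
    rng = random.Random(1)               # fixed seed so runs are repeatable
    for n in (1, 2, 3, 10, 15):
        print(n, backoff_delay_us(n, rng))
```

Doubling the waiting range after each collision spreads retries out as load rises, but under heavy utilization stations spend more and more time waiting, which is the throughput penalty described above.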