Chapter 2

Foundations of the Information Economy: Communication, Technology, and Information

IT (Information Technology) producing industries (i.e. producers of computer and communications hardware, software, and services) . . . play a strategic role in the growth process. Between 1995 and 1998, these IT-producers, while accounting for only about 8 percent of U.S. GDP, contributed on average 35 percent of the nation's real economic growth.

In 1996 and 1997 . . . falling prices in IT-producing industries brought down overall inflation by an average of 0.7 percentage points, contributing to the remarkable ability of the U.S. economy to control inflation and keep interest rates low in a period of historically low unemployment.

IT industries have achieved extraordinary productivity gains. During 1990 to 1997, IT-producing industries experienced robust 10.4 percent average annual growth in Gross Product Originating, or value added, per worker (GPO/W). In the goods-producing sub-group of the IT-producing sector, GPO/W grew at the extraordinary rate of 23.9 percent. As a result, GPO/W for the total private non-farm economy rose at a 1.4 percent rate, despite slow 0.5 percent growth in non-IT-producing industries.

By 2006, almost half of the U.S. workforce will be employed by industries that are either major producers or intensive users of information technology products and services. [United States Department of Commerce, "Executive Summary", The Emerging Digital Economy II, June 1999]
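The first Commerce Department figure also implies how fast IT-producing output grew relative to the economy as a whole. A minimal worked check, assuming the standard growth-accounting approximation in which a sector's contribution to aggregate real growth equals its output share s_IT times its own real growth rate g_IT, with g the aggregate real growth rate (these symbols are introduced here for illustration and are not taken from the report):

```latex
\frac{s_{IT}\,g_{IT}}{g} = 0.35,\qquad s_{IT} \approx 0.08
\;\;\Longrightarrow\;\; \frac{g_{IT}}{g} = \frac{0.35}{0.08} \approx 4.4
```

Under that approximation, real output of the IT-producing industries grew more than four times as fast as the economy as a whole between 1995 and 1998.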

Both Chapter 2 and Chapter 3 address why networked hypercommunications have originated from the digital information economy just mentioned. Chapter 2 explores three interactive foundations of the information economy (communication, information, and technology). It ends with examples of a reality gap between technical hype and economic likelihood in the new economy, a gap that also depends on the characteristics of networks. An understanding of networks, the technical infrastructure and economic infostructure of the information economy, must nevertheless wait until Chapter 3.

2.1 Introduction: the Continuing Relevance of Economics

Chapter 2 uses economics to develop three foundations of the information economy: communications, technology, and information. As a science, economics is often defined as the study of how society allocates scarce resources to satisfy humankind's unlimited wants. Economics involves core concepts such as constraints, relative prices, incidence of taxation, symmetry of regulation, and market power. Although economics originated as "political economy" in industrial age Europe, the field's core is not out of date in a global information economy. However, the information and service orientation of the international marketplace, combined with business demand for practical inventions amid the hype of high-tech, has led to an increasing diversity of economic thought. Some argue that the times necessitate a re-examination of the artificial boundary between the order of conventional economics and the chaotic complexity of the marketplace [See Giarini and Stahel, 1989, p. 121]. At the same time, Liebowitz and Margolis warn economists to be wary of the following tendency:

So taken are we with these new technologies that we tend to treat these new inventions as sui generis, so different in essentials that we cannot even speak of them in the same terms we have used in the past. [Liebowitz and Margolis, 1995, p. 1]

To those who view economics as a static, mathematically verbose creature rarely applicable outside the academic problems of the industrial age, the hypercommunications market is a severe test of economic theory. The popular, financial, and trade media often claim that converging communications technologies are so new, so fast changing, and so different from anything known before that they defy standard economic analysis.

According to this view, using economics to study agribusiness hypercommunications is doubly irrelevant. First, some argue that conventional economics has lost its relevance to the information economy. Second, some argue that the information economy is not relevant to agriculture. On the first argument, for example, Rawlins wrote in 1992:

Classical economic theory is largely irrelevant to the early stages of a new information industry. Economics assumes that resources are finite and that there is enough time for markets to reach stability. Three things are wrong with this picture: information is not finite, there is no single stable point--there are many, and there is little time to reach stability before there is another major change. [Rawlins, 1992, node 29]

This argument appears to leave room for economics in later stages of the information industry, but an anti-economics vaccine prevents "later" from happening because exponential improvements in synergistic technologies never end; they lead only to new iterations of increasing growth. Perhaps (even in the new information economy) economics could at least serve in a supporting role via capital formation and financial market theories. However, again economics appears to be passé, because while "capital will remain important as a risk softener", knowledge "has become more important to continuous improvement," while financial markets "matter less and less to the economy" [Rawlins, 1992, node 29].

On the second irrelevancy, Rawlins, a computer scientist, expresses the popular view that standard economics (a synonym for classical in his writing) is relevant only to sectors such as agriculture that are separate from the information economy.

Standard economics applies to finite-resource markets like agriculture, mining, utilities, and bulk-goods. Such economics has little to say about information markets like communications, computers, pharmaceuticals, and bioengineering. These markets require a large initial investment for design and tooling, but enormous price reductions with increasing market growth. This growth is further compounded by positive feedback: with increasing market growth the production process gets more efficient, therefore returns increase. [Rawlins, 1992, node 29]

Apparently, conventional economics is useless in the brave new world of the information economy but still matters on the farm or in the food processing plant. Somewhat paradoxically, the information economy's power to alter economics stops short of the agricultural sector. Presumably, this is because phenomena such as large initial investment in research, price decreases with increasing market growth, and positive feedback occur in Silicon Valley, but not in the San Joaquin Valley. Yet new diagnostic and application techniques (Khanna, 1999) are but one suggestion of how high technology benefits production agriculture. Biotechnology, smart foods, and information about the safety, nutritional, and organic characteristics of food are examples of the importance of knowledge and information to agriculture as a whole. There are many more examples, such as the information and communication needs of horizontally and vertically integrated agribusiness firms.
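The cost structure Rawlins describes, a large initial investment followed by falling unit costs as output grows, reduces to simple arithmetic: average cost equals fixed cost divided by quantity, plus marginal cost. The minimal Python sketch below assumes a constant marginal cost and ignores the learning and positive-feedback effects Rawlins also mentions; the function name `average_cost` and every figure in it are hypothetical.

```python
def average_cost(fixed_cost: float, marginal_cost: float, quantity: int) -> float:
    """Per-unit cost when a one-time fixed outlay is spread over `quantity` units
    and each unit also costs a constant `marginal_cost` to produce."""
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    return fixed_cost / quantity + marginal_cost


if __name__ == "__main__":
    FIXED = 1_000_000.0  # hypothetical up-front design and tooling (or research) outlay
    MARGINAL = 2.0       # hypothetical cost of producing one more unit
    for q in (1_000, 10_000, 100_000, 1_000_000):
        print(f"{q:>9,} units: average cost {average_cost(FIXED, MARGINAL, q):,.2f}")
```

Average cost falls toward marginal cost as output rises. Nothing in the arithmetic depends on whether the up-front outlay is chip design or a crop research program.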

The discussion of the relevance of standard or conventional economics has ushered in a vocabulary debate within economics itself about what to call the information economy and how to practice a more relevant economics. To some economists and many Wall Street analysts, a new, weightless economy where information goods and knowledge services are produced and traded via technology networks has already replaced the traditional economy. Thus, a "new" economics [Kelly, 1998] or a "weightless" economics [Quah, 1996, 1997; Coyle, 1997; Cameron, 1998] naturally replaces conventional economics. However, other economists suggest that the information economy is a "network" economy that requires extension of traditional theory to new problems [Shapiro and Varian, 1998].

A body of economic thought is developing on new information age issues ranging from Internet economics to the economic role of technological change. Lamberton writes that economics will have to change.

The structural and behavioral changes conveyed by the term Information Age require the economist to leave the shelter of his Ouspenkian 'perpetual now'. The economics that survives will no doubt be less amenable to mathematical precision, and its policy counterpart will need to be more tolerant of the role of judgement. [Lamberton, 1996, p. xiv]

However, widespread use of IT and hypercommunications is helping to erase many of the reasons that the market does not correspond to economic theory. Perhaps (armed with better information) it is the economy that is catching up to economic theory, rather than economists (armed with new economics) catching up to the economy. In a communication-driven information economy, lags and adjustment periods are shorter while information and productivity are enhanced, so the economy corresponds more closely to theory.

Few would dispute that the view of the typical firm in the information economy has changed from the industrial age economic model. Until recently, many economic problems were cast from the point of view of a factory manager who produced goods in a manufacturing plant. Now, economic problems, some familiar and some new, are cast from the point of view of an entrepreneur who produces services in an information facility. While many agribusiness problems are much like those of the classical factory manager, agribusiness is increasingly confronted with the high-tech entrepreneur's problems as well.

The technician-entrepreneur at the helm of a high-tech firm needs to respond quickly to a constantly changing environment. As an individual, the entrepreneur's focus may be fixed on technology rather than directly on consumer needs. Every technical detail of an operation may be etched in such a CEO's strategic thought, to the exclusion of tactical ideas about finance, marketing, or hypercommunications. Such an entrepreneur may be striving for greater technical efficiency without adequately considering price, demand, or other economic variables. It is tempting for firms operating in the information economy (agribusinesses included) to imagine that technical expertise alone is most important.

However, firms in the information economy still meet competitors in markets as they did in the industrial age. Furthermore, economic theory typically tries to predict market rather than individual behavior. Interestingly, in markets for all kinds of goods and services worldwide, relative prices still seem to matter. An agribusiness manager may not understand the hypercommunications market, and a hypercommunications entrepreneur may have no clue about the citrus market, but both understand their bottom lines. For this reason, in spite of the contention that it cannot keep up with an economy that is increasingly based on communications, technology, and information, economics has hardly lost its relevance. If anything, economics may be even more relevant to businesses whose objectives have been forever altered by the new realities of the information economy. New, fast changing, and different as hypercommunications are, old fashioned economics is at work in the form of constraints, relative prices, regulation, profits, costs, and market power within the information economy.

Throughout the information economy (especially in hypercommunications), the views of engineers and computer scientists have resulted in new technologies, promising infant products, and exponentially growing high technology firms. This chapter will highlight technical aspects of production that are necessary for operation in the information economy. However, economic and marketing perspectives are also covered, because the non-technical side is sometimes overlooked when the discussion centers on new hypercommunication technologies. These economic and marketing perspectives are essential to firms hoping to understand how hypercommunications fit in with existing business strategies. No single view is sufficient.

Chapter 2 opens with three fundamental concepts that provide the origins of the information economy and serve as the foundation of hypercommunication networks. The first three sections present these concepts: hypercommunications (2.2), technology (2.3), and information (2.4). Rather than narrow definitions, broad conceptualizations are used to identify the inherently economic context of the information economy and the dominant role of hypercommunications. Then, section 2.5 examines limitations of what has been popularly described as the unlimited cyber frontier. It will be seen that the information economy does not lack constraints. Instead, it has different limits than the traditional manufacturing economy. These economic, behavioral, financial, and technical constraints set the tenor of the information economy and rein in super-optimistic predictions about new hypercommunication services and technologies. Section 2.6 is a short summary. Chapter 3 will cover the unique economic and technical properties of networks, and relate them back to the limitless cyber frontier notion. Taken together, Chapters 2 and 3 provide an answer to why hypercommunications are economically and technically important to agribusiness.

2.2 Communication, the First Foundation

In this section and the next two (2.3 and 2.4), the three foundations of the information economy (communication, technology, and information, defined broadly in Table 1-1 in Chapter 1) are conceptualized. Braithwaite (1955, p. 56) describes the process of defining something as a logical construction of the definiendum (the thing to be defined) in terms of the meaning of another (the definiens). In a highly technical field such as hypercommunications, there is an enormous burden to define terms. None of the twelve essential terms from Table 1-1 can easily be defined with a definiens of a few sentences. Terms such as communication, technology, and information require a longer definiens because they are conceptually deeper than technical jargon terms or acronyms such as those presented in the glossary.

As Cohen and Nagel pointed out, "the process of clarifying things really involves, or is a part of, the formation of hypotheses as to the nature of things" [Cohen and Nagel, 1934, p. 223]. Here, perspectives from economics, communications, and computer science are combined with a taxonomic treatment of hypercommunications jargon. Useful definitions are therefore conceptualizations that recognize each perspective in the synergistic whole, and such conceptualizations are necessarily longer than telegraph-style definitions.

This section argues that communication is a process that includes models that are hypotheses as to the nature of things. To this end, hypercommunication and communication are defined and conceptualized in five ways. First, a literal definition of hypercommunication is presented (2.2.1). Second, communication is conceptualized through two traditional communication models, the interpersonal model and the mass model (2.2.2). Third, a new hypercommunication model is compared with the use of the interpersonal and mass models in standard telecommunication (2.2.3). Fourth, telecommunication and hypercommunication are compared element by element (2.2.4). Fifth, and finally, hypercommunication is conceptualized through the taxonomy of hypercommunication services and technologies (2.2.5) that will be used in Chapter 4.

2.2.1 Literal Definition of Hypercommunication

What are hypercommunications, or what is hypercommunication? In 1997, Alan Stone described one "true", or "pure ideal", sense of hypercommunications:

Virtually any person who considers the future agrees that the world is in the process of major social and economic changes and that telecommunications is a driving force of those changes. If that is the case, the study of telecommunications is not simply the examination of one more sector, like pulp and paper, clothing, or automobiles. Nor is public policy for telecommunications just one more branch of public policy studies, like civil rights, airlines, or education. If the experts' projection of the future of telecommunications is a correct one, the sector will be the leading one in shaping our social, economic, and political futures. No reasonable person would attempt to predict the future with precision, but we can certainly surmise certain probable trends--the nearly uniform considerations of the experts do portend a dominating future for communications--domination so extensive that we call the sector hypercommunications. [Stone, 1997, p. 1, italics his]

A breakdown of the term hypercommunication into prefix and root gives further clarification. The prefix hyper- is defined as meaning "over, above, more than normal, excessive" [Webster's New World Dictionary, college ed., 1960, p. 714]. The opposite of hyper- is hypo-, signifying "under, beneath, below, less than, subordinated to". Thus, the status quo of communications is hypocommunications, below or beneath the developing world of hypercommunications.

2.2.2 Two Traditional Communication Models: Interpersonal and Mass

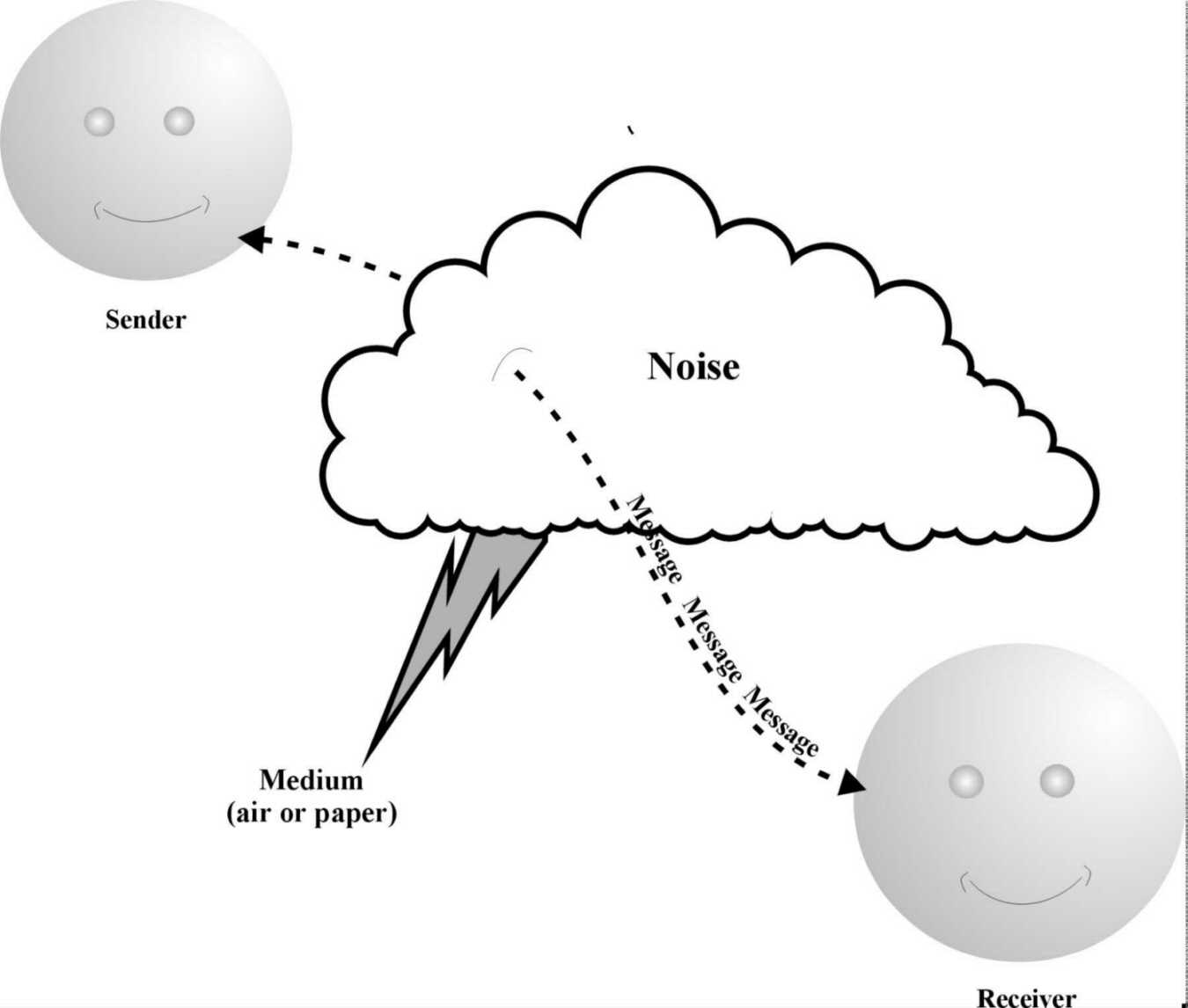

Communication signifies the transmission of a message from sender to receiver through a medium, subject to noise. As Figure 2-1 shows, a simple spoken or written message is transmitted one-way through the medium of air to a receiver, subject to noise represented by a cloud with lightning. The interpersonal model comes from the science of communication, a field that studies interpersonal communication by voice, sign language, writing, gestures, physiology, and body language.
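The four elements of Figure 2-1 (sender, medium, receiver, and noise) can be mimicked in a toy simulation. This is a minimal sketch rather than a model from the communication literature: it assumes noise simply destroys each character independently with a fixed probability, and the function name `transmit` and the '?' marker for lost characters are illustrative choices.

```python
import random


def transmit(message: str, noise: float, rng: random.Random) -> str:
    """Pass a message from sender to receiver through a noisy medium.

    Each character is independently lost with probability `noise`;
    a lost character reaches the receiver as '?'."""
    if not 0.0 <= noise <= 1.0:
        raise ValueError("noise must be a probability between 0 and 1")
    return "".join("?" if rng.random() < noise else ch for ch in message)


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the run is repeatable
    sent = "Meet at the grain elevator at noon"
    received = transmit(sent, noise=0.1, rng=rng)
    print("sender:  ", sent)
    print("receiver:", received)
```

With `noise=0.0` the receiver gets the message verbatim; raising `noise` toward 1.0 stands in for chattering, interruption, or lack of attention.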

Historically in interpersonal communication, a message was either spoken or written. It was then delivered in person to a single receiver or group of receivers by voice through the medium of air or by letter, subject to the noise of chattering, interruption, and lack of attention. Interpersonal messages can be one-way or two-way, typically depending on the custom and sociology called for by the setting. For example, oral argument before the Supreme Court of the United States is two-way if and only if it pleases the Court. A final property of interpersonal messages was that written messages could be preserved verbatim (unlike spoken messages).

As language developed from simple grunts and gestures into the complex grammars that Chomsky's transformational grammar seeks to describe, messages became more complex. As society became more specialized, so did communication. It took on non-personal forms as well. Storytelling, speechmaking, song, dance, painting, sculpture, and other kinds of expression apparently preceded the written word. Patrons of the arts began to exchange goods in return for various messages of artistic expression or entertainment works. Written messages became more complex and open to interpretation, and they became necessary for governments, religions, and town commerce. Written messages became valuable enough that markets developed for paid messengers, and later, postage. In 1776, Adam Smith wrote that the postal service was "perhaps the only mercantile project which has been successfully managed by, I believe, every sort of government" [Smith, 1937, p. 770].

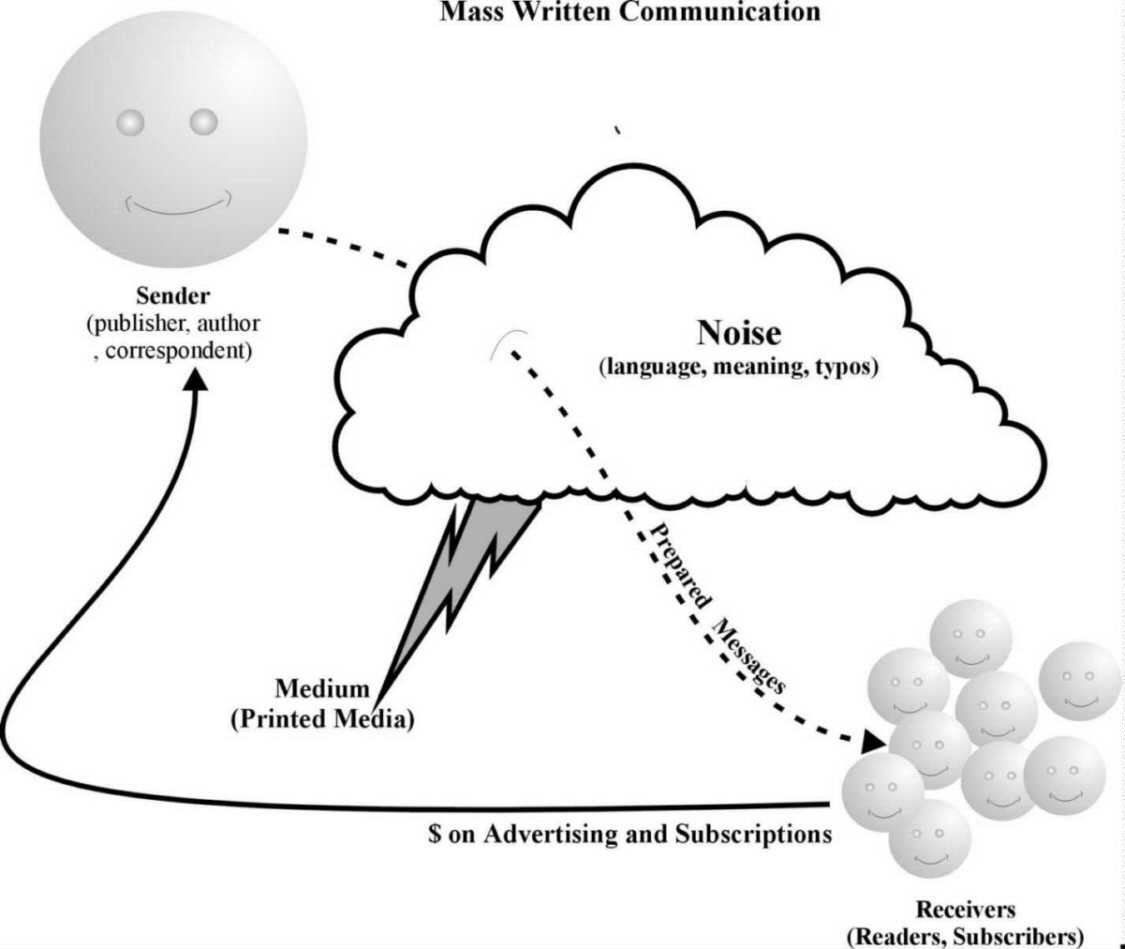

The invention of the printing press allowed mass production of written communication, so that Bibles, other books, newspapers, and magazines became relatively inexpensive forms of mass communication. The printing press added a new level of complexity to communication: the interpersonal communication model of Figure 2-1 became the mass communication model shown in Figure 2-2.

The elements of communication changed when mass written communication became technically feasible. The sender became a specialized communicator such as a publisher, writer, or correspondent who created prepared messages. The message could be duplicated and sent (through the medium of paper or newsprint) to a group of recipients rather than a single person or small group. The receiver in the interpersonal communication model became a group of readers or subscribers. Communication began to be purchased per copy or by subscription. Then, advertising (the paid transmission of a message from a sender to a target group) came to exist alongside editorial writing. Because of the high cost of entry into publishing, there were now inherently more receivers (readers) than there were senders (publishers). The roles of editor, correspondent, reporter, and scientific writer as gatekeepers were established at this time.
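The shift from Figure 2-1 to Figure 2-2 (one sender, many duplicated copies, and an editor who decides whether anything is printed at all) can be contrasted with the one-to-one case in a few lines. The Python sketch below is illustrative only; `publish`, `editor`, and the length rule inside `editor` are hypothetical stand-ins for a publisher's duplication of copies and an editor's gatekeeping judgment.

```python
from typing import Callable, Dict, List


def publish(message: str,
            subscribers: List[str],
            gatekeeper: Callable[[str], bool]) -> Dict[str, str]:
    """Mass model: a single sender's message is duplicated, one copy per
    subscriber, but only after the gatekeeper approves it."""
    if not gatekeeper(message):
        return {}  # rejected by the editor: no copies are produced
    return {reader: message for reader in subscribers}


def editor(message: str) -> bool:
    """Hypothetical editorial rule: accept non-empty items of at most 500 characters."""
    return 0 < len(message) <= 500


if __name__ == "__main__":
    readers = [f"subscriber_{i}" for i in range(1, 6)]
    copies = publish("Freeze warning for the citrus belt", readers, editor)
    print(len(copies), "copies delivered")
```

The asymmetry the text describes shows up directly: one call to `publish` serves every reader, while readers have no path back to the sender.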

2.2.3 The Hypercommunication Model Succeeds Telecommunication

As technology developed, telecommunication evolved. The prefix tele- comes from the Greek for "far off" or "distant". Telecommunication differs from both interpersonal communication and mass written communication in its medium and in the form of its messages. However, telecommunication continued to follow either the mass communication model or the interpersonal model, depending on the medium used.

The first form of telecommunication was the telegraph, followed by the telephone, radio, and finally television. The telegraph, telephone, and two-way radio were each closely modeled on the interpersonal model. Television and broadcast radio followed the mass communication model insofar as they were unidirectional, mass-produced, and subject to gatekeepers. Although telecommunications has come to have a wider definition than telegraph, telephone, and television, new technological realities have spawned hypercommunication. Unlike telecommunication, hypercommunication is a blend of both communication models shown in Figures 2-1 and 2-2.

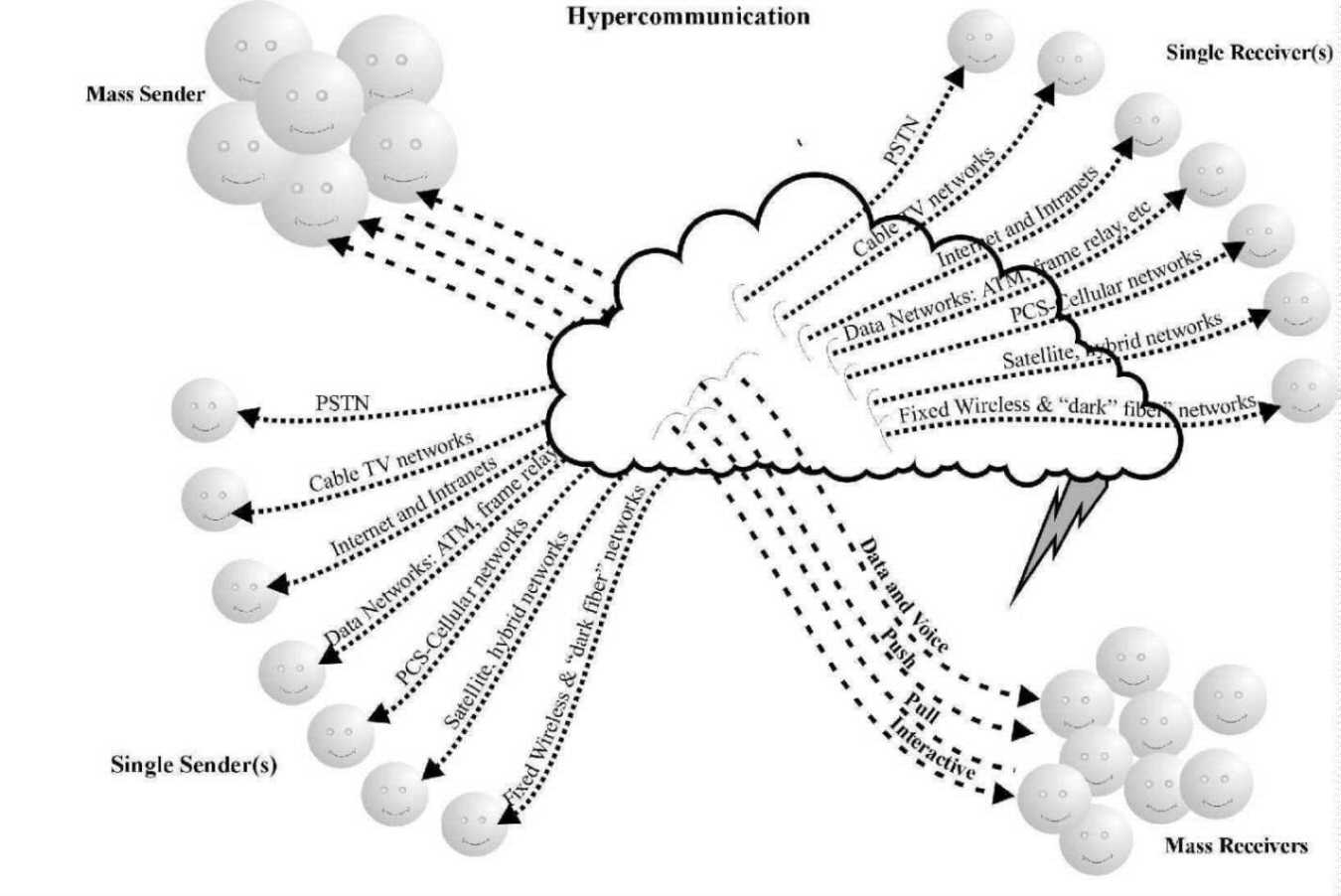

Open interconnection and networking (which came originally from data communication) are the enabling technologies of hypercommunication. The new hypercommunication model shown in Figure 2-3 is a synergistic combination of Figures 2-1 and 2-2, resulting in an array of new communication elements. In Figure 2-3, the interpersonal model, the mass model, and telecommunication are combined with data communication.

Additionally, positive network externalities are enhanced through open standards interconnection to yield a single mesh structure, a hypercommunication network. Telecommunications (already an ingredient that is itself the product of other, separate ingredients) is of course a major part of the hypercommunications pie. However, the finished product, hypercommunication, is unlike telecommunication because it is an open system featuring unique positive (and negative) synergies among the networked parts.

There are three important ways the generalized hypercommunications model of Figure 2-3 differs from its predecessor models. First, hypercommunication is based on a common, interconnected network that consists of the full set of old transmission networks along with some new high technology networks. Second, hypercommunication allows both old and new kinds of messages to be sent and received over that common network. In general, the networks and message types mesh so that each message type can travel from sender to receiver through one or all of the networks. Third, hypercommunication is based on new technologies that have redefined senders, receivers, distance, and noise, so that the social and economic relationships of communication are synergistically more powerful than before.

The first way hypercommunication differs from its predecessor models is that it is a unified mesh of networks rather than a set of separate, unconnected networks. In Figure 2-3, the hypercommunication model translates the old concept of communications media into a new concept of an open, interconnected, networked communication medium carrying many message types. Previously disparate networks are shown by the lines running from the lower left to the upper right of the diagram. These include the PSTN (Public Switched Telephone Network), cable TV networks, the Internet and other data networks, together with a variety of wireless networks (broadcast TV and radio, cellular, etc.). Before the advent of the hypercommunication model, each network was a separate medium generally based on the interpersonal or mass model. Both technology and deregulation now allow these previously separate networks to interconnect, so that interpersonal and mass communication blend with the Internet and other new technologies. The hypercommunication model enables single senders to transmit messages (of any type) to single or mass receivers through the integrated mesh structure. Similarly, hypercommunication allows mass senders to transmit content to an audience of mass receivers or to transmit customized interactive content to individual receivers.
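The difference between separate networks and a single interconnected mesh can be framed as a reachability problem. In the minimal Python sketch below, each network is a node and each interconnection point is an edge; breadth-first search then finds the chain of networks a message would cross. The particular interconnections are a hypothetical simplification, not a map of real carrier agreements, and `interconnect` and `find_route` are illustrative names.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple


def interconnect(pairs: List[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Build a two-way adjacency map from interconnection points between networks."""
    links: Dict[str, Set[str]] = {}
    for a, b in pairs:
        links.setdefault(a, set()).add(b)
        links.setdefault(b, set()).add(a)
    return links


def find_route(links: Dict[str, Set[str]], start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search for the sequence of networks a message must cross
    to get from the sender's network to the receiver's; None if unreachable."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in sorted(links.get(path[-1], set())):
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None


if __name__ == "__main__":
    # Telecommunication era: separate networks with no interconnection points.
    print(find_route({}, "cellular", "cable TV"))
    # Hypercommunication era: a hypothetical, simplified set of interconnections.
    mesh = interconnect([("PSTN", "Internet"), ("cable TV", "Internet"), ("cellular", "PSTN")])
    print(find_route(mesh, "cellular", "cable TV"))
```

Without interconnection the search returns `None`; with it, a cellular sender reaches a cable TV receiver via the PSTN and the Internet.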

A second difference between the hypercommunication model and its predecessors is that it permits many message types to be created and carried through the mesh of networks. In Figure 2-3, message types are shown running from the upper left to the lower right. Message types may be classified by content (voice or data) or by directionality (push, pull, or interactive). Voice messages include two-way telephone calls, conference calls, automatic calling, audio webcasting, voice mail, and voice e-mail. Data messages include numbers, text, binary computer code, video, and graphics. The voice-data distinction itself is a vestige of a fading distinction between analog and digital networks: voice traditionally traveled on analog networks and data on digital networks. In reality, almost all voice messages are now transmitted digitally as data.

Push messages consist of text and binary streams, graphics, and other content sent automatically to a single receiver or (more typically) to groups of receivers. Push messages may be voice, video, facsimile, text, graphics, or a mix. They can be welcome, such as subscribed webcasts, e-mail auto-responses, news crawls, and quotation services, or annoying, such as automatic teledialing, junk faxes, and unwanted spam. Video streams include webcasting, TV and cable programming, live video auctions, video conferencing, and webcam transmissions. Pull messages are one-way and often invisible to communication users; examples include caller ID, call blocking, and Internet cookies. Interactive messages are two-way and "conversational" in nature. Examples include interactive websites, telephone conversations, and video conferencing.
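The two classification axes just described, content and directionality, can be written down as a small data structure. This Python sketch files several of the examples named above under both axes. Treating caller ID, cookies, and e-mail as data, and video conferencing as data because video is listed among data messages, is this sketch's reading of the text; the names `Content`, `Direction`, `MessageType`, and `by_direction` are illustrative.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Content(Enum):
    VOICE = "voice"
    DATA = "data"  # numbers, text, binary code, video, graphics


class Direction(Enum):
    PUSH = "push"
    PULL = "pull"
    INTERACTIVE = "interactive"


@dataclass(frozen=True)
class MessageType:
    name: str
    content: Content
    direction: Direction


EXAMPLES = [
    MessageType("telephone conversation", Content.VOICE, Direction.INTERACTIVE),
    MessageType("subscribed audio webcast", Content.VOICE, Direction.PUSH),
    MessageType("e-mail auto-response", Content.DATA, Direction.PUSH),
    MessageType("caller ID", Content.DATA, Direction.PULL),
    MessageType("Internet cookie", Content.DATA, Direction.PULL),
    MessageType("video conference", Content.DATA, Direction.INTERACTIVE),
]


def by_direction(messages: List[MessageType]) -> Dict[Direction, List[str]]:
    """Group message-type names by directionality, keeping input order."""
    grouped: Dict[Direction, List[str]] = {d: [] for d in Direction}
    for m in messages:
        grouped[m.direction].append(m.name)
    return grouped


if __name__ == "__main__":
    for direction, names in by_direction(EXAMPLES).items():
        print(f"{direction.value:>11}: {', '.join(names)}")
```

Because every message type carries both attributes, the same list can be regrouped by content with no change to the data, which mirrors the point that the two axes are independent.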

A third way the hypercommunication model differs from its predecessors is that it involves new roles for both senders and receivers, along with a host of new technological and economic characteristics. Technological details are best left until Chapter 3, but consider a few key economic differences between hypercommunications markets and telecommunications markets. Begin by noting the corners of Figure 2-3 and the box at the bottom: mass senders, mass receivers, single senders, single receivers, and Intranets. Mass senders and mass receivers are terms used to symbolize the role of the mass communication model in hypercommunication. Single senders and receivers symbolize the role of the interpersonal communication model in hypercommunication. The concept of an Intranet symbolizes communication within an organization. Hypercommunication carries both mass and interpersonal communication over the same network.

For single senders and receivers, the hypercommunication model of Figure 2-3 offers several differences from telecommunication. First, hypercommunication rests on an interconnected mesh network, so that telephone, cable TV, and ISP customers have new communication choices that do not depend on the monopolistic structure of the telecommunications carrier market. Second, being "single" (that is, small) is less of a barrier to access and entry under the hypercommunication model, owing to deregulation, interconnection, and the role of the Internet. The fixed and variable costs of interpersonal and mass communication have fallen dramatically in the integrated hypercommunications network. Distance and geographical boundaries are also less of a barrier. For the cost of a computer and peripherals, small firms and individuals can create and transmit hypercommunications messages on a global scale.

For mass senders and mass receivers, the hypercommunication model differs from telecommunication in several ways. First, there are more types of messages. Second, there are more kinds of receivers (receivers both as devices and as audience members) than under traditional telecommunication, because the network interconnects previously separate media. Third, distance is less of a barrier to communications owing to digitization. Fourth, the direction, timing, and latency of hypercommunications transmission are more variable than those of traditional telecommunications transmission. Fifth, because it is based on computer hardware, software, and the digitization of information, the hypercommunications model permits senders and receivers to store, copy, summarize, and re-use information in an unprecedented way.

2.2.4 Comparison of Telecommunication with Hypercommunication

A definition of communication by elements underscores both the immense potential of hypercommunication and the inherent differences between telecommunication and hypercommunication. According to Ploman (1982), there are over 100 legal definitions of communication in current use, so any definition will be imperfect; nevertheless, more detail is necessary here. A reasonably detailed definition of modern communication covers the nine elements listed in Table 2-1:

Communication is an (A) information processing activity or exchange process involving: (B) signal transmission of (C) message types (text, voice, video, data, content), (D) from a sender or senders through (E) space and (F) time (real time, live streaming, and delayed streaming), using a (G) transmission network to (H) a receiver or receivers or an audience (one person, several people, millions of people), (I) subject to noise and incompatible standards.
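The nine elements (A) through (I) can be captured as one record per communication event. The sketch below is a hypothetical Python rendering, not part of Table 2-1: the field names, the use of kilometres for (E), and the example telephone call between a grower and a packinghouse are all assumptions made for illustration.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class Communication:
    """One communication event, with one field per element (A)-(I)."""
    activity: str          # (A) information processing activity or exchange process
    signal: str            # (B) signal transmission, e.g. analog or digital
    message_type: str      # (C) text, voice, video, data, content
    senders: List[str]     # (D) sender or senders
    distance_km: float     # (E) space the message crosses (unit is an assumption)
    timing: str            # (F) real time, live streaming, or delayed streaming
    network: str           # (G) transmission network
    receivers: List[str]   # (H) receiver(s) or audience
    noise: List[str]       # (I) noise and incompatible standards encountered


# Hypothetical example: a real-time telephone call over the PSTN.
example_call = Communication(
    activity="order confirmation",
    signal="digital",
    message_type="voice",
    senders=["grower"],
    distance_km=350.0,
    timing="real time",
    network="PSTN",
    receivers=["packinghouse"],
    noise=["line static"],
)

if __name__ == "__main__":
    for f in fields(Communication):
        print(f"{f.name:>12}: {getattr(example_call, f.name)}")
```

Keeping every element as an explicit field makes the comparison in the following paragraphs concrete: telecommunication and hypercommunication differ in which values each field may take.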

The exchange of information among people, organizations, or devices is an information processing activity or exchange (A), requiring that information (to be defined in 2.4) be input, patterned, processed, and coded into message form. Technology (to be defined in 2.3) permits element (B), the transmission (or delivery) of hypercommunication signals using the PSTN, the Internet and other computer networks, wireless networks, or broadcasting networks.

The variety of message types (C) has already been introduced. With both telecommunication and hypercommunication, a given message may be stored, copied, and re-used, while in other cases copyright laws, technology itself, or other barriers prevent storage, copying, or re-use. Hypercommunication makes copying, changing, and retransmission of messages easier and cheaper than was possible with traditional telecommunication. Technical material in Chapters 3 and 4 will highlight the greater range of message types hypercommunication offers over telecommunication.

The sender (D) may be a person, business, organization, or government, if the audience knows the sender's identity at all. Technology and deregulation enable more senders to hypercommunicate inexpensively than were ever able to telecommunicate. Open protocols and inter-networking allow more senders to join a larger common network than is possible with telecommunication.

Pricing of telecommunication traditionally depended on whether the mass model or interpersonal model was followed. Interpersonal telecommunication (such as telephone) was often priced based on distance through space (E). Mass telecommunication was supported by advertising and subscription. Improved technologies allow messages to travel farther through space over a digital networked infrastructure at a relatively lower cost.

Unlike telecommunication, hypercommunication offers choices concerning communications time and timing (F). Delivery can be instant (real-time), delayed, or archived. A message can be re-sent until the recipient is available or the sender can be notified automatically when the message is received.
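The delayed-delivery option just described (re-send until the recipient is available, then notify the sender) can be sketched as a simple retry loop. This is a hypothetical illustration, not the behavior of any particular mail or messaging protocol: `deliver_with_retry`, the `recipient_available` callback, and `max_attempts` are names invented for the sketch, and each "attempt" stands for whatever retry interval a real system would use.

```python
from typing import Callable, List, Tuple


def deliver_with_retry(message: str,
                       recipient_available: Callable[[int], bool],
                       max_attempts: int) -> Tuple[bool, List[str]]:
    """Hold a message and re-send it on each attempt until the recipient is
    available; on success, record a delivery receipt for the sender."""
    log: List[str] = []
    for attempt in range(1, max_attempts + 1):
        if recipient_available(attempt):
            log.append(f"attempt {attempt}: delivered")
            log.append(f"receipt to sender: {message!r} delivered on attempt {attempt}")
            return True, log
        log.append(f"attempt {attempt}: recipient unavailable, message held")
    log.append("gave up: sender notified of non-delivery")
    return False, log


if __name__ == "__main__":
    # Hypothetical recipient who comes online on the third attempt.
    ok, log = deliver_with_retry("Load ships Friday", lambda n: n >= 3, max_attempts=5)
    print("\n".join(log))
```

A real-time telephone call corresponds to `max_attempts=1`; raising the limit trades immediacy for a higher chance that the message eventually arrives.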

The differences between telecommunication networks and the hypercommunication network (G) have already been introduced. Unlike the radio and television networks of telecommunication, the hypercommunication network cannot be "owned" in its entirety by any single organization, because its architecture is open and the emphasis is on interconnection. Chapters 3 and 4 will discuss many technical reasons why the hypercommunication network differs from telecommunication networks.

The audience (H) may consist of one person or many, with message delivery being push or pull, instant or delayed, simultaneous or non-simultaneous. The audience may pay for the message through direct subscription, indirectly through exposure to advertising, through carrier access costs, or not at all.

Noise (I) has always meant electrical interference, static, crosstalk, or other barriers to clearly hearing, seeing, or understanding a message. Now it may also include a host of hardware and software factors, such as network failures, congestion, and operator error at either end, that prevent messages from being delivered on time or at all. Finally, incompatibility of standards is the ultimate form of noise, because it prevents communication from occurring at all or delays it so much that the marginal cost outweighs the marginal benefit.

2.2.5 Specific Hypercommunication Services and Technologies

An operational definition of the hypercommunications sector is given in Chapter 4, where specific categories of services and technologies are presented. The telecommunication and data communication sub-markets, now only partially separate, are converging into a single hypercommunications sector. Within each of the four hypercommunications sub-markets, services must be distinguished from the wireline and wireless technologies that provide them, which is sometimes a difficult task. Hypercommunication services are provided to customers by carriers or content providers using technologies that are often invisible to those customers.

The first sub-market combines traditional telephony services (local and long-distance calling and basic signaling such as dial tone and ringing) with traditional telecommunications technologies (switching, circuits, and local loops). In the past, these services were provided by closely regulated monopolies, but the 1996 TCA (Telecommunications Act) and other legislation have encouraged deregulation and competition. Real-time voice conversations occur between sender and receiver in traditional telephony, or POTS (Plain Old Telephone Service), as traditional service is known in technical jargon. A technical overview of the PSTN that delivers POTS will be found in Chapter 3.

The second sub-market includes newer enhanced landline and wireless telecommunications services. Services in this category range from caller ID, call waiting, and PCS to elaborate CTI (Computer Telephony Integration) systems offering agribusinesses hundreds of options for connecting telephones and computers. These services are supported by enabling software and hardware transport technologies such as AIN, DS-100 switching, SS7 signaling, and electromagnetic carrier waves. PBXs (Private Branch Exchanges) and other computer and telephone hardware and software must be purchased by the agribusiness to take advantage of many enhanced services. Enhanced services were originally developed by local telephone monopolies, or ILECs (Incumbent Local Exchange Carriers), but are now also available from competing ALECs (Alternative Local Exchange Carriers) authorized under deregulation. Enhanced services are based on existing traditional services and are interconnected with traditional service networks (especially the PSTN). Enhanced services have been less regulated than their traditional counterparts, but the TCA affects them almost as profoundly. Enhanced services and technologies carry voice, signaling data, limited text, and paging messages.

The third sub-market includes private data communication and networking services such as intranets, frame relay, ATM (Asynchronous Transfer Mode), and SMDS (Switched Multimegabit Data Service). Agribusinesses using private data services must purchase computer and networking technologies such as routers, cabling, and other CPE (Customer Premises Equipment). Bandwidth and equipment are available from ILECs, but because this category is largely unregulated, services are also available from ALECs and ISPs. Furthermore, firms in the conduit and hardware businesses often partner strategically with hypercommunication carriers to enable one-stop shopping. This is the only hypercommunication sub-market made up almost entirely of business customers. Currently, most messages are digital data communications, including the exchange of text and binary files. However, technical convergence is so rapid that private networks are becoming flexible enough to carry all message types. Chapter 3 contains the technical and economic foundations of computer networking.

The fourth hypercommunication sub-market is the broad Internet sector. This area includes Internet access and bandwidth, e-commerce, and Internet QOS (Quality of Service). The Internet has increased awareness of transmission variables (such as speed, capacity, and delay) in every hypercommunications category. The Internet differs from private networking in circuit ownership, protocols, and ubiquity. Internet access is a more open architecture than private networking services because a portion of the loop runs through an ISP connection to a public backbone rather than being dedicated exclusively to private use. Internet technologies substantially overlap other hypercommunications categories, including IP telephony, live two-way Internet video, and webcasting. Message types carried by the Internet include familiar forms such as e-mail, web page content, and file transfers. Internet messages also include less familiar forms such as voicemail, Internet telephony, live video feeds, interactive chatting, and cyber shopping. Many connections to the Internet are still made through narrowband modems dialing ISPs over the PSTN. However, data transmission technologies used in private networking (frame relay, ATM, DSL, and broadband) provide greater speed and convenience for Internet access, increasing availability and lowering cost. The Internet has successfully resisted most regulation.
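The transmission variables mentioned above can be made concrete with a back-of-the-envelope comparison of narrowband dial-up access and a faster dedicated circuit. The sketch assumes an idealized link on which transfer time equals a fixed one-way delay plus the file size in bits divided by the data rate; it ignores protocol overhead and congestion, and dial-up modems rarely achieve their nominal 56 kbps. The 1 MB file size and 100 ms delay are assumed values, and 1.544 Mbps is the nominal T1 line rate.

```python
def transfer_time_s(size_bytes: int, rate_bps: float, delay_s: float = 0.0) -> float:
    """Idealized transfer time: fixed delay plus serialization time (bits / rate)."""
    return delay_s + (size_bytes * 8) / rate_bps


FILE_BYTES = 1_000_000  # assumed 1 MB file
DELAY_S = 0.1           # assumed 100 ms one-way delay

for name, rate in [("56 kbps modem", 56_000), ("1.544 Mbps T1", 1_544_000)]:
    print(f"{name}: {transfer_time_s(FILE_BYTES, rate, DELAY_S):.1f} s")
```

Under these assumptions the file takes roughly 143 seconds over the modem and about 5.3 seconds over the T1-class circuit. The fixed delay term barely matters for large files but dominates for small, interactive messages.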

All four sub-markets rely on a variety of wireline and wireless transmission technologies. Additionally, protocols and standards are needed to enable widespread interconnection while sparing hypercommunications firms and their customers the deadweight loss of searching for technical specifications. Protocols and standards (such as TCP/IP and SS7 signaling) evolve from scientific agreement, governmental edict, or industry bodies and competition. They allow the broadest possible market to form and allow market services to be defined and priced.

Three points conclude this section on economic conceptualizations of hypercommunications. First, hypercommunications categories are asymmetrically regulated. Various federal, state, and local government agencies (such as the FCC and the FPSC, the Florida Public Service Commission) have regulatory control. These regulators affect what services will be available, where, at what price, and with what kinds of taxation or subsidy. However, regulation does not apply uniformly across hypercommunications categories, thereby distorting both individual categories and the hypercommunications sector as a whole. For example, the telephone and cable TV markets (even with recent deregulation) are far more regulated than the Internet access market. Government is also involved with anti-trust enforcement and legislation covering market structure, conduct, and performance in hypercommunications, as in other industries. The recent joint action of the federal Department of Justice and numerous state attorneys general against Microsoft is a current example, while the breakup of AT&T's Bell System by the federal courts is a historical example. Chapter 5 is dedicated to policy and regulation.

Second, hypercommunication can often be quantified for economic and technical analysis. It can be characterized by traffic shape, capacity, delay, speed, and other quantitative measures. Chapter 3 covers the technical and economic foundations of networks, the source of these measures. Chapter 4 covers bandwidth, data rate, throughput, and a number of other quantitative measures, known as QOS variables, that are unique to hypercommunication.

Third, hypercommunication has a qualitative side that is important economically and is easily forgotten when hypercommunication is viewed from a purely engineering perspective. A particular message may be considered invasive or undesirable even when it is flawlessly sent and received in physical terms. A network engineer pursuing technical objectives is likely to view the concepts of message, medium, noise, attention, and receiver differently than someone with a business, economics, or communications orientation. Additionally, hypercommunication has important social implications with economic repercussions. Issues of security, fraud, privacy, and freedom of paid and non-paid speech will have to be addressed for markets to function.

Now that hypercommunication has been conceptualized as the first foundation of the information economy, it is time to conceptualize the other two: technology (2.3) and information (2.4).

2.3 Technology, the Second Foundation

Technology, the second of three foundations of the information economy, is an important catalyst for the entire information economy, not just for the hypercommunication sector. Using economic and technical literature, this section defines and examines technology generally to better understand the economic roles it plays in the information economy. Coverage of specific network technologies (and related economic precepts) will be found in Chapter 3. Specific hypercommunication technologies are discussed in Chapter 4.

Technology (from the Greek technologia) means: "1) the science or study of the practical or industrial arts, 2) the terms used in science, art, etc. 3) applied science" [Webster's New World Dictionary, college ed., 1960, p. 1496]. To a firm, technology "is a basic determinant of a company's competitive position" [Ashton and Klavans, 1997, p. 8]. Technology includes current product features and performance, "the capacity, yield, quality, and efficiency of production processes". Technology also "helps determine the unit costs of making and delivering products and the nature of capital investments", while serving as "the source of new products and processes for future growth". Finally, technology is "a valuable intelligence focus" that "can be a direct source of business revenue" [Ashton and Klavans, 1997, p. 8]. Used strategically by business, technology has "the potential to create or destroy entire markets or industries in a short time" [Ashton and Klavans, 1997, pp. 8-9]. More broadly, others argue that technology comprises all problem-solving activities, including how people and organizations learn, and the stock and flow of knowledge [Cimoli and Dosi, 1994].

The organization of section 2.3 follows the roles played in the information economy by technology as identified in Table 2-2. First, it is important to understand how sources of technological change are best identified and modeled in economics. There are four chief schools of thought or research agendas: induced innovation, evolutionary theory, path dependence, and endogenous growth [Ruttan, 1996; Homer-Dixon, 1995]. Ruttan argues that while each "agenda has contributed substantial insight into the generation and choice of new technology", the lack of co-operation and fresh results has caused the foursome to reach a "dead-end" [Ruttan, 1996, p. 2].

2.3.1 Research Agendas in the Economics of Technological Change

Differing views about the sources and economic implications of technological change account for considerable differences in economic thought among the four agendas. These differences range from minor adjustments to conventional microtheory in the induced innovation school, to the "paradigm shift" of the evolutionary agenda, to the completely "new" economics demanded by path dependence theorists. The endogenous growth agenda has brought macroeconomic thought into the microeconomics of technology. Auerswald et al. argued in 1998 that "macroeconomics is ahead of its microeconomic foundations" because of the endogenous growth agenda's macroeconomic models of technological change in production. The term mesoeconomics refers to the application to microeconomics of macroeconomic endogenous growth factors of production such as human capital, technical knowledge, and other non-conventional inputs and outputs.

The first agenda, the induced innovation literature, argues that technical change is a "process driven by change in the economic environment in which the firm finds itself" [Ruttan, 1996, p. 1]. According to Christian, "Models of induced innovation describe the relationship between" production (summarized by factor-market conditions and "the evolution of the production processes actually used") and "the demand for the finished product" [Christian, 1993, p. 1]. The relative scarcity of resources and changes in relative prices induce or guide technological change under this view.

Work in induced innovation has concentrated in several areas. One analytical strain consists of macroeconomically oriented growth-theoretic models [Kennedy, 1964, 1966; Samuelson, 1965]. These models were developed to explain why aggregate factor shares remained stable in spite of intensive substitution of capital for labor in the U.S. economy. A second, demand-pull strain holds that changes in market demand lead to increases in the supply of knowledge and technology. Micro and macro studies of demand-pull focused on how demand stimulated the location and timing of invention and innovation [Griliches, 1957, 1958; Schmookler, 1962, 1966; Lucas, 1967]. A third, supply-push orientation holds that changes in the supply of knowledge lead to shifts in the demand for technology.

Rounding out the induced innovation work is a fourth strain, factor-induced technical change, based on Hicks' idea that "a change in the relative prices of factors of production is itself a spur to innovation and inventions" [Hicks, 1932, p. 124]. Along with Ahmad's [1966] paper, the Hicksian idea that relative prices matter launched successive iterations of microeconomic models. Economic historians (Habakkuk, 1962; Uselding, 1972; David, 1975; Olmstead, 1993) and agricultural economists (Hayami and Ruttan, 1970, 1985; Thirtle and Ruttan, 1987; Olmstead and Rhode, 1998) have worked in this fourth area. Microeconomic models were used to show empirically that exogenous changes in relative prices induced innovation, moving firms away from costly inputs toward relatively cheaper ones.
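A stylized illustration of factor-induced technical change follows. It assumes a firm chooses among a fixed menu of techniques, each using capital and labor in fixed proportions per unit of output, and adopts whichever has the lowest unit cost at current factor prices. The three techniques and their input coefficients are hypothetical, and treating the menu as already known is a simplification: Hicks' argument concerns the direction of new invention, not merely choice among existing techniques.

```python
from typing import List, Tuple

# Hypothetical techniques: (name, capital per unit of output, labor per unit of output)
Technique = Tuple[str, float, float]
TECHNIQUES: List[Technique] = [
    ("labor-intensive", 1.0, 4.0),
    ("balanced", 2.0, 2.0),
    ("labor-saving", 4.0, 1.0),
]


def unit_cost(tech: Technique, rental: float, wage: float) -> float:
    """Cost of one unit of output with a fixed-proportions technique."""
    _, k, l = tech
    return rental * k + wage * l


def chosen_technique(rental: float, wage: float,
                     techniques: List[Technique] = TECHNIQUES) -> str:
    """Name of the lowest-unit-cost technique at the given factor prices."""
    return min(techniques, key=lambda t: unit_cost(t, rental, wage))[0]


# Hold the capital rental rate fixed and let labor become relatively dearer.
for wage in (0.25, 1.0, 4.0):
    print(wage, chosen_technique(rental=1.0, wage=wage))
```

With the rental rate fixed at 1.0, raising the wage from 0.25 to 1.0 to 4.0 moves the cheapest choice from the labor-intensive to the balanced to the labor-saving technique, the direction of change the factor-induced strain predicts.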

The second agenda, the evolutionary perspective, stems from the recognition by Alchian (1950) and other economists that the behavior and objectives of firms are more uncertain and complicated than received theory allows due to the inevitability of mistakes. The genesis of this approach is credited to Schumpeter's later (1943) recognition that synergistic feedback between R&D and innovation could allow certain firms to influence demand [Freeman, Clark, and Soete, 1982]. At the firm level, "the crucial element is full recognition of the trial-and-error character of the innovation process" [Nelson, Winter, Schuette, 1976, p. 91].

Evolutionary models use a "black box" behavioral theory of the firm and its larger operating environment. The "black box" represents the firm's decision objectives and rules in a search for technological modifications that begins in the neighborhood of existing technologies. Alterations in conventional microtheory (bounded rationality among heterogeneous agents), collective interaction, and continuously appearing novelty create an economic world of emergent, unstable dynamic phenomena [Dosi, 1997]. The firm itself relies on historical "routines" and "decision rules" rather than "orthodox" profit maximization or other global objective functions [Nelson and Winter, 1982, p. 14]. Cimoli and Della Giusta argue that "the standard statistical exercise of fitting some production function" could still be done under the evolutionary approach. However, "the exercise would obscure rather than illuminate the underlying links between technical change and output growth" [Cimoli and Della Giusta, 1998, p. 16].
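The evolutionary picture of routine-guided, local search can be caricatured in a few lines. The sketch below is a hypothetical illustration loosely inspired by the description above, not Nelson and Winter's model. The firm follows a satisficing routine: it searches only while its productivity is below an aspiration level, draws each candidate technique from a small neighborhood of its current one, and adopts the candidate only if it is an improvement. The aspiration level, neighborhood width, seed, and the name `local_search` are assumptions.

```python
import random
from typing import List


def local_search(periods: int, start: float = 1.0, aspiration: float = 1.5,
                 width: float = 0.05, seed: int = 0) -> List[float]:
    """Productivity path of a firm following a satisficing local-search routine."""
    rng = random.Random(seed)
    productivity = start
    path = [productivity]
    for _ in range(periods):
        if productivity < aspiration:  # routine: search only while unsatisfied
            # Candidate technique drawn from the neighborhood of the current one.
            candidate = productivity + rng.uniform(-width, width)
            if candidate > productivity:  # adopt improvements only
                productivity = candidate
        path.append(productivity)
    return path


path = local_search(1000, seed=1)
print(f"start {path[0]:.3f}, end {path[-1]:.3f}")
```

Productivity therefore never falls, and it stops changing once the aspiration level is reached, even though better techniques may exist farther away: behavior follows the routine rather than global profit maximization.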

The third agenda, the path dependence model, arises from the idea that technological change depends on network effects and sequential paths of development [David, 1975; Arthur et al., 1983]. Under this view, many candidate technologies interact with random events early in the history of a new technology. The result is a winning technology (not necessarily the optimal one) that locks in an economic path down which future technological iterations march. Under conventional thought, by contrast, well-behaved technologies have diminishing marginal returns in individual factors, constant returns to scale for all factors together, and stable equilibria.

Unlike conventional convex technologies, the networked products, organizations, and markets of path dependence theory can exhibit multiple equilibria and globally increasing returns to scale, and can disobey the "law" of diminishing marginal returns. Increasing returns "act to magnify chance events as adoptions take place, so that ex-ante knowledge of adopters' preferences and the technologies' possibilities may not suffice to predict the 'market outcome' " [Arthur, 1989, p. 116]. Path dependencies can "drive the adoption process", causing domination by "a technology that has inferior long-run potential" [Arthur, 1989, p. 117]. Contributions from the path dependent school to network economics are covered in Chapter 3.
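The lock-in mechanism can be illustrated with a simplified simulation in the spirit of Arthur's (1989) two-technology adoption model; it is a sketch under stated assumptions, not a reproduction of that paper. Agents arrive one at a time and are equally likely to be R-types, who naturally prefer technology A, or S-types, who naturally prefer B. Each technology's payoff rises with the number of previous adopters at rate r (for R-types) or s (for S-types). The default payoff values, the tie-breaking rule, the function name, and the seeds are assumptions chosen for the example.

```python
import random
from typing import List, Tuple


def arthur_adoption(n_agents: int, r: float, s: float,
                    a_r: float = 1.0, b_r: float = 0.0,
                    a_s: float = 0.0, b_s: float = 1.0,
                    seed: int = 0) -> Tuple[int, int, List[int]]:
    """Sequential adoption of technologies A and B with adoption-dependent payoffs.

    R-types prefer A when no one has adopted (a_r > b_r); S-types prefer B
    (b_s > a_s). Returns final adopter counts and the history of the lead n_A - n_B.
    """
    rng = random.Random(seed)
    n_a = n_b = 0
    lead_history: List[int] = []
    for _ in range(n_agents):
        if rng.random() < 0.5:  # an R-type arrives; ties go to its natural choice A
            choose_a = a_r + r * n_a >= b_r + r * n_b
        else:                   # an S-type arrives; ties go to its natural choice B
            choose_a = a_s + s * n_a > b_s + s * n_b
        if choose_a:
            n_a += 1
        else:
            n_b += 1
        lead_history.append(n_a - n_b)
    return n_a, n_b, lead_history


for returns in (0.0, 0.5):
    n_a, n_b, _ = arthur_adoption(1000, r=returns, s=returns, seed=42)
    print(f"r = s = {returns}: share of A = {n_a / (n_a + n_b):.2f}")
```

With r = s = 0 (no increasing returns) each agent simply follows its natural preference, so the split tracks the random mix of agent types and stays near one half. With r = s = 0.5 and the default payoffs, once either technology leads by three adopters every later agent, whatever its type, chooses the leader, so the winner is decided by the early random sequence of arrivals rather than by preferences alone.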

The fourth agenda originates in the endogenous growth macroeconomics literature as advanced by Romer (1986, 1990). Ideas about the non-convexity of technology from endogenous growth have seeped into microeconomic thought through propositions such as "knowledge is assumed to be an input in production that has increasing marginal productivity" [Romer, 1986, p. 1002]. Nobel Laureate Robert Solow demonstrated in the 1950s that knowledge was a motor of economic growth [Solow, 1956, 1957]. Building on Solow's results, a "narrow" version of endogenous growth holds that productivity growth (in output per worker or per dollar of capital) depends mainly on the progress of science and on private sector R&D expenditures. A "broad" version posits an "indirect relationship between technological improvement and economic activity" based on learning by doing or reorganization of the production process [DeLong, 1997, p. 12].
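Solow's 1957 result is usually summarized through growth accounting: the part of output growth not explained by share-weighted growth of capital and labor is attributed to technical change, the so-called Solow residual. Endogenous growth theory, by contrast, tries to explain that residual from within the model. A minimal sketch, assuming constant returns to scale and a capital share `alpha`; the growth rates in the example are hypothetical, not estimates from any study.

```python
def solow_residual(g_output: float, g_capital: float, g_labor: float,
                   alpha: float) -> float:
    """Technical-change (TFP) growth implied by growth accounting.

    g_A = g_Y - alpha * g_K - (1 - alpha) * g_L, assuming constant returns
    to scale and competitive factor shares (alpha = capital's share).
    """
    return g_output - alpha * g_capital - (1.0 - alpha) * g_labor


# Hypothetical annual growth: output 3%, capital 4%, labor 1%, capital share 0.3
print(f"{solow_residual(0.03, 0.04, 0.01, alpha=0.3):.3f}")  # 0.011
```

In this hypothetical case, 1.1 of the 3 percentage points of output growth are left unexplained by input growth and would be credited to technical change.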

Table 2-2 shows the roles that technology plays in the information economy. The coverage given each role differs, emphasizing those where hypercommunication and agribusiness are most influenced. Each of the four research agendas is used in the discussion. However, conventional economic models are used to establish each topic.

The discussion is separated into roles because technology is often used with only one of these meanings in mind. When economists, engineers, or agribusinesses discuss the role of technology in a firm or industry, they are often discussing different concepts. Robert Solow acknowledged as much about economists in 1967: "the economic theory of production usually takes for granted the 'engineering' relationships between the inputs and outputs and goes from there" [Solow, 1967, p. 26]. However, "new" economists argue that analyses of technology must consider technical and economic relationships, both within the firm and across the industry, as well as the interactions among them.

| Sec. | Role | Topics |
|---|---|---|
| 2.3.2 | Production: technical aspects | MPP and MRTS, technical factor interdependence, technical factor substitutability, returns to scale and scale neutrality, returns to scope |
| 2.3.3 | Production: economic aspects | Returns to size vs. returns to scale and size neutrality, non-homothetic technologies and modifications in production economics, economic interdependence of factors, factor bias and augmentation |
| 2.3.4 | Managerial | Internal, allocative, and dynamic efficiency, operational, tactical, and strategic flexibility, other dimensions (scope and system), measurement of technical change |
| 2.3.5 | Supply | Decreasing cost industries, technology treadmill, technology as public good (technology spillovers) |
| 2.3.6 | Demand | Demand shifts, diffusion of innovation, pro-competitive, anti-competitive, and neutral effects |
| 2.3.7 | Technology-Information linkage | Composite functional relationship between technology and information |

2.3.2 Technology and Production: Five Technical Aspects of Invention

The first major role played by technology in the information economy is the technical efficiency of a new production technology. In conventional economics, a firm's production function defines the boundary of what Varian calls the "production set", or "set of all possible combinations of inputs and outputs that are technologically feasible" [Varian, 1987, pp. 310-311]. Beattie and Taylor define a production function as:

a quantitative or mathematical description of various technical possibilities faced by a firm. The production function gives the maximum output(s) in physical terms for each level of the inputs in physical terms. [Beattie and Taylor, 1985, p. 3]

However, a discussion of the technical and engineering roles of technology in the information economy (even in the realm of agribusiness) requires a broader perspective than the production function of conventional economics for three reasons. First, new technologies (IT, networks, and biotechnologies) often do not behave along traditional economic lines. Second, the conventional view of the agribusiness firm differs from the emerging reality of the transgenic firm (Baarda, 1999) because implicit and explicit vertical and horizontal integration takes unconventional forms. Third, the economic and technical literature offers many helpful new approaches.

One of the benefits of conventional economics is that, when practiced well, it can simplify complex systems into elegant models. A variety of details (covered in this chapter and the next) such as time compression, information overload, communication, information, and network externalities have received specialized treatment in the economics literature. However, such important tangencies to mainline economic thought lack wide audiences. The results are hard for agribusinesses to use unless presented in a recognizable form such as the production function. Importantly, while some arguments concerning the usefulness or existence of the production function will be presented, the production function (when considered more broadly than it often has been) retains enormous value in analyzing technology's economic and engineering roles. Perhaps the value stems from the fact that the concept behind a production function is understood by economists, farmers, and engineers. Possibly, the production function's continuing utility comes from being a common baseline against which the roles of new technologies can be compared.

2.3.2.1 Technical and economic distinction

The distinction between technology's direct role in altering the technical side of production (covered in this section) and technology's indirect role in altering the economics of production (to be covered next in section 2.3.3) could not be more important. That distinction is often at the heart of the disparate philosophies of the engineer and the economist regarding the impact of technology. Understanding this economic-technical distinction makes economics more consistent with the information economy in three ways.

First, on a micro level, it shows that Schumpeter's early distinction between innovation and invention need not be a demarcation criterion for economics [Schumpeter, 1934, Vol. 1, p. 84]. The early Schumpeterian view was that invention (unless it directly produced innovation) was "economically irrelevant" experimentation in technical feasibility. Innovation (which did not require invention) included economic feasibility and economic efficiency in addition to mere technical efficiency. Path dependent and evolutionary economists argue that such an arbitrary distinction has led conventional economics to faulty analyses of the weightless technologies, production teams, and inter-firm alliances of the information economy.

Ironically, Schumpeter's early (1934) thought is sometimes used by conventional economists as a demarcation criterion, while his later (1943) chapter describing capitalism's "process of creative destruction" as "evolutionary" planted the seeds of the evolutionary school. It has been pointed out that the irony arises from the fact that Schumpeter himself edged away from Marshall's view of technical change as continuous, later believing that technological change often occurred in discrete, revolutionary bursts [Moss, 1982, p. 3].

The diversity of innovation (according to Schumpeter) heightens the irony that some economists would exclude discrete or bursty "inventive" technological changes from consideration in favor of mathematically well-behaved, continuous "innovation". Rensman (1996) notes that Schumpeter enumerated five kinds of technological innovations:

1) a new good or new quality of good, 2) a new method of production, 3) opening of a new market, 4) discovery of new resources or intermediates, and 5) a new organizational form. [Rensman, 1996, p. 1]

Some of these can hardly be considered continuous phenomena.

To bring invention into the domain of economics along with innovation, "new" economists argue that there are six kinds of technological inventions: the five innovations mentioned above, plus basic or theoretical research. The first five are goal-oriented activities, with clear economic incentives. Both self-employed individual inventors (the rule in Marshall's day) and organizationally employed R&D teams (part of the modern R&D function) engage in such goal-oriented invention. However, the sixth, pure theoretical research is often undertaken with an epistemic value or evolutionary organizational value in mind rather than (or in addition to) conventional objectives. The tendency to exclude "inventive" production from economics ignores the employment of inputs in the production of research and invention and ignores how Schumpeter defined technological innovation.

On this basis, Perrin (1990) suggests that there is a difference between "pre-adoption" and "post-adoption" research into the economics of technology. Homer-Dixon adds that the economic-technical distinction includes ingenuity (the generation of practical ideas) and the dissemination of productive ideas [Homer-Dixon, 1995, p. 587]. Thus, part of the rationale behind an economic-technical distinction in production aligns with Schumpeter's early invention-innovation idea and part relies on the technical and inventive stages within an overall process from idea generation to dissemination.

A second way in which understanding the economic-technical distinction improves the consistency of economics with the information economy is by highlighting the apparent fragility of two notions: first, that economic efficiency requires mathematical determinism and integrability, and second, that economic efficiency always guarantees technical efficiency. A deterministic worldview, if enforced through well-behaved primal or dual technologies, can rule out entire classes of economically relevant technologies. When economic efficiency is defined solely in neat, tautological fashion by the mathematics of static, structurally fixed markets (where identical firms maximize profits or minimize costs given well-behaved single-output production functions and perfect certainty), it implies technical efficiency. Indeed, the generality and elegance of the duality approach simplify empirical work while providing what Silberberg calls theoretical "soundness" [Silberberg, 1990, p. 285]. However, as Pope and others have recognized, "not all problems seem to be capable of being studied using duality" [Pope, 1982, p. 350].
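Duality can be made concrete with an assumed technology. For a well-behaved production function, the cost function C(w, r, q), the minimum cost of producing output q at wage w and capital rental rate r, carries the same information as the technology itself, and its derivative with respect to a factor price equals the cost-minimizing demand for that factor (Shephard's lemma). The sketch below assumes a Cobb-Douglas technology Q = A K^alpha L^beta, for which the cost function has the closed form coded in `cost`, and checks Shephard's lemma numerically with a central finite difference. The function names and parameter values are illustrative assumptions.

```python
def conditional_demands(w, r, q, A=1.0, alpha=0.3, beta=0.7):
    """Cost-minimizing capital and labor (K*, L*) for output q, assuming
    the Cobb-Douglas technology Q = A * K**alpha * L**beta."""
    s = alpha + beta
    scale = (q / A) ** (1 / s)
    k = scale * (alpha * w / (beta * r)) ** (beta / s)
    l = scale * (beta * r / (alpha * w)) ** (alpha / s)
    return k, l


def cost(w, r, q, A=1.0, alpha=0.3, beta=0.7):
    """Closed-form Cobb-Douglas cost function C(w, r, q)."""
    s = alpha + beta
    return (s * (q / A) ** (1 / s)
            * (r / alpha) ** (alpha / s) * (w / beta) ** (beta / s))


w, r, q = 2.0, 1.0, 10.0  # assumed wage, rental rate, and output level
h = 1e-6                  # step for the numerical derivative
k_star, l_star = conditional_demands(w, r, q)

# Shephard's lemma: dC/dw should equal the conditional labor demand L*.
dC_dw = (cost(w + h, r, q) - cost(w - h, r, q)) / (2 * h)
print(f"dC/dw = {dC_dw:.4f}, L* = {l_star:.4f}")

# Duality: the closed-form cost equals the cost of the cost-minimizing inputs.
print(f"C = {cost(w, r, q):.4f}, wL* + rK* = {w * l_star + r * k_star:.4f}")
```

The check works only because the assumed technology is smooth and convex; the non-conventional technologies discussed in this section are precisely the cases where such a clean primal-dual correspondence may not be available.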

Multi-product production, non-conventional inputs, network effects, and ever-shorter decision periods (all a result of new technologies) can make economics appear inconsistent with the times. The evolutionary and path dependent agendas question whether standard production and cost functions are useful in analyzing many kinds of technological change to begin with. They contend that economic concepts of the firm and production need to be broad enough mathematically and technically to acknowledge the changes that IT, hypercommunications, and biotechnology bring. This is underscored by Williamson: "The firm as production function needs to make way for the firm as governance structure if the ramifications of internal organization are to be accurately assessed" [Williamson, 1981, p. 1539; quoted by Cotterill, 1987, p. 107].

However, because of advances in computer technology, conventional mathematical constructs and their successors may become even more useful in understanding core technical-economic distinctions. Even if duality and conventional mathematical economics are passé, as some "new" economists argue [Kelly, 1994], a theoretical cocoon of equal or greater elegance would seem necessary to replace them. Until this occurs, modifications in the general idea of a production function (and of optimizing profit, cost, or something else) may give economics a view of the economic-technical distinction that better reflects non-conventional technologies and network effects. Indeed, along with new views of the firms and supply chains that constitute markets, mathematical extensions of conventional economic models may be more important than ever. In 1963, Morgenstern foresaw the importance to economic problem solving of the technologies of the "new" economy (computers and combinatorial economic software). He noted that technology itself would "continuously generate new problems of a mathematical nature", driving an unlimited penetration of mathematics into economics. This led him to deny that there could be "any 'limits' to the use of mathematics" in economics [Morgenstern, 1963, p. 29].

A third way that understanding the economic-technical distinction makes economics more consistent with the information economy is by revealing a broader view of efficiency than that of the economist or engineer alone. According to Kevin Kelly, Peter Drucker has argued that the productivity problem for each worker in the industrial economy was how to do his job right (most efficiently). Kelly argues that in the new economy, "productivity is the wrong thing to care about" because the "task for each worker is not 'how to do his job right', but 'what is the right job to do?' " [Kelly, 1997, p. 14]. Evolutionary and path dependent theorists would argue that standard economic theory (from which production functions are taken) is so simplistic that "it does violence to reality" [Arthur, 1990, p. 92]. In his twelfth rule for the new economy, the law of inefficiencies, Kelly writes: "Wasting time and being inefficient are the way to discovery" [Kelly, 1997, p. 14].

Yet new ways of looking at technology within economics are (as Arthur notes when discussing increasing returns) "not intended to destroy the standard theory", but to "complement it" [Arthur, 1996, p. 3]. The decision rules and routines of the evolutionary theorists "are close conceptual relatives of production 'techniques'" [Nelson and Winter, 1982, p. 14]. In an e-business setting such as amazon.com, the routines that Nelson and Winter say "replace the production function" presumably include such technological efficiencies as high-tech commerce servers and software [Nelson and Winter, 1982, p. 14]. Amazon.com may be a highly efficient firm in a technical sense, but as of January 2000, it has yet to make a profit. The question of whether a firm or industry can be economically but not technically efficient requires a modified view of production that considers the technical-economic distinction with a broad view of efficiency.

2.3.2.2 Conventional production: simple technology, MPP, MRTS

The simplicity of the conventional production function makes first-order comparisons of technical and economic efficiency easy to understand. In the conventional perspective, improved technology lets a producer produce more output with the same amount of inputs, making it more technically efficient. Whether firms that adopt progressive technologies are also economically efficient is another matter. In applied work, each kind of efficiency can be treated as a matter of degree, with "revealed efficiency" used to compare "overall" efficiencies among firms [Paris, 1991, pp. 287-306].

The production function "identifies the maximum quantity of a commodity that can be produced per time period by each specific combination of inputs" [Browning and Browning, 1989, p. 168, italics mine]. The concept of decision period or length of run is central to the analysis. Using Hicks' two inputs, capital (K) and labor (L), Persky names four decision periods (VSR, SR, LR, VLR) that are important to engineers, economists, and agribusinesses alike:

In the very short run (or market period), the quantities of both inputs are fixed. In other words, K and L are both parameters.

In the short run, the quantity of one input is fixed, and the quantity of the other input can be varied. In other words, either K is a parameter or L is a parameter. We usually take K as the parameter.

In the long run, the quantities of both inputs can be varied.

In the very long run, the quantities of both inputs can be varied, and the production function can change. This case represents technological improvement. [Persky, 1983, p. 146, emphasis in original]

The evolutionary and path-dependent schools would dispute Persky's assessment that the VLR is the only period in which technological change can occur.

Additionally, in a weightless information and knowledge economy, no VSR exists in some cases because the production plan varies by the minute. Later in the chapter (2.5), the concept of time compression due to technological progress in hypercommunication and information processing will be discussed. As will be emphasized then, IT is responsible for greater flexibility in varying inputs as well as for increasingly short decision periods. Therefore, the VSR and the VLR are closer together in time than they once were. Not incidentally, inexpensive high-speed hypercommunications allow the instantaneous transmission of large amounts of information to anywhere reached by a high-speed infrastructure, permitting (but not guaranteeing) faster planning and decision making.

It might appear that production agriculture cannot benefit from technology-driven time compression the way other industries do, since crop seasons and animal cycles create relatively inflexible decision periods. However, intellectual property, communication, and information are weightless inputs that make up an increasing share of the total factor mix in agriculture. Indeed, biotechnologies and high-tech crop monitoring allow even production agriculture to achieve an unprecedented degree of control and flexibility over factors. Furthermore, technologies are appearing that can time-compress agricultural production processes as well. One example is the biotech "super pig," which grows forty percent faster and to larger weights than before while using less feed and other inputs and lowering piglet death rates [Hardin, 1999; Associated Press, 12/7/99].

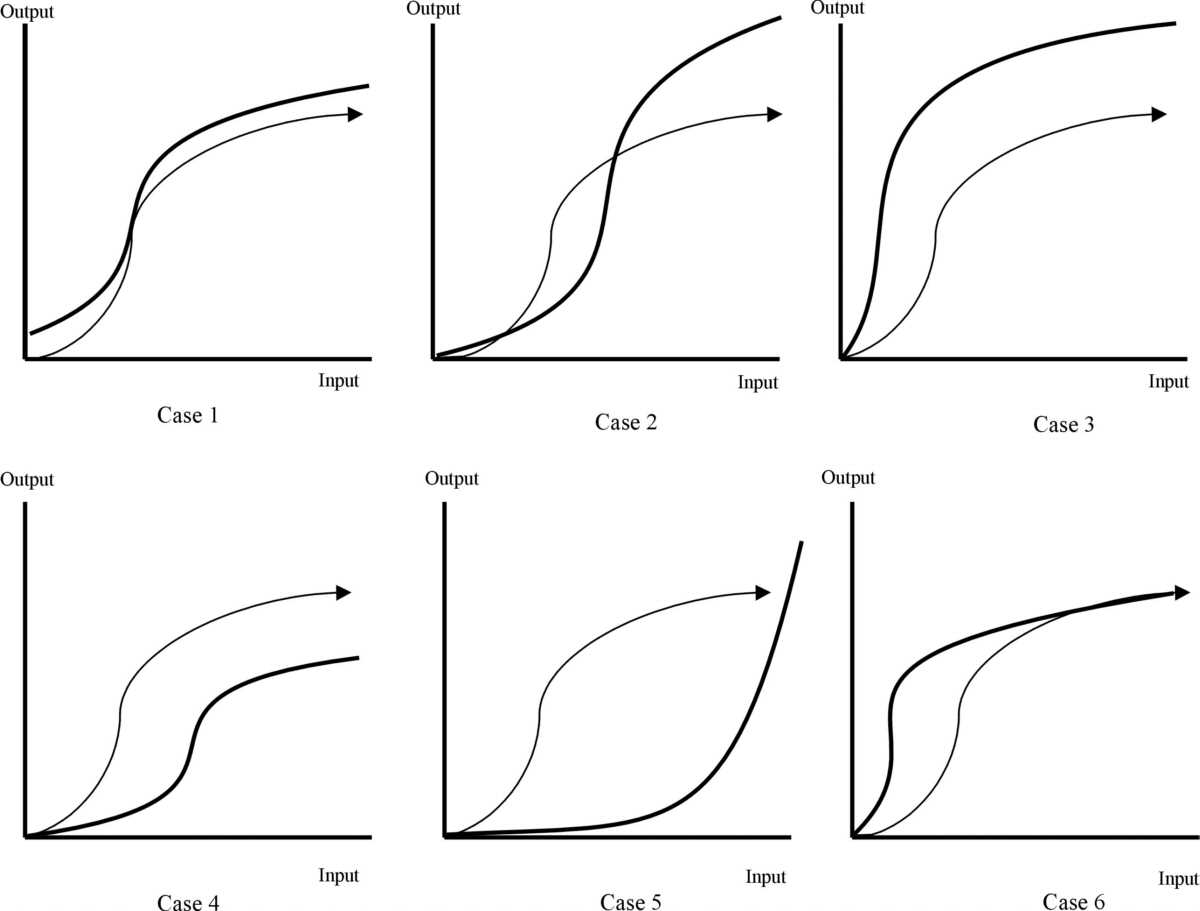

Consider how the conventional production function could vary due to a new technology. Graphically, the technical effect of a technological change on production is easiest to show through the production function in the single-output, single-input case. Figure 2-4 shows six cases of how a new technology can alter the technical side of production. Depicted is a generalized monoperiodic production function. Assume that the firm has perfect certainty as it compares the old and new production processes before the next production period.

The baseline for each case is a thin line (representing an identical base production function) ending with an arrow. In each case, a new technology's influence on production is shown by a bold line. Without more information than the production function alone, the probable economic behavior of a firm cannot be established. Assume that the only change between cases is technology as embodied in the production function.

Case one shows a new production function that is a parallel shift of production under the old technology. The MPPs (Marginal Physical Products) are identical, and the only difference is a constant additional quantity of output at all input levels resulting from the new technology. By converting to the new technology, the firm produces more units of output for the same amount of input, whether it uses a small or a large amount of input. The fixed additional quantity of output produced by the new technology is therefore scale neutral (it does not depend on how much input is used).

Case two depicts a new technology that produces less output for a given amount of input up to a point; beyond that point, more can be produced with the new technology than with the old one. Case two favors larger scales of operation. In case three, the new technology is always and everywhere able to produce more output (given the same amount of input) than the old technology. Case three is scale neutral, favoring adoption of the new technology at any scale of operation.

In case four, the new technology always and everywhere produces less output than the old technology given the same amount of input. Economically, it is hard to imagine a firm replacing an old technology with a new one that always produced less. The fifth case depicts a new technology that is better than the old technology only at a relatively large operational scale: the new technology underperforms the old one until the MPP of the old is approximately zero, at which point the output of the new technology races above the old one. Case six shows a new technology whose advantage holds only at lower input levels. Once the MPP of the old technology approaches zero, the two production functions are virtually identical. In this case, the new technology would tend to favor a smaller scale than the old one.
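The cases can also be compared numerically rather than graphically. The sketch below is illustrative only. The base function `f_old` (output peaks at an input level of 10, where its MPP of 20 - 2x is zero) and the two new technologies, a case-one parallel shift and a case-two-style function, are hypothetical choices, not the functions drawn in Figure 2-4. For each new technology, the sketch reports the share of input levels at which it out-produces the old technology and the input levels at which the two cross.

```python
def f_old(x):
    """Hypothetical base technology: output peaks at x = 10, where MPP = 20 - 2x is zero."""
    return 20 * x - x ** 2


def case_one(x):
    """Hypothetical case one: parallel shift, identical MPP, 5 extra units at every input level."""
    return f_old(x) + 5


def case_two(x):
    """Hypothetical case two: below the old technology at small inputs, above it at larger ones."""
    return 1.5 * f_old(x) - 30


def compare(f_new, f_base, xs):
    """Return (share of input levels where f_new out-produces f_base, crossover input levels)."""
    diffs = [f_new(x) - f_base(x) for x in xs]
    share = sum(d > 0 for d in diffs) / len(diffs)
    # A crossover lies between consecutive grid points where the difference changes sign;
    # the upper grid point of each such pair is reported.
    crossings = [xs[i + 1] for i in range(len(xs) - 1)
                 if (diffs[i] < 0) != (diffs[i + 1] < 0)]
    return share, crossings


xs = [i / 100 for i in range(1001)]   # input levels 0.00, 0.01, ..., 10.00
print(compare(case_one, f_old, xs))   # (1.0, [])
share, cross = compare(case_two, f_old, xs)
print(round(cross[0], 2))             # 3.68
```

The parallel shift beats the base technology at every input level with no crossover. The case-two function overtakes the old technology between grid points 3.67 and 3.68 (the exact root is $10 - \sqrt{40} \approx 3.675$), which is the "favors larger scales" pattern described for case two.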

A slightly more general mathematical treatment brings in two inputs, so that production with the new technology may be better compared to the base-case production function. Now, four core concepts of technical production are considered: technical substitutability among factors (2.3.2.3), technical factor interdependence and separability (2.3.2.4), average and marginal returns to scale (2.3.2.5), and returns to scope (2.3.2.6).

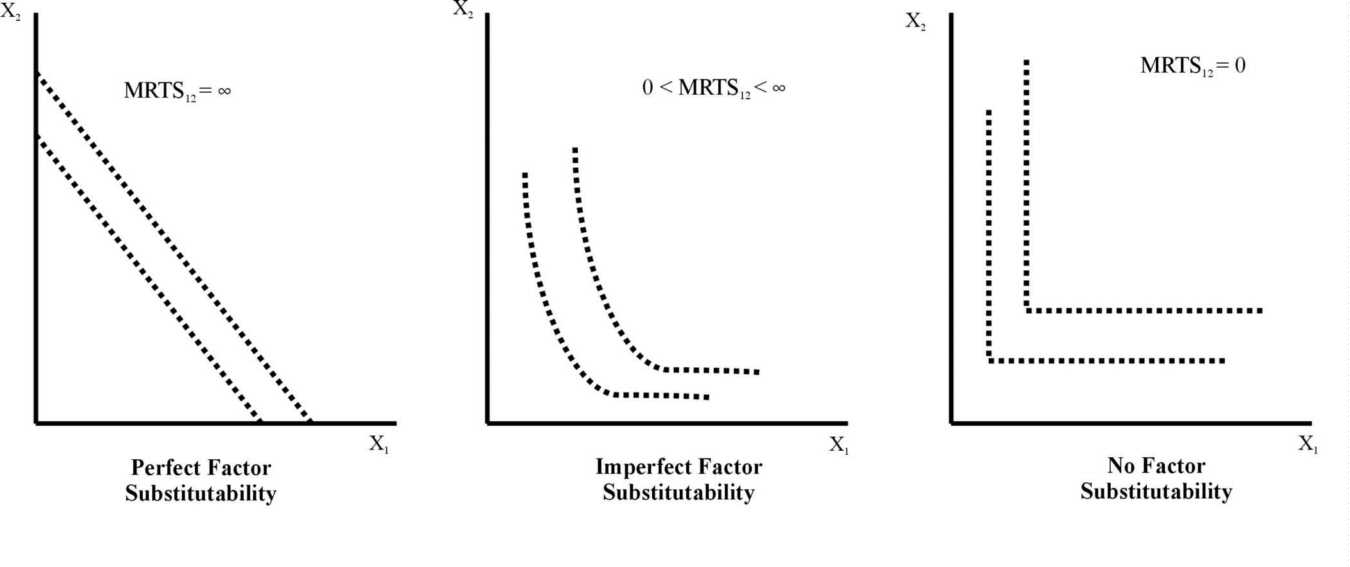

2.3.2.3 Technical factor substitutability

The effect of a technical change on factor substitutability is an important technical aspect of production. However, it can be measured in many ways. Beattie and Taylor admit that factor substitutability can be a misnomer. "Economists' use of the term, factor substitutability, to refer to isoquant patterns is a bit unfortunate--it only means that isoquants are convex to the origin" [Beattie and Taylor, 1985, p. 29]. Convexity can result from chemical interaction or other synergies (such as network externalities) between factors so that it is not necessarily true that one factor actually "substitutes" for another.

Technical factor substitutability hinges on the relationship

among inputs needed to produce a particular output level. Consider a two

input production function ![]() ,

let x1 and x2 be physical units of a factor of production.

The MPP of x1 is simply the first partial derivative,

,

let x1 and x2 be physical units of a factor of production.

The MPP of x1 is simply the first partial derivative, ![]() , likewise the MPP of x2 is

, likewise the MPP of x2 is ![]() .

The MRTS of x1 for x2 (MRTS12) is derived

from the total differential of the production function,

.

The MRTS of x1 for x2 (MRTS12) is derived

from the total differential of the production function, ![]() . If dy = 0 as it must along an isoquant, then

. If dy = 0 as it must along an isoquant, then ![]() .

The slope of any isoquant is given by

.

The slope of any isoquant is given by ![]() .

The MRTS12 is the absolute value of the ratio of MPPs,

.

The MRTS12 is the absolute value of the ratio of MPPs, ![]() .

The MRTS12 tells how many more units of x1 would

be needed to hold output constant, while taking away one unit of x2.

The MRTS and isoquant curvature are related to the technical substitutability

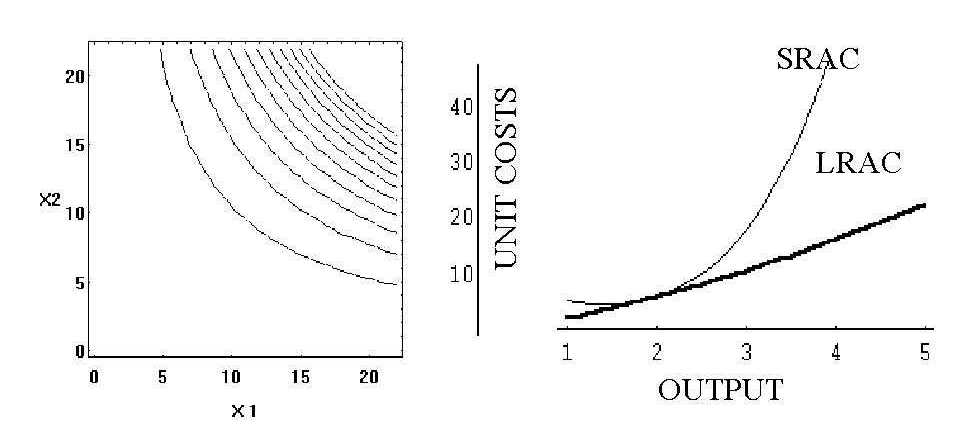

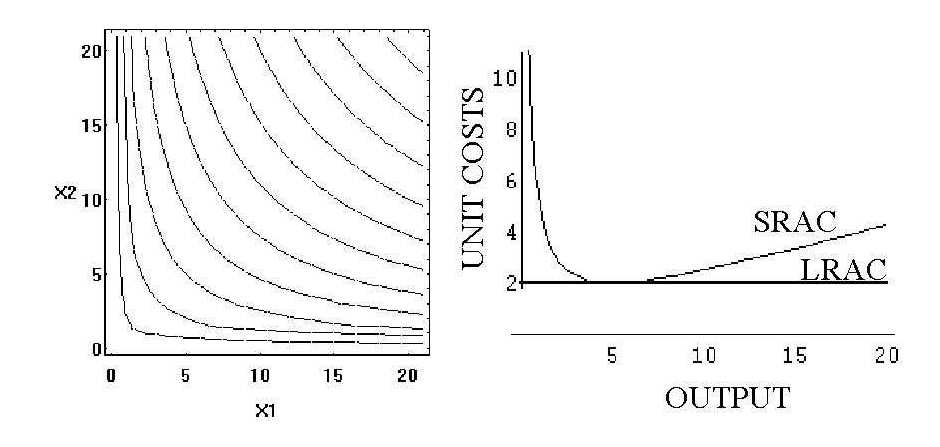

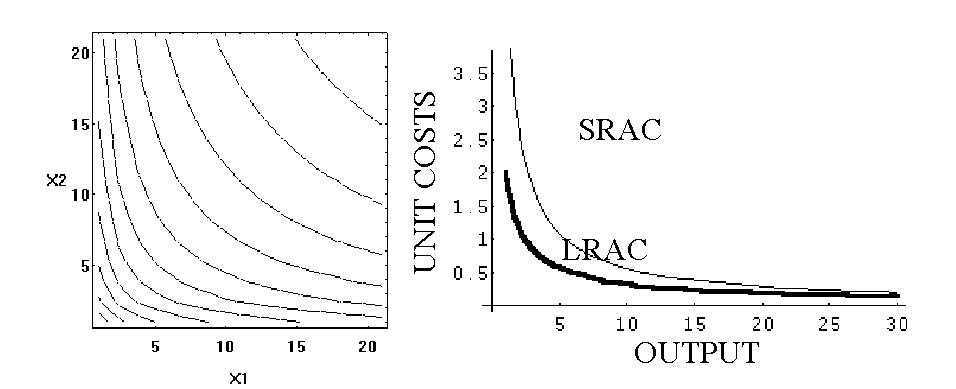

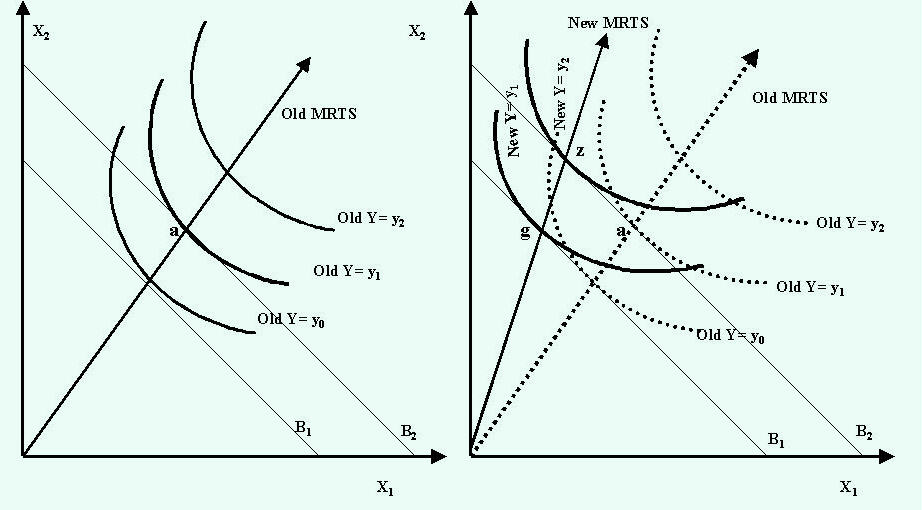

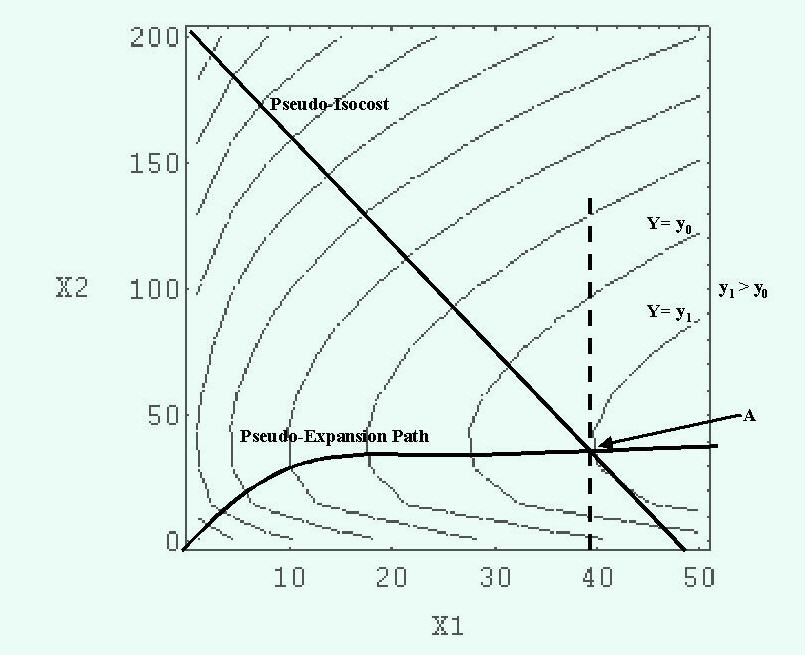

of factors as Figure 2-5 illustrates.

.

The MRTS12 tells how many more units of x1 would

be needed to hold output constant, while taking away one unit of x2.

The MRTS and isoquant curvature are related to the technical substitutability

of factors as Figure 2-5 illustrates.
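To make the MRTS12 interpretation concrete, here is a minimal sketch assuming a hypothetical Cobb-Douglas function $y = x_1^{0.5} x_2^{0.5}$ (the exponents `A` and `B` are illustrative, not from the text). It computes $\mathrm{MRTS}_{12} = f_2 / f_1$ from the analytic MPPs, then checks it directly: take a small amount of $x_2$ away, solve the isoquant for the $x_1$ needed to keep output unchanged, and compare the extra $x_1$ per unit of $x_2$ removed.

```python
A, B = 0.5, 0.5  # hypothetical output elasticities of x1 and x2


def f(x1, x2):
    """Hypothetical Cobb-Douglas production function y = x1**A * x2**B."""
    return x1 ** A * x2 ** B


def mpp1(x1, x2):
    """MPP of x1: f_1 = dy/dx1."""
    return A * x1 ** (A - 1) * x2 ** B


def mpp2(x1, x2):
    """MPP of x2: f_2 = dy/dx2."""
    return B * x1 ** A * x2 ** (B - 1)


def mrts12(x1, x2):
    """MRTS12 = |dx1/dx2| along an isoquant = f_2 / f_1."""
    return mpp2(x1, x2) / mpp1(x1, x2)


def x1_on_isoquant(y0, x2):
    """Solve y0 = x1**A * x2**B for x1, i.e. stay on the isoquant for output y0."""
    return (y0 / x2 ** B) ** (1 / A)


x1, x2 = 4.0, 9.0
y0 = f(x1, x2)                                  # 6.0
dx2 = 1e-3                                      # take away a small amount of x2 ...
extra_x1 = x1_on_isoquant(y0, x2 - dx2) - x1    # ... and add back just enough x1
print(round(mrts12(x1, x2), 4))                 # 0.4444
print(round(extra_x1 / dx2, 4))                 # 0.4445 (finite-step approximation)
```

The finite-step ratio approaches the analytic MRTS12 of 4/9 as the amount of $x_2$ removed shrinks; for this particular function the MRTS12 also equals $x_1 / x_2$, since the two exponents are equal.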

Technological change can be brought into the production function more explicitly. In a conventional approach, a three-input production function, $y = f(x_1, x_2, T)$, could use $T$ as time (a proxy for technological change). A positive rate of technical change, given by $\partial y / \partial T > 0$, would assume that all changes in output over time that are not the result of varying $x_1$ or $x_2$ are due to progressive technical change. However, as Solow points out, ". . . there is no reason why T should not change by discrete jumps, or from place to place, or from entrepreneur to entrepreneur" [Solow, 1967, p. 28]. This specification implicitly treats technological change as an exogenous residual.
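The "exogenous residual" idea can be illustrated with a growth-accounting sketch. It assumes a hypothetical technology $y = e^{\lambda T} x_1^{\alpha} x_2^{1-\alpha}$ with made-up values for $\lambda$ (`LAM`) and $\alpha$ (`ALPHA`) and made-up input data; in practice $\alpha$ would have to be estimated or proxied (for example by a factor share). Whatever part of the log change in output is not explained by the weighted log changes in $x_1$ and $x_2$ is attributed to $T$.

```python
import math

LAM, ALPHA = 0.02, 0.3  # hypothetical rate of technical change and output elasticity of x1


def output(x1, x2, t):
    """Hypothetical technology with exogenous drift: y = exp(LAM*t) * x1**ALPHA * x2**(1-ALPHA)."""
    return math.exp(LAM * t) * x1 ** ALPHA * x2 ** (1 - ALPHA)


def residual(y0, y1, x1_0, x1_1, x2_0, x2_1):
    """Output growth (log difference) not explained by growth in x1 and x2."""
    g_y = math.log(y1 / y0)
    g_x1 = math.log(x1_1 / x1_0)
    g_x2 = math.log(x2_1 / x2_0)
    return g_y - ALPHA * g_x1 - (1 - ALPHA) * g_x2


# Hypothetical data: both inputs grow between period 0 and period 1.
y0 = output(100.0, 50.0, t=0)
y1 = output(110.0, 52.0, t=1)
print(round(residual(y0, y1, 100.0, 110.0, 50.0, 52.0), 6))  # 0.02
```

Because the data here are generated by a smooth, known technology, the residual recovers `LAM` exactly. If $T$ instead changed "by discrete jumps, or from place to place," as Solow cautions, the residual would simply absorb those changes without explaining them.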

Alternatively, a production function could include inputs of a particular technology as explicit endogenous factors rather than as an exogenous technological change residual. The path dependent, evolutionary, and endogenous growth schools use such approaches to model non-conventional technological inputs such as information, knowledge, or communication in a production process. The idea is that technology can change along with other inputs so that the VLR-LR distinction is different from the conventional case. The approach is best applied to multi-output cases.

T could be physical units (or a vector of parameters) representing a technology. Then, a particular technology's effect on other inputs could be evaluated individually and jointly. However, the generality of such an alternative view of the production function can violate conventional assumptions such as perfect certainty, continuous differentiability, monotonicity, and concavity. Relaxing these assumptions can make empirical work intractable.

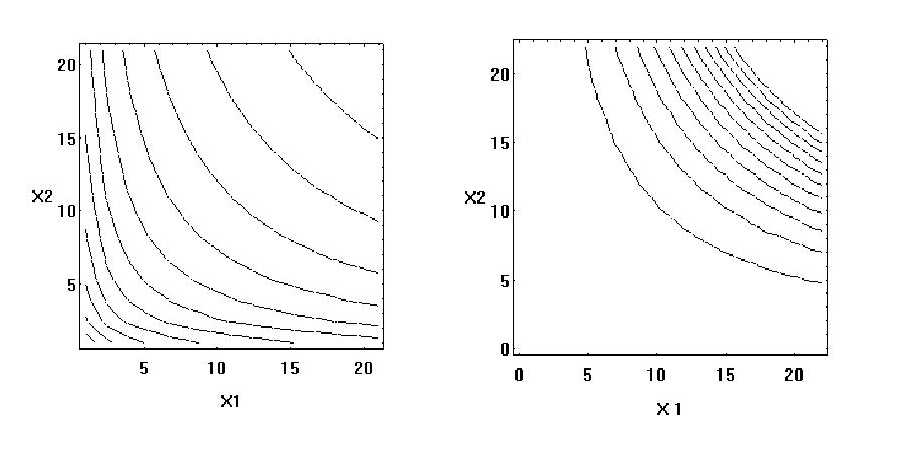

No graph can show every way that technological change can alter technical factor substitutability, because, as Chambers notes, "there is no correct answer or measure of the degree of substitutability between any two inputs" [Chambers, 1984, Ch.1, p. 18]. In an n-input case, the problem concerns the percentage change in input xi that would result from a one-percent change in the amount of input xj.

Several unit-free measures of the degree of substitutability among inputs exist, including the direct elasticity of substitution, the Allen partial elasticity of substitution, the Samuelson total elasticity of substitution, and the Morishima elasticity of substitution. The direct elasticity is a short-run measure of substitutability between input i and input j, holding all other inputs fixed, while the Samuelson elasticity measures the substitutability of input i against all other inputs. The Allen elasticity of substitution is a measure of relative input change along an isoquant, since it measures the elasticity of the ratio (x1/x2) with respect to the MRTS12 with output held constant. These diverse elasticities of substitution complicate comparisons of how a technological change affects input substitutability.
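The two-input measure described last, the elasticity of the ratio x1/x2 with respect to MRTS12 at constant output, can be computed numerically. This sketch assumes two hypothetical, linearly homogeneous technologies: a Cobb-Douglas function (known elasticity 1) and a CES function with parameter $\rho = 0.5$ (known elasticity $1/(1-\rho) = 2$). Linear homogeneity is an assumption used only to place points on the same isoquant easily; the MPPs are taken by central finite differences.

```python
import math


def ces(x1, x2, alpha=0.4, rho=0.5):
    """Hypothetical CES technology y = (alpha*x1**rho + (1-alpha)*x2**rho)**(1/rho), rho != 0."""
    return (alpha * x1 ** rho + (1 - alpha) * x2 ** rho) ** (1 / rho)


def cobb_douglas(x1, x2, a=0.3):
    """Hypothetical Cobb-Douglas technology y = x1**a * x2**(1-a)."""
    return x1 ** a * x2 ** (1 - a)


def mrts12(f, x1, x2, h=1e-6):
    """MRTS12 = f_2 / f_1, with both MPPs approximated by central finite differences."""
    f1 = (f(x1 + h, x2) - f(x1 - h, x2)) / (2 * h)
    f2 = (f(x1, x2 + h) - f(x1, x2 - h)) / (2 * h)
    return f2 / f1


def isoquant_point(f, ratio, y0):
    """(x1, x2) with x1/x2 = ratio and output y0; relies on f being linearly homogeneous."""
    x2 = y0 / f(ratio, 1.0)
    return ratio * x2, x2


def elasticity_of_substitution(f, ratio_a, ratio_b, y0=10.0):
    """Arc elasticity of x1/x2 with respect to MRTS12, output held at y0."""
    xa = isoquant_point(f, ratio_a, y0)
    xb = isoquant_point(f, ratio_b, y0)
    d_ln_ratio = math.log(ratio_b / ratio_a)
    d_ln_mrts = math.log(mrts12(f, *xb) / mrts12(f, *xa))
    return d_ln_ratio / d_ln_mrts


print(round(elasticity_of_substitution(cobb_douglas, 0.5, 2.0), 3))  # 1.0
print(round(elasticity_of_substitution(ces, 0.5, 2.0), 3))           # 2.0
```

Both technologies give a constant elasticity along the isoquant, so the arc and point measures coincide here. For technologies without that property, the result would depend on the two input ratios chosen, which is one more reason different elasticity measures can disagree.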

2.3.2.4 Technical factor interdependence, separability

The next technical concept of production, technical factor interdependence, is another way technological change can influence production. Economists often do not differentiate between technical factor substitutability (where output is held constant) and technical factor interdependence (where output is not held constant). Beattie and Taylor state that "Two factors are technically independent if the MPP of one is not altered as the quantity of the other is changed" [Beattie and Taylor, 1985, pp. 32-33]. In the two-input case, there are three technical factor interdependencies given the production function $y = f(x_1, x_2)$. Each depends on the continuous second cross partial derivative, $f_{12} = \dfrac{\partial^2 y}{\partial x_1 \, \partial x_2} = \dfrac{\partial^2 y}{\partial x_2 \, \partial x_1} = f_{21}$, as follows:

Factors are technically independent when $f_{12} = 0$, so that the MPP of one factor is not affected by changes in the physical amount of the other. The technically complementary case occurs if the cross partial $f_{12} > 0$: increasing $x_1$ raises the MPP of $x_2$. The case of technically competitive inputs occurs when $f_{12} < 0$: increasing $x_1$ reduces the MPP of $x_2$.
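The three cases can be checked numerically from the sign of the cross partial. This sketch approximates $f_{12}$ with a central finite difference in both inputs and classifies three hypothetical production functions (an additively separable one, a Cobb-Douglas one, and one with a negative interaction term), all evaluated at $x_1 = 4$, $x_2 = 9$. The step size `h` and tolerance `tol` are illustrative choices, not values from the text.

```python
def cross_partial(f, x1, x2, h=1e-3):
    """Approximate f_12 by a central finite difference in both inputs."""
    return (f(x1 + h, x2 + h) - f(x1 + h, x2 - h)
            - f(x1 - h, x2 + h) + f(x1 - h, x2 - h)) / (4 * h * h)


def interdependence(f, x1, x2, tol=1e-6):
    """Classify the two inputs at (x1, x2) by the sign of f_12."""
    f12 = cross_partial(f, x1, x2)
    if abs(f12) < tol:
        return "technically independent"
    return "technically complementary" if f12 > 0 else "technically competitive"


def additive(x1, x2):
    """Hypothetical separable technology: f_12 = 0."""
    return 3 * x1 ** 0.5 + 2 * x2 ** 0.5


def cobb_douglas(x1, x2):
    """Hypothetical Cobb-Douglas technology: f_12 = 0.25 / sqrt(x1 * x2) > 0."""
    return x1 ** 0.5 * x2 ** 0.5


def rivalrous(x1, x2):
    """Hypothetical technology with a negative interaction term: f_12 = -1."""
    return 10 * x1 + 10 * x2 - x1 * x2


for name, func in [("additive", additive), ("cobb_douglas", cobb_douglas),
                   ("rivalrous", rivalrous)]:
    print(name, interdependence(func, 4.0, 9.0))
# additive technically independent
# cobb_douglas technically complementary
# rivalrous technically competitive
```

Note that the classification is local: for a general production function the sign of $f_{12}$ can change with the input levels, so two factors may be complementary in one region and competitive in another.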

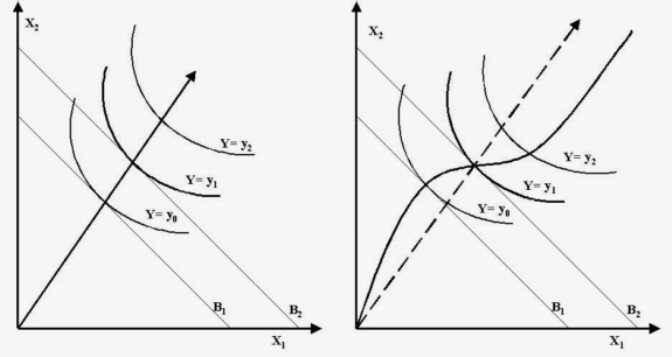

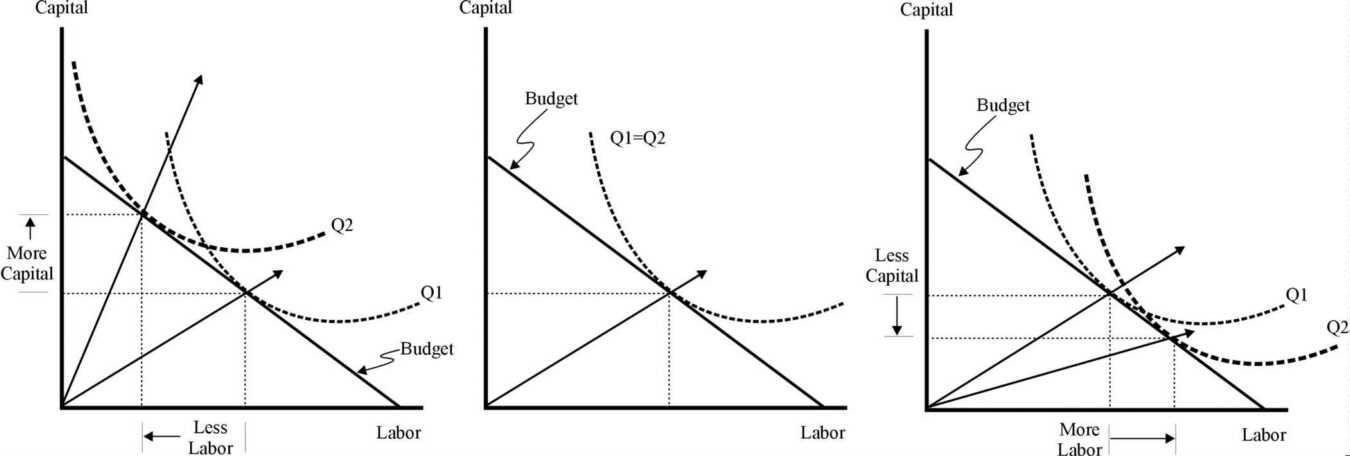

The technological change could be biased toward one factor or be Hicks-neutral. In the (Hicksian) factor-neutral case, a new technology leads to increased output without changing factor proportions. If the MRTS12 is independent of T, then technical change is factor-neutral. Isoquants retain the same curvature and position, but represent larger values over time because both factors in the same combination are able to produce more output. Hicksian factor-neutral technical change requires that, given a