Chapter 4

Hypercommunications Convergence:

Services and Technologies

Historically, the telephone network carried public voice communications, private corporate networks delivered data, and broadcast networks delivered video. Each of these services was coupled with a specific form of infrastructure, such as copper pairs for telephone or coaxial for cable TV. Digitalization of voice, data, and video information . . . has allowed traditional boundaries to be crossed relative to services being provided. In other words, a single facility carries voice, data, and video. . . . With the digitization of information, the network needed for transport requires only digital transmission capabilities. Legacy networks designed for specific technologies are in the process of transformation to allow provision of all types of services. [Weinhaus, Stevens, Makeeff et al., 1998, p. 26]

The objective of Chapter 4 is to show what hypercommunication technologies and services are. However, distinguishing hypercommunication services from hypercommunication technologies can be a difficult task. As Weinhaus, Stevens, Makeeff et al. noted above, separate legacy (existing) communications networks are evolving into unified digital networks capable of transmitting all kinds of hypercommunication services, a process known as convergence. Convergence complicates the task of differentiating hypercommunication services from the network and delivery technologies that provide them.

Charles Sirois explains three trends that are altering traditional distinctions between services and technologies:

First, there is a deregulation trend. . . . The second trend is the feasibility of splitting telecommunications infrastructure from services. Where this occurs, it means that for every dollar spent on telecommunications services, the infrastructure portion will be smaller. In the past, the infrastructure was the service when you heard the dial tone after picking up the telephone. What . . . telcos provided was mainly a pair of copper wires and a number of switches. The infrastructure was the service. The telcos were only in the carriage business. They could not be in the content or message business.

Today it is possible to be a telecommunications service provider without owning one inch of fibre optic cable or even a switch. The necessary hardware can be leased from facilities-based operators like the telcos. So more and more you can have a split between infrastructure and services.

These two trends lead to a third one: fragmentation of the offerings to the end-user. We are not talking here of oligopoly. Rather, in the future there will be hundreds or even thousands of providers of telecommunications services (i.e. content). It will be a world of specialists, focusing on hundreds of niche markets. This is the world of narrow-casting . . . and the many service providers will have a choice of methods of transmission to reach their niches. [Sirois, 1996, pp. 198-199]

These three trends (deregulation, the service-technology distinction, and fragmentation) will become increasingly important to how agribusinesses buy hypercommunications. In this Chapter, the service-technology distinction and the fragmentation of offerings to business customers are covered. Regulatory issues (especially important to agriculture and rural areas) will be considered in Chapter 5.

Agribusinesses buy hypercommunication services from hypercommunication suppliers. However, if Sirois is right, in spite of convergence, "fragmented" services and prices (which vary by network technologies and content) will confuse agribusinesses. Furthermore, carriers use many different hypercommunication technologies to reach the locations agribusinesses need for communication. These technologies include transmission technologies, infrastructure technologies, interconnection facilitating technologies, and voice-data consolidation technologies. Each technology, in turn, may be composed of hardware, software, conduit, and protocols.

Specific technologies may seem unimportant to agribusiness managers when they need no technical knowledge of the underlying network in order to communicate. However, the strengths and weaknesses of particular technologies become important if there are frequent interruptions (downtime), if costs skyrocket, or if rural areas will not be served. Additionally, agribusinesses can choose technologies tailor-made to specific strategies. Finally, agribusinesses also rely on capital purchases of hypercommunication CPE (Customer Premises Equipment). CPE technologies include computers, faxes, telephone systems, and other devices. CPE must be compatible with the existing equipment and software of both the agribusiness and its hypercommunication vendors. Additionally, an agribusiness may need hypercommunication services such as programming, web design, Internet promotion, repair, technical support, or network planning.

Basic knowledge of what hypercommunication services and technologies are could help an agribusiness manager simultaneously save money, improve communications with existing customers, plan future needs, and reach new customers. This Chapter provides descriptions of the major hypercommunication services and technologies that are available (or shortly will be) to Florida agribusinesses.

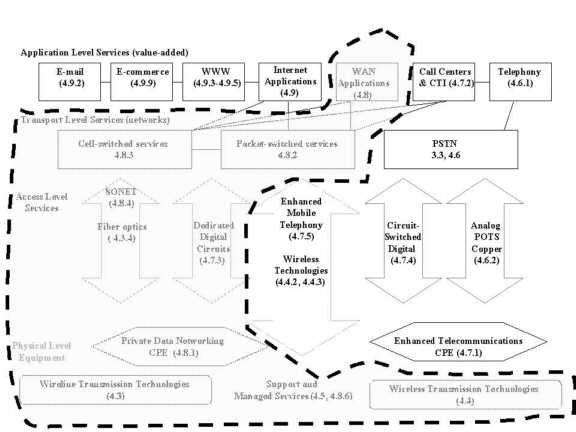

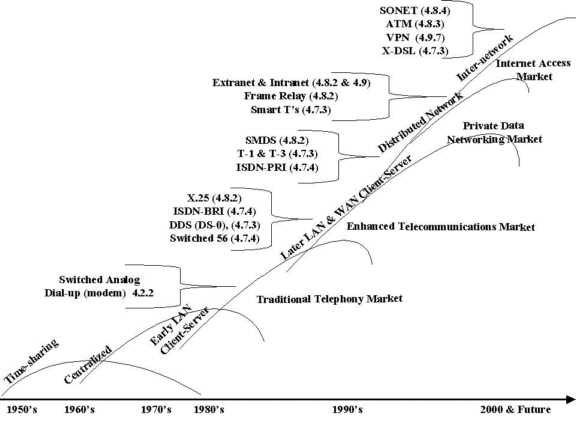

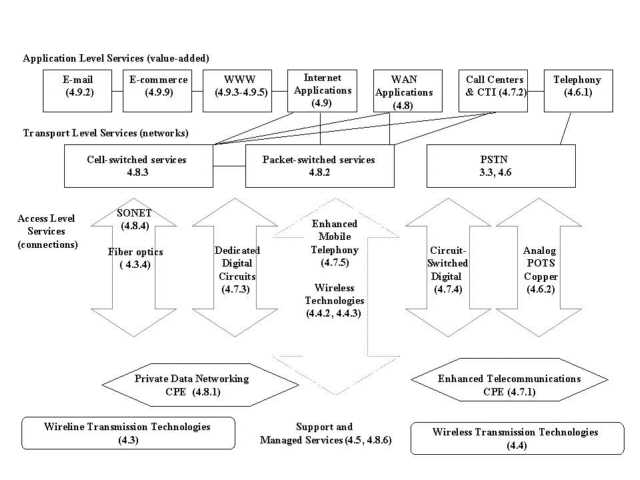

Organization of Chapter 4 is straightforward. Section 4.1 covers ways that hypercommunication services and technologies are converging into a single market. Section 4.2 discusses QOS (Quality of Service) and the many popular definitions of bandwidth. Bandwidth, data rate, throughput, and delay are often lumped together under a popular definition of bandwidth even though they are examples of individual QOS metrics that objectively appraise separate parts of hypercommunications quality and quantity. The next sections differentiate hypercommunication transmission technologies between wireline (4.3) and wireless (4.4). Section 4.5 touches on hypercommunication support services, facilitation, and consolidation (convergence-enabling) technologies. Then, hypercommunication services and technologies related to specific services are divided into four sub-markets: traditional telephony (4.6), enhanced telecommunications (4.7), private data networking (4.8), and Internet (4.9). The Chapter concludes with a summary comparing the usefulness of transmission technologies and service groups to agribusiness (4.10).

Chapter 4 uses technical sources (articles, texts, white papers, and standards) to provide overviews of the three global technological areas and four specific service sub-markets. Further details came from trade publications and informal discussions with industry sources. Where possible, deployment of services was examined firsthand in businesses around Florida. History provides the basis for constructing the three global technology sections and four specific service sub-markets. Now, each sub-market represents a share of the converging hypercommunications market.

There is a danger to approaching hypercommunications through historically defined sub-markets because that view emphasizes regulated monopolies such as telephone ILECs and cable TV providers. Both federal and state regulatory environments have changed dramatically in the past five years. Thinking about separate services and technologies (using either the interpersonal or mass communication model alone) does not apply now as it did when the 1934 Communications Act (the organic legislation to the 1996 TCA) became law. In the year 2000, the hypercommunications infrastructure consists of the PSTN, the Internet backbone, private data networks, along with "dark fiber" and other landline infrastructure (such as cable television systems). Additionally, terrestrial and satellite wireless networks form part of the hypercommunications infrastructure.

4.1 Hypercommunications Convergence

Although the hypercommunication model is replacing the formerly separated interpersonal and mass communication model with a myriad of interconnected choices, convergence is not yet a reality. Hence, while there is some danger in using sub-markets to characterize what hypercommunication services and technologies are, Chapter 4 is best organized around the current structure of hypercommunications. Convergence is the process that will modify today's sub-markets into tomorrow's converged marketplace. It is therefore appropriate to begin by naming dimensions of convergence that are affecting the technologies and sub-markets that form the basis for the rest of Chapter 4.

Virtually every segment of the world's economy (including agriculture) is affected by the convergence of basic telephony, enhanced telecommunications, the Internet, and private networking into hypercommunications. While the inevitability of convergence is taken for granted, the rate of convergence and the form it will take cannot be. Convergence would happen automatically except for institutional and attitudinal barriers including regulation, competition, speed of diffusion, and competing standards. Converge means "to move, turn, or be directed toward each other or toward the same place" [Webster's New World Dictionary, 1960, p. 323].

In a rapidly expanding marketplace with frequent introductions of new services and technologies, convergence means that further horizontal and vertical integration of the hypercommunication market will occur with profound implications for individual agribusinesses. Convergence has already been described as a process where two separate communications models (interpersonal and mass) and their separate telecommunications networks evolve into a single hypercommunications model that uses a mesh of networks. This view matches Alan Stone's definition of "boundary problems" due to the clash of old and new technologies. According to Stone, the first boundary problem was telephone-radio, followed by telephone-computer, TV-radio, etc. [Stone, 1997]. Instead of entirely replacing the old technology, such clashes can lead to new uses for it. For example, radio had been expected to die because of television, and the demise of movie theatres was expected because of VCRs. In both cases, the market was able to absorb the new technology without eliminating the old.

However, because of the diversity of hypercommunication services and technologies it is difficult to speak of boundaries in the same breath as convergence. Instead of discrete boundaries, convergence has analog dimensions. For agribusiness, hypercommunications convergence has five similar dimensions, each based on a clash between old and new. Each dimension is important to agribusiness because the result of the clash will determine capital costs and variable expenses.

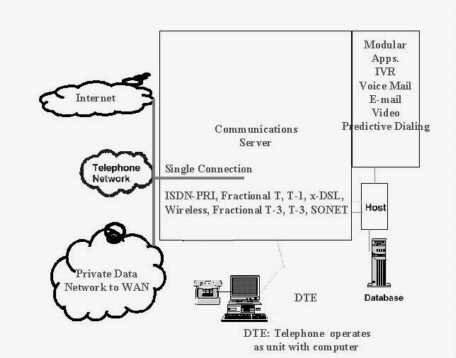

The first dimension of convergence is device-device convergence, the convergence of previously differentiable electronic hardware devices into multi-purpose units. The telephone, fax, television, radio, and computer are beginning to converge together into DTE (Data Terminal Equipment), or multi-functional user devices. As one newspaper article put it:

Convergence is the coming together of computer, broadcast, telecommunication and entertainment technologies. It fulfills the ultimate promise of the information revolution--the ability to receive [and process, store, manipulate] any information anywhere with a single [intelligent] terminal. [The Globe and Mail (Toronto, Ontario), June 26, 1994, p. B4]

Already, employees of an agribusiness may use several separate communications devices (telephone set, fax machine, desktop computer) or sit at a CTI (Computer Telephone Integration) station with fax, e-mail, computing, Internet, video, and voice capabilities. George Gilder argues that convergence is being driven by "the on rush of computer technology invading and conquering" the traditional distinct domains of television, films, consumer electronics, telecommunications, publishing and games [Globerman, Janisch, and Stanbury, 1996, p. 212].

The main question is whether the computer will completely replace the office telephone, fax machine, and copier. Device-device convergence is becoming increasingly important as agribusinesses equip offices and train employees. While there can be substantial savings to capital budgets from purchasing one device to do the job several previously did, a dangerous dependency on a single technology or connection can occur unless there are redundancies. For example (unlike a computer terminal), individual telephone sets do not become useless every time a computer spreadsheet crashes, nor do telephones suffer downtime during every LAN outage.

A second dimension of convergence is content-carrier convergence. Content-carrier convergence is also called confluence:

What is widely called convergence (but should more properly be called confluence) has resulted in the blurring of the traditional distinctions between telecommunications and broadcasting, and between content and carriage. [Globerman, Janisch, and Stanbury, 1996, pp. 212-213]

MS-NBC is one example. A broadcast carrier (NBC) and an ISP-software giant (MSN, Microsoft WebTV) combined through a "strategic alliance" to create editorial content, advertising tie-ins, and common e-commerce opportunities designed to meld formerly diverse content services into commonly controlled programming and distribution. The Time Warner-Turner Broadcasting-AOL-Netscape mega-merger is another example. When a hypercommunications carrier creates content under content-carrier convergence, it can outmaneuver other firms or prevent them from offering content or access. One example of how this works is through web portals. A web browser can be programmed to go to a particular home page (portal) upon session initialization. Wireless devices are factory programmed to exclusively access portals where the carrier has control over content. If carriers obtain enough market power to lock out competing content providers, consumers have fewer choices and agribusinesses can become dependent on a single source for news, information, and communications access. For some rural areas, the situation is already familiar. In many local areas of Florida, AOL is the only possible way to obtain Internet access, though this is less true than it was.

The third dimension of convergence, carrier-device convergence, occurs when the hypercommunications carrier and the communications device used to communicate become the same. An extreme example of carrier-device convergence was used by pre-breakup AT&T. Telephone customers had to lease telephones from AT&T that were manufactured by AT&T's Western Electric subsidiary. A recent consumer action against AOL is another example. The plaintiffs charge that once Internet Explorer for AOL was installed, it became impossible for the computer to be used to access a competing OSP or ISP if the consumer switched providers. The inter-relatedness of hypercommunications technologies can mean that a service provider will be unwilling or unable to provide services if an agribusiness already owns a certain make of equipment or is located in a particular area. Substantial switching costs to change providers can occur in this way.

In the fourth dimension, regulatory convergence, as separately regulated industries come together, so will taxes, regulations, and governmental policy. Quoting George Gilder again, Globerman, Janisch, and Stanbury state what is behind regulatory convergence:

Also, 'convergence assaults century-old regulatory rules that have kept telecommunication and broadcasting in separate legal solitudes. The old distinctions were based on the types of wire, of radio signals, of information and of companies. These barriers no longer make sense.' In fact, convergence would not be a public policy issue if it did not cause conflict among previously separate regulatory regimes, telecommunications, and broadcasting. [Globerman, Janisch, and Stanbury, 1996, p. 213]

Because the lobbying, legislative, regulatory, and legal processes introduce delays, market adjustments typically occur so much sooner than regulatory action that the action can be superfluous. However, regulation may inhibit convergence from occurring or prevent service providers from entering rural areas until regulatory issues are resolved. Other areas of regulatory convergence such as taxation are covered in Chapter 5.

The regulatory task becomes more difficult as new services and technologies (especially the unregulated Internet) create new regulatory territory.

Convergence also includes the ability to combine several technologies to produce new modes of communication. For example, the Internet or (network of networks) combines computers (largely PCs), modems, specialized software, existing telephone lines, packet switching, and a universal transfer protocol (TCP/IP). The result has been a very rapidly growing mode of communications, which in the past two or three years began to evolve from being text-based to sound (including a crude form of telephony) and video. When broadband capacity cable or wireless replaces the twisted pair of copper wires over the last mile, the Internet may be the epitome of convergence. [Globerman, Janisch, and Stanbury, 1996, p. 212]

The fifth dimension of convergence is competitive convergence (market convergence). This dimension is defined through market structure, conduct, and performance. When firms merge or technologies converge the number of firms is reduced. Hence, convergence

refers to any break-down of previous technological barriers among computer software, telephone, cable and entertainment industries. Those barriers arose from the limitations inherent in pre-computerized analog signaling. Convergence is a technological phenomenon, but its wider consequences are being felt by individual businesses, by regulators, and by consumers. [Globerman, Janisch, and Stanbury, 1996, p. 212]

There is an inherent tension between the rates of growth of pro-competitive influences and anti-competitive influences. Pro-competitive influences (deregulation, elimination of regulatory monopolies, uniform taxation across sub-industries, IPOs, spin-offs, interconnection, etc.) stand opposed to anti-competitive influences (technical convergence, mergers, re-regulation, and non-uniform taxation across sub-industries).

The changes convergence is bringing promise to be truly revolutionary according to Gilder:

'The computer industry is converging with the television industry in the same sense that the automobile converged with the horse, the TV converged with the nickelodeon, the word processing program converged with the typewriter, . . . and digital desktop publishing converged with the linotype machine and the letterpress.' [Quoted by Globerman, Janisch, and Stanbury, 1996, p. 212]

However, there is a difference of opinion about how soon the convergence revolution will occur, as shown by two Internet Week headlines: "The One Pipe Approach Gains Momentum" and "Convergence Reality Check: Voice Data Unity Still a Pipe Dream". In the 1999 convergence reality check article, an Internet Week survey found that only eleven percent of IT managers surveyed already had converged networks, while sixteen percent planned to unify their voice-data networks within one year. However, fifty-one percent had no convergence plans or were planning to wait at least three years.

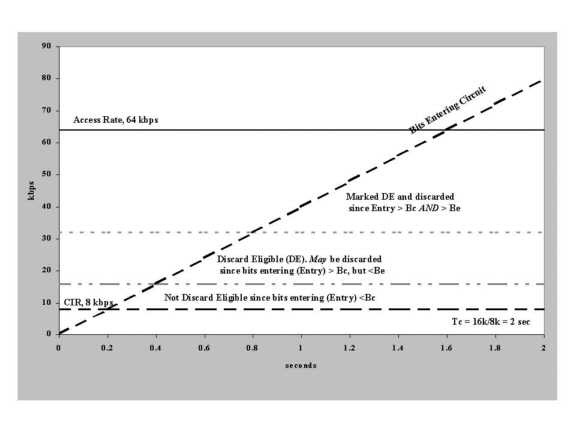

An important barrier to convergence is the lack of SLAs (Service Level Agreements) or signed contracts between buyer and seller setting out QOS guarantees. "The realization of a multiservice utopia will not happen until service guarantees become a fundamental part of the convergence landscape" [Morency, 1998, p. 23]. The increasing importance of hypercommunication SLAs means agribusinesses need to be intimately familiar with bandwidth and other QOS metrics that establish SLA terms. Bandwidth and QOS metrics are the weights and measures of hypercommunications, but are hardly as standardized as those agribusinesses are used to in other markets.

4.2 Bandwidth and QOS (Quality of Service)

No hypercommunications term is subject to more confusion or used more frequently than bandwidth. While an understanding of bandwidth is important to agribusinesses hoping to use hypercommunications services and technologies, bandwidth is not a complete description of the speed, overall quality, or value of a particular service or technology. Bandwidth-related measures are most often used to define and price hypercommunications services and technologies. However, excess reliance on one term obscures a more comprehensive set of characteristics called QOS (Quality of Service) metrics that are more important to agribusiness hypercommunication strategies. This section is designed to be a practical guide for agribusinesses hoping to understand bandwidth and other QOS metrics so as to become more informed hypercommunication buyers.

This section and the following three (4.3 through 4.5) apply generally across the specific service and technology sub-markets discussed in the last half of the Chapter, from 4.6 through 4.9. To provide the right technical foundation, large amounts of supporting technical details are presented here in section 4.2. Readers who are already familiar with signal conversion, how modems work, and the subtleties of bandwidth, throughput, and data rate may go directly to 4.2.3. There, a general QOS model is introduced to provide an analytical framework of agribusiness hypercommunication networks. The reference model integrates QOS metrics and bandwidth with material from Chapter 3 such as the three core engineering problems of communication networks and the four technical objectives of network managers.

Other readers may need to take advantage of all four sub-sections in 4.2, being aware that Tables 4-1 through 4-4 and Figures 4-1 through 4-22 trace the main points, with the text available for additional support. In the first sub-section (4.2.1), bandwidth and five other QOS characteristics most often confused with bandwidth are discussed. The critical distinction between signals and messages and the difference between operational speed and capacity are explained. Then, a practical example of computer modem communication (4.2.2) underscores bandwidth's separateness from other QOS metrics. The last sub-section (4.2.4) presents agribusinesses with a thumbnail sketch of the QOS dimensions used in buying and selling hypercommunication services and technologies.

4.2.1 The Relationship between Bandwidth and Speed

For several reasons, bandwidth has taken on an imprecise meaning that goes beyond its specific technical definition as a capacity measure (as introduced in Chapter 3). One reason for this is that the technical nature of hypercommunications tends to be confusing. Technical terms such as bandwidth become confused with closely related technical terms. A second reason for the overuse of bandwidth is that bandwidth is the unit most often used to price hypercommunications services and to describe infrastructure, networks, and individual connections. This multiplicity of uses causes instant misunderstanding since bandwidth is variously used as a stock measure, a flow measure, an accounting cost, and a capacity constraint. In short, bandwidth is used popularly to compare the speed, capacity, reliability, and quality of hypercommunication services, carriers, and technologies.

Furthermore, there is a lack of uniformity in "expert" opinion about what bandwidth is. As more sources are consulted, more variations in definition are encountered. For example, a well-received book aimed at business MIS and telecommunications managers defines bandwidth as "the speed with which data travels, measured in bits per second (bps)" [Bezar, 1995, p. 75]. However, in Bezar's glossary bandwidth becomes:

A term defining the information carrying capacity of a channel--its throughput. In analog systems, it is the difference between the highest frequency that a channel can carry minus the lowest, measured in hertz. In digital systems, the unit of measure of bandwidth is bits per second (bps). The bandwidth determines the rate at which information can be sent through a channel--the greater the bandwidth, the more information that can be sent in a given amount of time. [Bezar, 1995, p. 421]

The distinction between digital and analog discussed in 3.2.1 helps explain the multiple meanings of bandwidth.

However, the meaning of bandwidth also depends on the relationship among bandwidth, bits, and speed, a complex recipe with three main ingredients. First, a hypercommunications message (voice, data, or video) and the signal that carries it are different entities. Signals can be analog or digital while message content also can be analog or digital. Second, signal transmission may use broadband technology, carrierband technology, or baseband technology. Third, there can be a difference between the upstream and downstream rate and capacity (directional asymmetries) for a variety of scientific and technical reasons. These ingredients need to be examined before QOS can be understood.

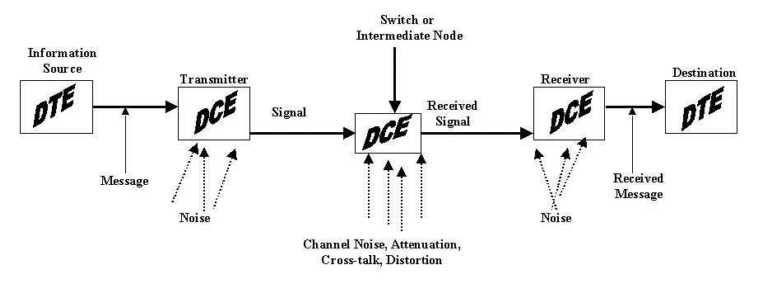

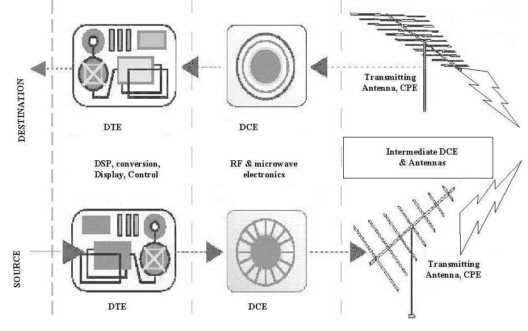

The first ingredient is the distinction between signal domain and message content. The signal-message distinction is rooted in Shannon's mathematical theory of communication, shown in Figure 4-1 [Shannon, 1948; Shannon and Weaver, 1949].

The information content of a message and the domain of the signal are two distinct entities. Messages may have either analog or digital information content (source domain) depending on the equipment (DTE) at the information source and destination. Information sources "represented by a physical quantity that is considered to be continuously variable and has a magnitude directly proportional to the data" are analog [GSA, FED-STD-1037C, 1996, p. A-14]. Analog message content refers mainly to voice telephone calls, though it can include audio, graphics, video, and readings from scientific sensors (such as pressure, temperature, and position). Digital information sources are "represented by discrete values or conditions (or) are discrete representations of quantized values of variables, e.g., the representation of numbers by digits perhaps with special characters and the 'space' character" [GSA, FED-STD-1037C, 1996, p. D-18]. Digital message content includes almost all data communications and Internet traffic. The advent of digital cameras, digital image scanners, CDs, etc. has created digital replacements for previously analog sources.

The signal is an electric current or electromagnetic wave used to carry an encoded representation of the message from the transmitter to the receiver. Signals may be digital or analog depending on the transmitting and receiving equipment, or DCE (Data Communications Equipment), on each end, as shown in Figure 4-1. According to Hill Associates, "Signaling is analog if the signal transmitted can take on any value in a continuum . . . Signaling is digital if the signal transmitted can take on only discrete states" [Hill Associates, 1998, p. 303.1.3, italics theirs]. Switches, routers, and other kinds of intermediate DCE must be in the same domain as the signal or signal conversions must occur. If necessary, signals are decoded into the appropriate source domain before reaching their destination.

Digital signals offer many advantages over analog ones. For example, many separate digital signals may be interleaved and sent together to permit several separate conversations on a single line (multiplexing). Digital messages can be encrypted before their transmission as signals to prevent eavesdropping and to provide security. Often, severely degraded digital signals may be reconstructed, providing perfect copies of the original source. However, excess interference can prevent an entire digital signal from reaching its destination, while distorted analog signals would be garbled but received under similar conditions. Digital signals require more bandwidth than analog signals do, but they are still less costly to transmit [FitzGerald and Dennis, 1999].
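
The interleaving behind multiplexing can be illustrated with a minimal sketch. It assumes simple round-robin time-division multiplexing of equal-length bit streams, which is only one of several multiplexing schemes; the function names `multiplex` and `demultiplex` are hypothetical and chosen for illustration.

```python
from typing import List


def multiplex(streams: List[List[int]]) -> List[int]:
    """Interleave equal-length bit streams round-robin onto one line."""
    if len({len(s) for s in streams}) > 1:
        raise ValueError("this sketch assumes equal-length streams")
    line: List[int] = []
    for time_slot in zip(*streams):  # one bit from each stream, in turn
        line.extend(time_slot)
    return line


def demultiplex(line: List[int], n_streams: int) -> List[List[int]]:
    """Recover each original stream by taking every n-th bit."""
    return [line[i::n_streams] for i in range(n_streams)]


# Three hypothetical conversations sharing a single line.
conversations = [[1, 0, 1, 1], [0, 0, 1, 0], [1, 1, 1, 0]]
shared_line = multiplex(conversations)
recovered = demultiplex(shared_line, len(conversations))
```

Because each receiver knows its position in the rotation, the separate conversations can be pulled apart again without loss.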

Table 4-1 shows the four combinations of digital or analog information content and analog or digital signals. Since each combination has different physical characteristics, each kind requires specialized hardware. These combinations also apply to signal-signal conversions and intermediate transformations that can occur in the transport level of a communication network, especially over long distances. Table 4-1 and Figure 4-1 show that Shannon's (1948) "information source" and "destination" are now known by the more general term, DTE. In a purely discrete system DTE are digital. In a purely continuous system DTE are analog. In a mixed system, DTE can be digital or analog.

Common digital DTE devices include computers and certain digital telephones. These devices send and receive digital messages whether text files, e-mails, voice mail, or graphics and video. Common analog DTE devices include most telephones, microphones, speakers, and certain scientific instruments.

The receivers, transmitters, and switches of Shannon's communications system also are generalized by the term DCE (Data Communications Equipment or Data Circuit-terminating Equipment). DCE are specific to the signal domain (analog or digital) while DTE depend on the message domain as well. The two need not be separate devices from the user's point-of-view.

DCE transmit and receive at each end of a hypercommunication (and often in between) in the appropriate signal domains. A common DCE example is a computer modem. A modem is a transmitter that modulates digital content into an analog signal on one end and a receiver that demodulates analog signals back into digital form to reach the destination. Similar DCE devices exist for other combinations of signal domain and source domain. For example, codecs (coder-decoders) are circuits (or software) serving as built-in DCE used to make conversions within digital DTE.

Therefore, in some cases such as telephones (both analog and digital), DTE and DCE are in the same device. For example, an analog telephone contains a microphone to capture the analog voice source that is then converted by a transducer into electronic waves that are sent as analog signals.

Figure 4-2 (direction of communication read from left to right) depicts signal conversion or transformation for the four cases alluded to in Table 4-1. In a purely continuous system (upper right, Figure 4-2), analog messages are modulated onto analog signals (continuously varying representations of the source message) over a carrier wave as in the cases of POTS and traditional broadcast radio and TV. A carrier is a signal with known characteristics that is modulated to carry information. Using a carrier, the receiver can extract the message because it knows the characteristics of the carrier wave. However, noise or unintended changes to the carrier will be interpreted as part of the information. The bandwidth (transmission capacity) of channels over which analog signals pass is the difference in Hertz (cycles per second) between the minimum and maximum frequencies.
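
The definition of analog channel bandwidth as a frequency difference can be worked through in a short sketch. The 300 Hz and 3,400 Hz limits used below are an assumed, nominal passband for a voice-grade telephone channel and are not taken from the text; the function name `analog_bandwidth_hz` is likewise hypothetical.

```python
def analog_bandwidth_hz(min_freq_hz: float, max_freq_hz: float) -> float:
    """Bandwidth of an analog channel: maximum minus minimum frequency."""
    if max_freq_hz < min_freq_hz:
        raise ValueError("max_freq_hz must not be below min_freq_hz")
    return max_freq_hz - min_freq_hz


# Assumed, illustrative passband for a voice-grade channel.
voice_bandwidth = analog_bandwidth_hz(300.0, 3400.0)
print(voice_bandwidth)  # 3100.0
```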

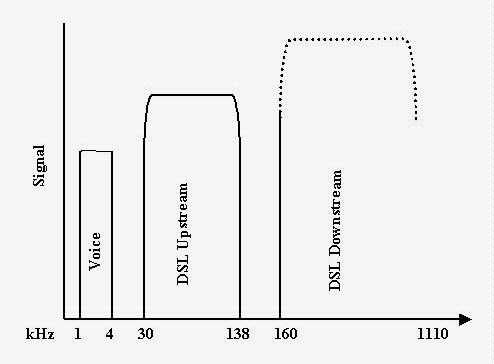

A purely discrete system (shown in the lower right of Figure 4-2) features a digital source carried by a digital signal (coded word representation of the source message) as in the case of Ethernet, ISDN, and DSL. With digital signal transmission, bandwidth is expressed as a bit rate (bits per second, bps). Bandwidth is expressed in Hertz (Hz, cycles per second) for analog-analog transformations.

However, for the other two cases (mixed systems), where conversion (rather than transformation) occurs, bps and Hz are often confused as the unit in which to express bandwidth. In DAC (Digital source to Analog signal Conversion), shown on the lower left of Figure 4-2, the digital domain of the message is mapped onto an analog signal domain in order to reach the destination. In ADC (Analog source to Digital signal Conversion), shown in the upper left of Figure 4-2, the analog domain of the message must be mapped onto a digital signal domain. In such mixed systems, both Hz and bps may be used to describe bandwidth, depending on the context.
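
The four source-signal combinations and the unit in which bandwidth is usually stated for each can be summarized in a small lookup. This is only a sketch that paraphrases the cases described in the text; the names `Domain` and `classify` are hypothetical.

```python
from enum import Enum


class Domain(Enum):
    ANALOG = "analog"
    DIGITAL = "digital"


def classify(source: Domain, signal: Domain) -> str:
    """Name the system type for a (message source, signal) domain pair."""
    if source is Domain.ANALOG and signal is Domain.ANALOG:
        return "purely continuous: bandwidth in Hz"
    if source is Domain.DIGITAL and signal is Domain.DIGITAL:
        return "purely discrete: bandwidth in bps"
    if source is Domain.DIGITAL and signal is Domain.ANALOG:
        return "mixed (DAC): Hz or bps, depending on context"
    return "mixed (ADC): Hz or bps, depending on context"


for src in Domain:
    for sig in Domain:
        print(f"{src.value} source, {sig.value} signal -> {classify(src, sig)}")
```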

In ADC, analog messages may be converted into digital signals through codecs (coder-decoders) to become signal pulses. Typically, these pulses are modulated over a carrier pulse as in the case of digital wireless mobile telephone. Computer modems and cable modems perform both ADC and DAC. A modem modulates digital data (messages) into analog signals when it sends and demodulates analog signals to digital data when it receives. There can be several such signal conversions at intermediate DCE between the sender and receiver.

Analog signal modulation refers to alterations made by DCE in the characteristics of analog carrier waves, impressed on the amplitude (signal strength), phase (wave phase) and/or the base frequency of the wave. Analog signals (continuous waves) may be modulated (coded) in several methods including AM (Amplitude Modulation), FM (Frequency Modulation), PM (Phase Modulation), and QAM (Quadrature Amplitude Modulation).

Digital signals (discrete pulses) are modulated (encoded) through various kinds of PCM (Pulse Code Modulation) such as PAM (Pulse Amplitude Modulation), PDM (Pulse Duration Modulation), and Pulse Position Modulation (PPM). Digital signals are aperiodic (non-repeating patterns) so frequency cannot be used to describe them. However, a digital signal can be approximated by an n-th order series of harmonic sine waves. Each harmonic has its own amplitude, phase, and frequency. The minimum significant spectrum is the minimum frequency range (frequency spectrum) needed to represent the original signal and n-th order harmonics. The bandwidth of a signal is the width of the frequency spectrum it occupies.
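The harmonic approximation described above can be illustrated with a short sketch. It assumes an idealized alternating bit pattern, that is, a periodic square wave of amplitude plus or minus one. This is a simplification that is not drawn from the source, since real data patterns are aperiodic. The function names and the 1,000 Hz fundamental frequency are hypothetical.

```python
import math


def square_wave_approx(t, fundamental_hz, n_harmonics):
    """Approximate a +/-1 square wave at time t (seconds) by summing
    its first n_harmonics odd sine harmonics (a Fourier series)."""
    total = 0.0
    for k in range(n_harmonics):
        h = 2 * k + 1  # a square wave contains only odd harmonics: 1, 3, 5, ...
        total += math.sin(2 * math.pi * h * fundamental_hz * t) / h
    return 4 / math.pi * total


def spectrum_width_hz(fundamental_hz, n_harmonics):
    """Frequency of the highest harmonic kept, i.e. the spectrum width
    (measured from 0 Hz) that a channel must pass to carry the approximation."""
    return (2 * n_harmonics - 1) * fundamental_hz


if __name__ == "__main__":
    f0 = 1000.0           # hypothetical fundamental frequency in Hz
    t_mid = 1 / (4 * f0)  # middle of the positive half-cycle (ideal value is 1.0)
    for n in (1, 3, 10, 50):
        print(n, spectrum_width_hz(f0, n), round(square_wave_approx(t_mid, f0, n), 4))
```

Keeping more harmonics brings the approximation closer to the ideal pulse but widens the frequency spectrum the channel must pass, which is the trade-off behind the minimum significant spectrum.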

When expressed in Hertz, the bandwidth of an analog medium for a digital signal provides a range of frequencies that can be transmitted. If this range is smaller than the minimum significant spectrum, more bandwidth is needed to allow the receiver to reproduce the original source signal.

When the end-to-end observed speed of communication between sender and destination DTE is considered, other characteristics beyond the bandwidth (capacity) of a single link or the bit rate of a given DCE are involved. These characteristics (summarized in Table 4-2) include throughput, data rate, delay, and jitter. Importantly, the focus of the first four characteristics in the table (each of which is often misleadingly called bandwidth) is primarily on digital bits.

When expressed in Hertz, bandwidth represents the raw capacity of a noiseless, analog channel before digital data are imposed. When expressed in bps, bandwidth represents the maximum attainable bit rate in a single direction of a noiseless link with no other traffic. The bandwidth or more precisely bit rate associated with a given bandwidth in Hz depends on the baud rate and coding rate. A sampling or baud rate is directly proportional to the bandwidth. The coding rate (number of levels in the code) multiplied by the sampling (baud) rate equals the bit rate, the rate at which DCE are designed to operate. Environmental noise can decrease the coding rate so that the difference between bit rate and bandwidth becomes larger. Noise and quantizing error can further increase errors so that the gap between data rate and bit rate becomes larger.

The bit rate (operational speed of DCE in bits per second) that can be accommodated by any medium is proportional to bandwidth. Therefore, the greater the bit rate, the larger the bandwidth (capacity of a connection) needs to be. Thus, bandwidth typically exceeds the bit rate, which in turn exceeds the data rate.

The third characteristic, data rate (also known as the data signaling speed) measures "the aggregate rate at which data pass a point in the transmission path", typically expressed in bits per second (bps) [GSA, FED-STD-1037C, 1996, p. D-4]. Since the data rate is an error-free rate from DCE to DCE, it may be less than the bit rate and always below the bandwidth.

The next characteristic, throughput, can be expressed in two ways. Throughput is an end-to-end (DTE to DTE) measure that can be expressed as "pure throughput", a theoretical capacity (maximum attainable bps), or as an observed "throughput rate" in bps at time t, where bps_t is an actual observation [Sheldon, 1998, p. 972]. Both pure throughput and the throughput rate (also called effective throughput) differ from bandwidth because they are end-to-end measures of the rate at which user information (not counting retransmission of errors or transmission of overhead) is processed and transmitted. Pure throughput is similar to bandwidth in that it is a capacity, but it is an end-to-end capacity, rather than the capacity of a point-to-point access line or transport link. For example, the compression of voice or data files improves throughput but does not change bandwidth, bit rate, or the preceding characteristic, data rate.

Throughput, because it is an end-to-end measure that includes compression but excludes error and overhead, cannot be compared directly to bandwidth, data rate, or bit rate. However, due to compression and in spite of overhead, an observed throughput rate (sometimes called information rate) often is greater than the data rate, bit rate, or even the bandwidth of a particular network segment between the sender and destination. Throughput is the speed measure experienced most by users.
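A minimal sketch may help show how an observed throughput rate can exceed the data rate. The model below is an assumption made for illustration and is not taken from the source: overhead is treated as a fixed fraction of the bits carried, and compression as a fixed ratio of original to compressed size. The function name and the sample values are hypothetical.

```python
def effective_throughput_bps(data_rate_bps, compression_ratio, overhead_fraction):
    """Estimate user-information throughput under a simplified model.

    data_rate_bps:      error-free rate on the line, in bits per second
    compression_ratio:  original size divided by compressed size (1.0 = none)
    overhead_fraction:  share of line bits spent on headers and framing
    """
    if not 0 <= overhead_fraction < 1:
        raise ValueError("overhead_fraction must be in [0, 1)")
    if compression_ratio <= 0:
        raise ValueError("compression_ratio must be positive")
    payload_rate = data_rate_bps * (1 - overhead_fraction)  # bits left for user data
    return payload_rate * compression_ratio                 # user bits delivered per second


if __name__ == "__main__":
    # Hypothetical values: 28.8 kbps line, 10 percent overhead.
    print(effective_throughput_bps(28_800, 1.0, 0.10))  # no compression
    print(effective_throughput_bps(28_800, 2.0, 0.10))  # 2:1 compression
```

Under these assumptions, the uncompressed case falls below the data rate because of overhead, while the compressed case rises above it.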

Delay, another QOS characteristic often erroneously confused with bandwidth, is reflected in throughput. Delay (also called latency) is the actual length of time it takes a bit to travel across a transmission line [Sheldon, 1998, p. 256]. There are three kinds of delay: propagation delay, switching delay, and queuing delay. Propagation delay results from transmission media variables. For example, copper wire has a higher propagation delay than fiber optic cable, while satellite transmissions have higher propagation delays than line-of-sight wireless transmissions do. Propagation and switching delay do not depend on usage levels. However, queuing delay is zero at low use and rises due to congestion bottlenecks that result from high network loads (a systemwide ratio of effective throughput to capacity). Taken together, switching and queuing delay are often called throughput delay since they are variable. The number of hops a signal is switched over a network and the overall network load tend to vary from moment to moment.

The variation in delay, jitter, is another way to indicate quality. Jitter refers to the variability of delay measures through time. Low jitter and delay are critical to voice conversations and real-time broadcasting. The highest quality connections are those with a tightly distributed average delay of less than 100ms. Poorer quality connections tend to experience longer delays and greater jitter. For example, satellite transmissions often average near 350ms, with a range from 150ms to 600ms. Pure throughput assumes no jitter and only propagation delay. Since effective throughput is an actual measure, it can reflect both switching and queuing delays. A series of effective throughput observations are needed to measure jitter.
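Because jitter is defined only as the variability of delay through time, it has to be computed from a series of observations. The sketch below assumes one possible statistic, the population standard deviation of the delay samples, alongside the mean and the range. The choice of statistic, the function name, and the sample values are illustrative assumptions and are not measurements from the source.

```python
import statistics


def delay_summary(delays_ms):
    """Summarize a series of delay observations (milliseconds).

    Jitter is taken here to be the population standard deviation of the
    observations; other definitions are in use.
    """
    if not delays_ms:
        raise ValueError("at least one delay observation is required")
    return {
        "mean_ms": statistics.mean(delays_ms),
        "jitter_ms": statistics.pstdev(delays_ms),
        "range_ms": max(delays_ms) - min(delays_ms),
    }


if __name__ == "__main__":
    terrestrial = [82, 85, 88, 84, 86]     # hypothetical low-delay, low-jitter link
    satellite = [150, 320, 600, 350, 330]  # hypothetical link spanning 150ms to 600ms
    print(delay_summary(terrestrial))
    print(delay_summary(satellite))
```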

Before proceeding to a computer modem example that clarifies the entries in Table 4-2, it is important to understand the second ingredient of the complex mixture of speed and QOS, the transmission (or signal) technology. First, the word broadband has to be defined. Broadband is used to refer to a range of capacity (or speed). Broadband also is used to describe a particular analog signal transmission technology. Egan characterizes the speed and capacity connotation as follows:

The term broadband is used to describe a high-speed (or high-frequency) transmission signal or channel. It is the functional opposite of narrowband, which connotes relatively low speed. While large-scale telephone network trunk lines always operate at broadband speeds, local phone lines connecting households and small businesses to the trunk line are limited to narrowband speeds. . . . The transmission speed of a broadband communications channel is usually measured in megabits per second (Mbps). . . . A low-speed narrowband channel, like today's basic phone line is usually measured in kilobits per second (kbps). . . . [Egan, 1996, p. 6]

The FCC defines broadband as an "elastic" definition that covers bandwidths of 200kbps and over [FCC, October 1999, p. 16-17]. The difference among broadband, narrowband, and wideband is subjective. Broadband networks most frequently are defined as "capable of multi-megabit speeds" [Sheldon, 1998, p. 112; Kumar, 1995, p. 185] or those with capacities greater than 2.048 Mbps [Klessig and Tesink, 1995, p. 1]. However, broadband is also taken to mean "any data communications with a rate from 45 to 600 Mbps" [Peebles, Keifer, and Ramos, 1995]. Wideband typically includes capacities greater than the typical (narrowband) analog telephone line of 4kHz but less than broadband capacity [GSA, FED-STD-1037C, 1996, pp. W-3-4].

Broadband also describes a specific signal technology where signals themselves (rather than bandwidth) are classified as baseband, broadband, and carrierband. This second use of broadband refers to a type of analog signal transmission technology (typically with a digital source domain) using shared lines. Two other signal transmission technologies are baseband and carrierband.

Broadband signal technology sends multiple analog signals over a range of frequencies (in channels, similar to radio frequencies) over shared conduit. Since noise tends to accumulate in such a scheme, amplifiers are used to regenerate attenuated signals. Because multiple channels are available, many messages may travel at once over a broadband transmission link without automatically exhausting available bandwidth (capacity). Broadband signal technologies distribute modulated data, audio, and video signals over coax, twisted pair, or fiber optic cable. Broadband is the easiest way to deliver a common signal to a large group of locations. Without the addition of electronics that limit eavesdropping, it is possible (with the right knowledge and equipment) to intercept broadband transmissions.

Broadband includes networks that multiplex multiple, independent networks onto channels in a single cable. This may be done through FDM (frequency division multiplexing) where two or more simultaneous and continuous channels are derived by assigning separate parts of the available frequency spectrum (bandwidth) to each channel [GSA, FED-STD-1037C, 1996, p. F-17]. Under FDM, signals that are coincident in time are separated in space so that a particular subscriber receives some of the total bandwidth of the connection that serves their location all of the time.

Broadband network technologies allow many networks or channels to coexist on a single cable. Broadband traffic from one network does not interfere with traffic from others because each network uses different radio frequencies to isolate signals by vibrating each signal at a different frequency as it moves over the conduit. Used in this sense, broadband is the opposite of baseband, which separates digital signals by sending them at timed intervals. A broadband subscriber receives analog signals over his own channel on a shared link while a group of baseband subscribers receives common digital signals over a link that is not shared.

Baseband signals are digital (always having digital source domains as well), requiring all of the available bandwidth in a single, shared connection. Broadband signals are analog (having either analog or digital sources) and move over unshared channels on a shared connection [Hill and Associates, p. 303.1.5, 303.1.7]. Carrierband signals are a hybrid of the two.

Baseband signals are used to connect stations on Ethernet LANs and in localized point-to-point or dedicated circuit communication (such as ISDN and HDSL). Baseband signals are transmitted without modulation on a carrier wave, having been digitally imposed on a single base frequency [FitzGerald and Dennis, 1999]. Baseband transmissions use repeaters to regenerate bi-directional attenuated signals. However, even when using repeaters, baseband transmissions are limited in distance compared to broadband. For this reason, baseband signal transmission is frequently used by CPE networks such as LANs.

Any guided medium can be used for baseband signal transmission. The baseband of a broadband signal is the original frequency range before modulation into a more efficient, higher frequency range. Baseband network technologies use a single carrier frequency range (channel) and require all stations attached to the network to participate in every transmission. However, only one digital baseband signal (using the entire capacity of the channel) at a time is permitted. Time Division Multiplexing (TDM) is used to allow users to share connections by taking turns so that all of the bandwidth is used some of the time. Under TDM, a digital signal that is coincident in space is separate in time.
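The contrast between FDM (some of the bandwidth all of the time) and TDM (all of the bandwidth some of the time) can be sketched as follows. Equal frequency shares and simple round-robin turn-taking are assumptions made for illustration. The subscriber names and the 6,000 Hz total are hypothetical.

```python
def fdm_allocation(total_hz, subscribers):
    """FDM: split the spectrum into equal, non-overlapping bands.
    Each subscriber keeps its own band (low_hz, high_hz) all of the time."""
    share = total_hz / len(subscribers)
    return {name: (i * share, (i + 1) * share) for i, name in enumerate(subscribers)}


def tdm_schedule(subscribers, n_slots):
    """TDM: subscribers take turns; whoever holds a time slot
    uses the entire bandwidth for the length of that slot."""
    return [subscribers[slot % len(subscribers)] for slot in range(n_slots)]


if __name__ == "__main__":
    users = ["office_a", "office_b", "office_c"]  # hypothetical subscribers
    print(fdm_allocation(6000, users))  # separate bands, simultaneous in time
    print(tdm_schedule(users, 7))       # one shared band, separate in time
```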

Carrierband is a type of baseband technology where the signal is modulated before transmission over a baseband connection. Standard baseband transmission is un-modulated but is multiplexed to allow multiple transmissions to occupy the path at once. Carrierband technology uses signals that are modulated but not multiplexed so that the entire bandwidth of the channel is available in separate channels (for separate uses) for a single subscriber such as an agribusiness. Individual subscribers send and receive a mix of digital and analog signals over a dedicated (un-shared) link that can carry more than one kind of traffic at once. For this reason, carrierband is sometimes called single-channel broadband and is used in HSLN (High-Speed Local Networks) to link "mission critical" processors to each other or processors to peripherals [Maguire, 1997]. Carrierband technology is also used as a digital access level connection, such as in certain DSL services.

At the access level, cable TV broadband users share capacity for Internet access with dozens to hundreds of other subscribers over a broadband network. Telephone company digital lines such as DSL and ISDN serve individual subscribers only. Agribusinesses are affected differently by each of the three signal technologies. Baseband technologies are commonly part of Ethernet LANs at agribusiness offices. Broadband technologies support services that are offered by Cable TV and wireless firms, typically to small offices and farm residences. Carrierband technologies are sold to agribusinesses by ILECs, ALECs, ISPs, and others but are subject to distance limitations that will be covered in 4.3.

The third and final ingredient in the complex mixture of capacity, speed, and bandwidth is directionality or symmetry. For example, the upload and download bandwidth to bps relationship for a digital source to analog signal conversion depends on both the Nyquist Theorem [1924, 1928] and Shannon's Law [Shannon, 1948]. Furthermore, various sampling, symbolization, and encoding schemes are used by DCE to modulate the signal in order to impose or pack the message content into an electromagnetic pulse or onto a carrier wave. These concepts can introduce directional asymmetries that get specific attention in the next example regarding computer modem communications.

4.2.2 Computer Modems: Bandwidth and QOS

An example concerning the ubiquitous computer modem will further differentiate bandwidth from other QOS metrics. Modems are a particular kind of DCE designed only to work with analog signals and digital sources. Indeed, some say that the modem is a DCE that has reached maturity and is not part of the converged future. However, since modems are broadly representative of DCE (while computers represent DTE) the following example gives a simple lesson in bandwidth, speed, and analog-digital conversion that has broader applicability.

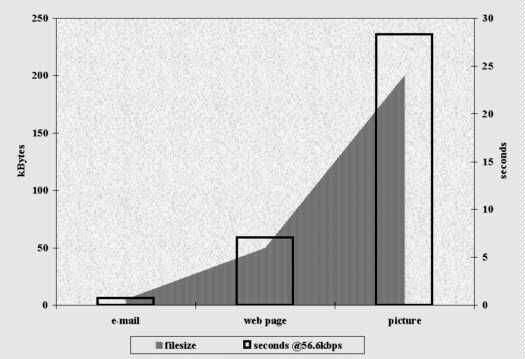

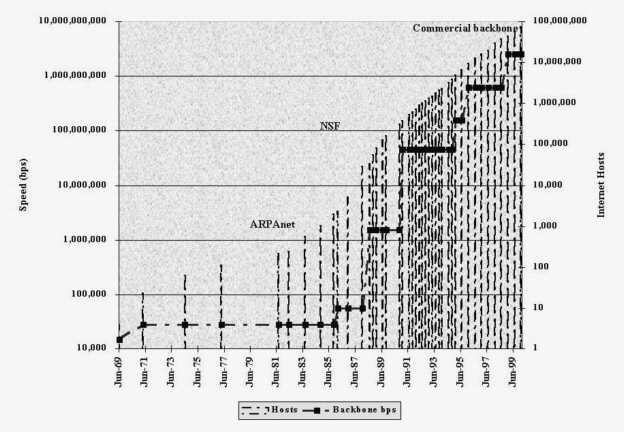

The modem is still a dominant form of connection to the Internet and data networking for households and for many agribusinesses as Figure 4-3 reveals. In 1999, Nielsen NetRatings found that fewer than six percent of U.S. households had Internet access faster than that offered by 56k modems [CyberAtlas, 2000].

Indeed, a majority of households (53%) own modems that are slower than 56 kbps. Additionally, modems that perform at relatively high data rates in urban settings may perform poorly or not at all in rural areas. Thus, the expected obsolescence of the modem will not occur for three to five years for many Florida agribusinesses because of infrastructure problems [FPSC, 1999]. Chapter 5 will provide more details.

The bandwidth of the analog voice channel (telephone line) as shown in Figure 4-4 is 3200Hz wide (3500Hz-300Hz). This bandwidth was engineered decades ago by AT&T's Bell Labs (when bandwidth was considered to be an extremely scarce resource) as the absolute minimum needed to adequately represent the human voice.

Natural speech is concentrated between 75Hz and 8000Hz, though the human ear has a range of recognition from 75Hz up to 20kHz [EAGLES, 1997]. When the nationwide Bell System was built, filters were placed to block frequencies above 3500Hz from being carried on local lines. A modem attempts to transmit at the highest operational speed (bit rate) that it can given this bandwidth limitation, the equipment it connects with, and the amount of noise present in the line and introduced in the conversion process.

Analog-digital conversion (ADC) produces line noise as a byproduct and is, therefore, more sensitive to bandwidth limitations than DAC. It is for this reason that the so-called 56k modems (now generally known as V.90 standard modems) have a higher download than upload speed. Before this asymmetry of speed is covered, it is important to understand the steps in each conversion process.

ADC takes a continuous (in time and amplitude) waveform and converts it to a time-discrete, but amplitude-continuous series of pulses. There are three steps (sampling, quantizing, and coding) in ADC. The first step, sampling, begins the process of converting the waveform into pulses by sampling amplitude values every T microseconds (μs). During sampling, the waveform is scaled into sampling intervals where the sampling frequency, 1/T is also known as the modem's symbol rate.

According to the Nyquist Theorem, the symbol rate (also called the baud rate) used cannot exceed twice the bandwidth. So, for example, a 3200Hz (cycles per second) signal could be sampled with a frequency of up to 6400 symbols per second. Thus, T, the size of a single sampling interval (symbol), is 156.25 μs, which is the minimum constant interval at which the modem samples. In reality, 3200 baud is a commonly sampled rate because 6400 baud is unattainable in practice. Each sample becomes a separate PAM (Pulse Amplitude Modulation) pulse.
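The arithmetic in this paragraph can be checked with a brief sketch. The function names are hypothetical, and the only inputs are the figures given in the text (a 3200Hz channel and the resulting 6400 symbols per second ceiling).

```python
def max_symbol_rate(bandwidth_hz):
    """Nyquist ceiling on the symbol (baud) rate: twice the bandwidth."""
    return 2 * bandwidth_hz


def sampling_interval_us(symbol_rate):
    """Length T of one sampling interval in microseconds, where T = 1 / rate."""
    return 1_000_000 / symbol_rate


if __name__ == "__main__":
    ceiling = max_symbol_rate(3200)       # voice channel bandwidth from the text
    print(ceiling)                        # symbols per second
    print(sampling_interval_us(ceiling))  # shortest interval, in microseconds
    print(sampling_interval_us(3200))     # interval at the commonly used 3200 baud
```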

The Nyquist Theorem further specifies the levels to be used in the next step of ADC, quantization. Given bandwidth W, the highest signaling rate C in a noiseless channel is given by C = 2W log2(M), where M is the number of coding levels [Maguire, 1997, Module 5, p. 13a]. The number of levels determines the number of bits per quantization level. In quantizing, digits are assigned to the sampled signals by rounding off (quantizing) the PAM pulses using non-linear companding schemes. When 256 discrete amplitude levels are used to compand the original signal into a quantized sample, eight bits (log2 M) are necessary. The number of bits per level is log2 M, so that a 512 level representation contains exactly nine bits, etc.

The third step is coding. In coding, the amplitude levels are mapped into bits that can be understood by digital DTE or DCE. The standard coding rate is eight or nine bits. Given the 3200Hz bandwidth and an eight bit coding scheme (based on a 256 level quantization), the highest bps to be expected under the Nyquist Theorem from a noiseless telephone line would be 51.2 kbps.
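The noiseless-channel calculation above can be reproduced in a few lines. This sketch applies the relation C = 2W log2(M) to the figures in the text. The function names are hypothetical.

```python
import math


def bits_per_level(levels):
    """Bits needed to code one sample when `levels` amplitude levels are used."""
    return math.log2(levels)


def nyquist_capacity_bps(bandwidth_hz, levels):
    """Highest signaling rate of a noiseless channel: C = 2 * W * log2(M)."""
    return 2 * bandwidth_hz * math.log2(levels)


if __name__ == "__main__":
    print(bits_per_level(256))              # bits per sample for 256 levels
    print(bits_per_level(512))              # bits per sample for 512 levels
    print(nyquist_capacity_bps(3200, 256))  # bps for the 3200Hz voice channel
```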

However, two forms of noise reduce the maximum rate in practice. The first of these, quantizing noise, results from the second step and increases with the number of levels. Hence, it is not possible to add quantizing levels ad infinitum or the resulting line noise would cause data rates to fall. Once quantizing noise has been added to the line, it remains there (slowing the rate down) even after digital conversion has been accomplished. This is a particularly limiting case for sending (but not necessarily for receiving) data over a line. To limit added noise, only the most robust levels can be used for coding, so that in practice 256 levels may translate to 128 [3com Corp., 1999, p. 4]. Other forms of noise on lines (a particular problem in rural areas) affect speed in each direction.

Shannon's work on coding led to the development of Shannon's Law, which states that the maximum speed, C, equals W log2(1 + S/N) bps, where S/N is the un-standardized signal to noise ratio. The signal to noise ratio (SNR) is commonly measured in dB (decibels) where the SNR equals 10 log10(S/N) dB. The 33.6 kbps upload bit rate (maximum operational speed) advertised for both V.34+ and V.90 modems (over 70 percent of those in use) would require an SNR of 31-32dB for such a speed to be achieved. That SNR is far higher than that typically found, even on lines with a short distance to the serving CO. Thus, noise and the engineered bandwidth in Hz are the chief limitations on the usable capacity of an analog line in bps.
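The SNR requirement quoted above can be derived by inverting Shannon's Law. The sketch below assumes the 3200Hz voice-channel bandwidth used earlier in this section. The function names are hypothetical.

```python
import math


def shannon_capacity_bps(bandwidth_hz, snr_db):
    """Shannon's Law: C = W * log2(1 + S/N), with the SNR supplied in dB."""
    snr_linear = 10 ** (snr_db / 10)  # convert dB back to a plain power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)


def required_snr_db(target_bps, bandwidth_hz):
    """Invert Shannon's Law: the SNR (in dB) needed to reach target_bps."""
    snr_linear = 2 ** (target_bps / bandwidth_hz) - 1
    return 10 * math.log10(snr_linear)


if __name__ == "__main__":
    needed = required_snr_db(33_600, 3200)     # 33.6 kbps over a 3200Hz channel
    print(round(needed, 1))                    # SNR in dB
    print(shannon_capacity_bps(3200, needed))  # round trip back to the target rate
```

The result falls between 31 and 32 dB, matching the range stated in the text.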

To review, the baud rate multiplied by bits/baud (the coding rate) yields the bit rate (operational maximum) of the modem or other DCE. However, high line noise and the presence of noise from quantization itself place limitations on the achievable data rate. When a 28.8 kbps bit rate modem is advertised, it usually is a 3200 baud unit with a signaling (coding) rate of 9bits/baud. Higher speeds are obtained by increasing the baud rate, but bauds above 3429 are rarely obtained in practice due to quantization and/or line noise. Rural telephone lines are notoriously noisy, partially due to the age of the copper lines and also because noise is a function of the distance to the telephone CO.

DAC reverses the steps of ADC. First, the digital bits are modulated at a certain signal speed, governed as before by the Nyquist Theorem. Then, interpolation is used to reconstitute an analog profile of the digital signal pulses. Finally, the digital pulses are decoded into analog waveforms for analog transmission.

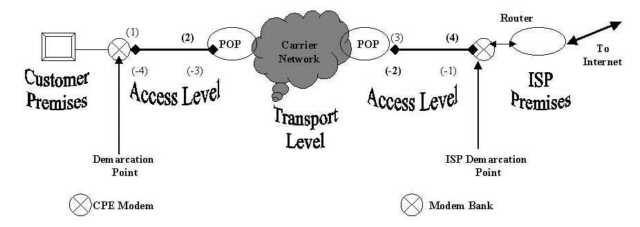

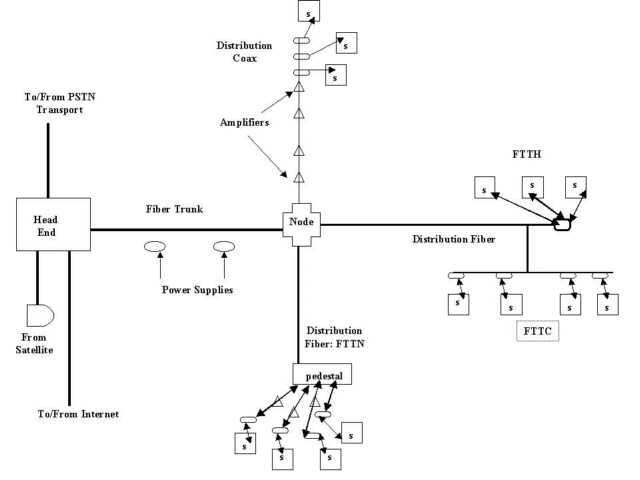

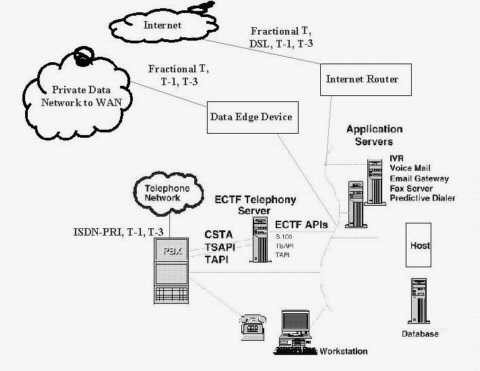

Importantly, physical laws preventing speeds greater than 33.6 kbps on upload are less constricting on the download side if certain conditions are met. An important reason is that quantizing noise is not present in DAC. To see this, consider how modems are used to connect with the Internet, a process that is illustrated in Figure 4-5.

Moving from left to right, a modem transmission (upload) follows a path from the customer premises (1) over the access level to the local serving central office (2) of the telephone company. While most calls to modems are local, they commonly are made to ISP numbers in a different telephone exchange with a different telephone CO (3), so the transmission flows over the telephone company's transport level to reach the ISP's CO. Then, the call flows over the ISP's telephone access level to reach a modem bank at the ISP premises (4).

An upload consists of no fewer than four signal conversions, labeled one through four. A download consists of no fewer than four conversions, labeled negative one through negative four. Of all eight conversions, only three (shown in bold as 2, -2, and -4) are ADC conversions that result in noise that would affect the signal in any direction. The last ADC (-4) occurs once the signals are no longer on the wire, so it does not create quantization noise on any line.

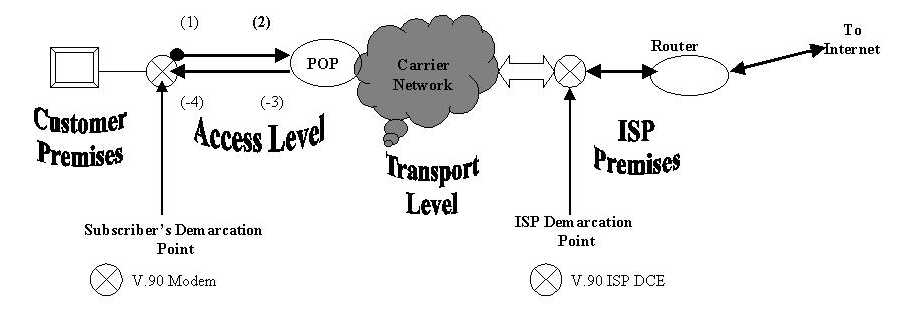

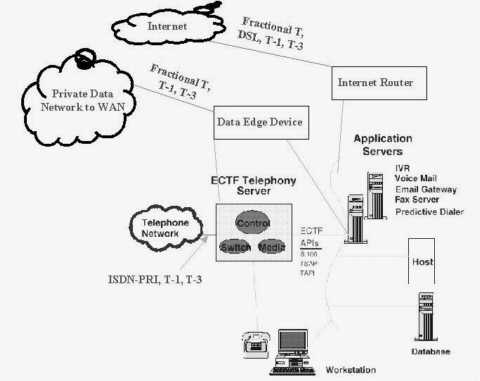

Another example, with a 56 kbps modem, shows the directional limitations of DAC and ADC. When the 56 kbps modem calls over a special digitally equipped telephone line and hooks up with compatible equipment, Shannon's Law and the Nyquist Theorem do not vanish. However, due to the absence of quantizing noise and the removal of ADC on the opposite end, download baud rates of up to 8000 may be negotiated with a seven bit per baud coding rate. The 56 kbps modem process is illustrated in Figure 4-6.
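The relation reviewed earlier (baud rate multiplied by bits per baud equals bit rate) accounts for both the 28.8 kbps figure and the 56 kbps download figure. A minimal sketch follows. The function name is hypothetical, and the inputs are the baud and coding rates quoted in the text.

```python
def bit_rate_bps(baud_rate, bits_per_baud):
    """Operational bit rate of a modem or other DCE: symbols/second x bits/symbol."""
    return baud_rate * bits_per_baud


if __name__ == "__main__":
    print(bit_rate_bps(3200, 9))  # 28.8 kbps modem: 3200 baud at 9 bits per baud
    print(bit_rate_bps(8000, 7))  # 56 kbps download: 8000 baud at 7 bits per baud
```

The download case reaches a higher bit rate with fewer bits per baud because the baud rate itself is much higher once quantizing noise and the far-end ADC are removed.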

Figure 4-6 differs from the V.34+ (Figure 4-5) case in one obvious way. The ISP has purchased a special digital line (a channelized T-1 or an ISDN-PRI T-1) that allows the V.90 equipment at the ISP's premises to interact directly with the telephone company's transport network. Instead of a telco POP (Point of Presence) on the ISP end, a block arrow is drawn to show that the ISP connection is physically on the transport network. In addition, only one conversion (2) is in bold since that is the only ADC conversion that creates quantizing noise that would follow it to the Internet site at which uploading would occur. The download path is free from quantizing noise on the line. Of course, other kinds of line noise would still be present, creating an important reason that 56 kbps Internet access is spotty or even completely absent in many parts of rural Florida.

By now, it should be clear that bandwidth is both a practical and a theoretical measure. Shannon's Law and the Nyquist Theorem provide upper theoretical bounds on channel capacity, while modem advertisements claim a lower theoretical number (an operational bit rate), perhaps more realistic, but still abstract from reality. Line noise and the CPE (modem or other device) on the other side are additional variables. For this reason, modems and other DCE are designed to operate at many different bit rates (operational speeds) because of the variability involved. During the course of a session, modems (like other CPE) must synchronize rates with the bit rate and line conditions on the other end. Hence, during each session, a 56 kbps modem will dynamically adjust (negotiate) upload bit rates from 300 bps to 33.6 kbps (and many levels in between) and download bit rates from 56 kbps down to 300 bps.

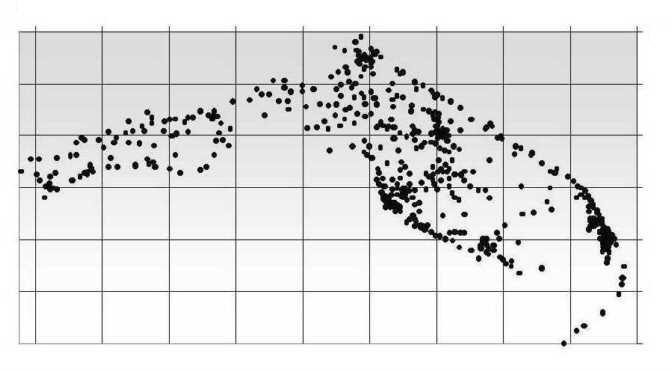

However, the data rate of an actual transfer varies from the advertised bit rate even under low noise conditions because of other sources of variability, such as the manufacturer and model. Figure 4-7 shows test results for thirty-one modems Data Communications magazine lab tested over a six thousand-foot local loop under controlled conditions ["V.34 Modems: You Get What You Pay For." Data Communications magazine, 1995]. Note that the different brands of V.34-Plus (ITU standard) modems did not perform identically under the three tests, although line conditions were the same throughout. V.34 modems are designed to have upload bit rates of up to 28.8 kbps and download bit rates of up to 33.6 kbps.

The top line shows the throughput for a (one-way) text download. Due to compression, throughput exceeds the advertised bit rate, the popularly defined "bandwidth" of the telephone line. The middle line of Figure 4-7 shows the two-way average throughput based on binary compressed files. Again, in every case but one, the observed throughput exceeds 28.8 kbps, but that is comparing an end-to-end throughput to the maximum operational data rate (advertised maximum bit rate). Finally, the bottom line shows the average two-way binary file transfer throughput. Notably, while every brand was advertised as a 28.8 kbps modem (able to transmit and receive), the top two had maximum average binary throughputs of 27.4 kbps. When the tests were repeated over different lines (30,000 foot rural local loops), results were as much as sixty percent below Figure 4-7 (when a transfer could occur at all) [Data Communications, 1995].

Figure 4-8 shows that the results for the most recent 56k modem standard V.90 indicate somewhat less variability among readings than the 1995 tests of Figure 4-7.

The one-way (download) text throughput rate (shown on the right y-axis) approached 120kbps on two of the modems shown. While no modem had a data rate of 56 kbps for downloads, the striped columns show that all were above 40kbps. On the upload side, most experienced just under or just over 30kbps data rates as shown by the solid columns.

When modems communicate over networks, the observed data rate and throughput also depend on network QOS variables. Figures 4-9 and 4-10 show how variability in telephone line noise, network congestion, and other factors prevent the advertised "bandwidth" of a modem from being observed in data rates in practice.

Instead of showing laboratory test averages, Figures 4-9 and 4-10 compare incoming data rates (experienced by the author) from the same web site (un-cached in memory) using the same modem and connection. Readings were taken minutes apart, using AnalogX NetStat Live version two software. In Figure 4-9, the average data rate was 13.5 kbps. For Figure 4-10, the average data rate was 17.9 kbps. However, in addition to the fact that in one case the same size file loaded many seconds faster, there were different accelerations in data rates as each transfer proceeded.

The results cannot be explained by any one factor. The differences may be due to an interaction among line noise, other simultaneous requests on the remote web site, the different routes taken by the individual packets of each transfer, ISP network load, and overall Internet congestion.

The computer modem examples have demonstrated several points. First, the theoretical bandwidth of a connection (in this case a telephone line), while often equated with the advertised bit rate (operational speed), differs from the throughput experienced by users and also from the measured data rate of the connection. Furthermore, differences due to manufacturer, line quality, and network conditions affect QOS metrics.

Until now, the focus has been on QOS metrics that are popularly mistaken for bandwidth. However, QOS metrics must be able to handle the full set of simple point-to-point and inter-networked connections of an agribusiness hypercommunications network (that may handle hundreds of simultaneous requests for voice, video, and data). Before more general QOS metrics (including many that are completely unrelated to bandwidth) can be explained, a reference model that is more general than the simple modem example is needed.

4.2.3 QOS Reference Model

At this stage, it is helpful to recall the three network engineering problems and the four objectives of network management from Chapter 3. Any network has combinatorial, probabilistic, and variational problems of engineering. These are sometimes loosely called possibilities, probabilities, and the positive and negative synergies between the two. The way an agribusiness and its hypercommunication vendors define and approach these three engineering problems determines how effectively communications occurs and how efficiently hypercommunications dollars are spent. Additionally, the way the four network objectives are achieved by the carrier influences the efficacy of the agribusiness's connection and the cost efficiencies of the carrier. While the modem example showed that not everything is under either party's control, a QOS reference model will help isolate who controls what.

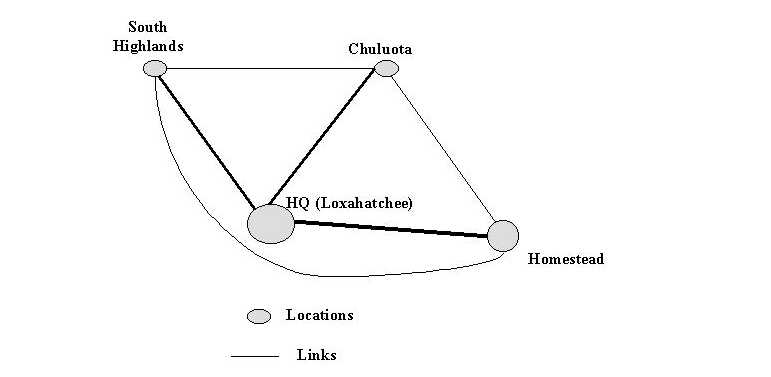

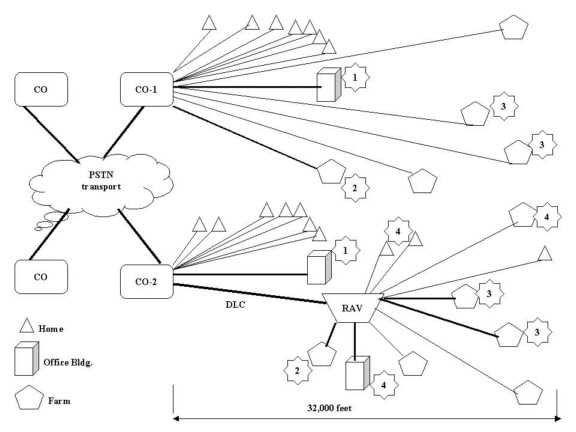

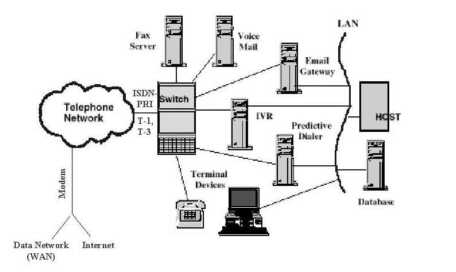

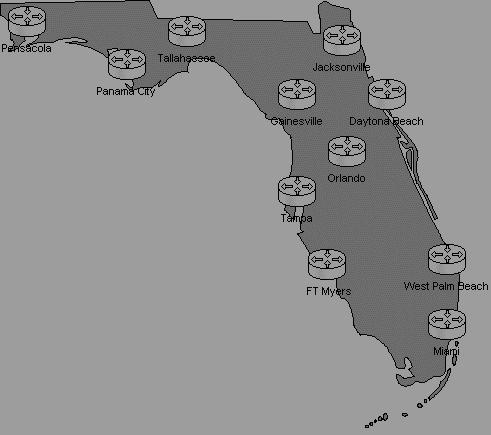

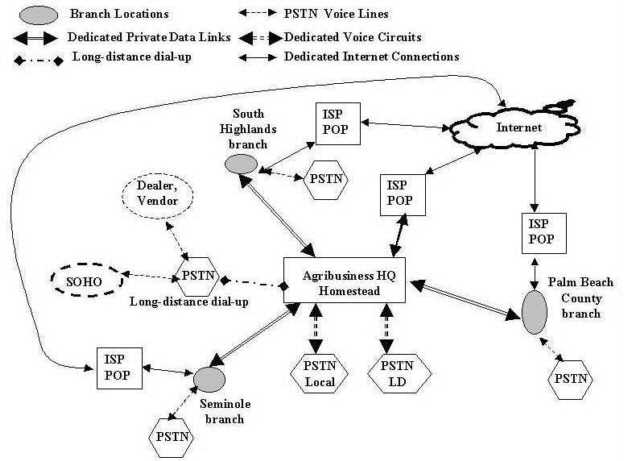

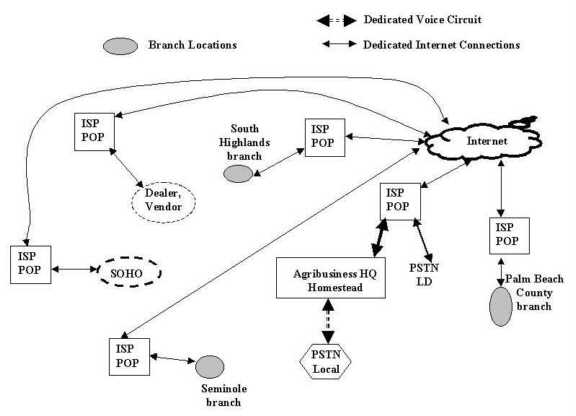

Instead of two modems, consider a more general agribusiness network such as that in Figure 4-11. Assume that the network is a true hypercommunications network that can carry voice, video, data, fax, e-mail, and Internet traffic. Communications occurs not only among and within offices, but also with customers, employees, and suppliers both nationally and internationally. Notice that the largest node (location) in the fictional agribusiness network depicted is at the headquarters in Loxahatchee, Palm Beach County. Another office is in Homestead, Miami-Dade County. Two smaller locations are in Chuluota, Seminole County and in Southern Highlands County. Suppose that six point-to-point links connect the hypercommunications traffic among offices. Each link could use one or more services and one or more technologies to carry traffic.
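The count of six links follows if every pair of the four locations is joined directly. The sketch below enumerates those pairs, assuming a full mesh, which is one reading of Figure 4-11 and not something the text states outright. The function name is hypothetical.

```python
from itertools import combinations

# The four locations of the fictional agribusiness network.
LOCATIONS = ["Loxahatchee (HQ)", "Homestead", "Chuluota", "South Highlands"]


def full_mesh_links(nodes):
    """Return every unordered pair of nodes, one point-to-point link per pair."""
    return list(combinations(nodes, 2))


if __name__ == "__main__":
    links = full_mesh_links(LOCATIONS)
    print(len(links))  # number of point-to-point links in a full mesh
    for a, b in links:
        print(f"{a} <-> {b}")
```

Three of the pairs involve the headquarters, and the other three join the remaining offices to one another.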

The HQ-Homestead, HQ-Chuluota, and HQ-South Highlands links are bold to indicate that a greater amount of traffic flows on these three main links than through the three others. To link any two locations in Figure 4-11, an end-to-end communications pipeline must be able to handle downstream, upstream, and two-way traffic. Users may communicate through computers, telephones, fax machines, or other DTE.

This is further illustrated by summarizing the elements of a single connection between two points as in Figure 4-12. The figure shows various CPE (Customer Premises Equipment) the agribusiness owns in Loxahatchee and in Homestead. On the Loxahatchee end, workstations, storage, and mainframe devices are shown on the uppermost part of the diagram. On the Homestead end, PCs are shown on the upper end of the local network. This configuration between the two locations suggests that traffic will mainly be downstream (from HQ) data traffic as data base information at HQ is accessed by Homestead users. However, data traffic between the two points could just as easily consist of an upstream flow at another part of the day when, for example, sales numbers or other reports are sent to HQ. Two-way traffic could occur between telephones at each location (as shown in the middle part of the local network on either end) or through a mix of computer and telephone traffic (shown in the lower part of the local network on either end). Figure 4-12 shows how varieties of devices (connected in local networks at both locations) allow a mix of traffic to flow between the locations.

However, Figure 4-12 is both too general and too specific to serve as a general model for use throughout the Chapter. It is too specific because (in addition to the direction of transmission) it mentions numerous user devices such as telephones, faxes, data storage, etc. Figure 4-12 is too general because it appears as though the communications pipeline between Loxahatchee and Homestead can only be a point-to-point link, rather than a more broadly defined network connection.

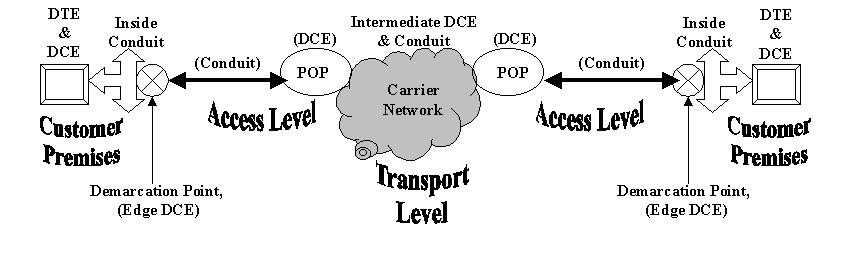

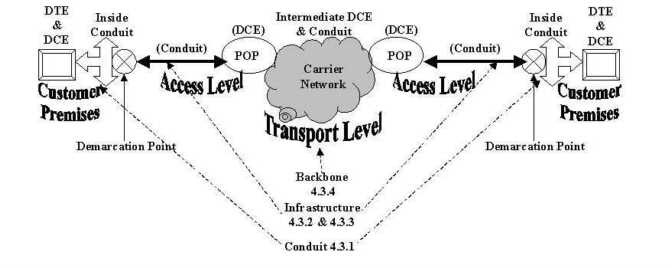

Figure 4-13 reveals six essential parts of a hypercommunications link between users at HQ and users at any other office without differentiating among services and technologies, or specifying network switching. The six parts are: carrier network (1), carrier POP (2), POP-edge access link (3), edge device (4), inside network (5), and user device or DTE (6).

It is easiest to work from the inside out of Figure 4-13 to describe the six essential elements. Assume that two-way, upstream, and downstream traffic may be carried between the two points, though each type may be handled differently depending on the service or technology. By formulating the model this way, it is clear that bandwidth is not the only QOS characteristic an agribusiness will be interested in, because bandwidth describes only the separate capacities of parts (1), (3), and (5). Each essential element also represents a possible threat to the security and reliability of communications.

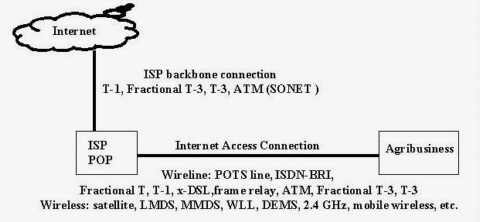

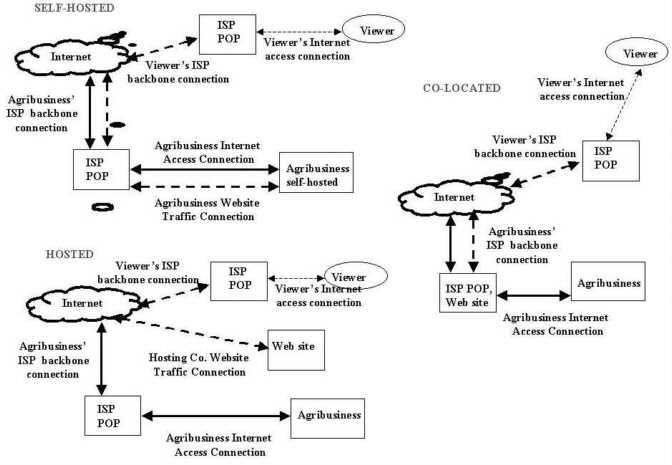

The first essential element of a hypercommunications link is the carrier network (1). The carrier network, often called a backbone, is depicted as a cloud because there are many different paths and switching technologies that may be used to transport traffic through the backbone. Usually, the backbone is national or international in scope, carrying traffic for thousands of carrier customers. Bandwidth of backbone networks can range from T-3 (45 Mbps) and upwards over fiber optic cable, satellite, or microwave pathways. While the bandwidth of the carrier's backbone is likely to be more than adequate for the needs of individual customers, congestion can occur if the backbone is oversold or mismanaged by the carrier. Importantly, this central network is also the path over which communications with the outside world occur, though the reference model now is focused on Homestead to Loxahatchee. Carrier networks are covered in more detail in several parts of Chapter 4 including 4.3.4 (data and voice transport), ATM (4.8.3), SONET (4.8.4), and Internet transport (4.9.1). In many cases, carrier networks are already converged networks that handle both voice and data together, though often through parallel structures.

The second essential part of the reference model is the carrier's local POP (Point of Presence). POPs are locations where connections serving a particular area terminate so that traffic can be placed onto the backbone. For example, the POP that serves Homestead might be located in Miami and the POP for Loxahatchee might be located in West Palm Beach. The wireline (or wireless) distance from an agribusiness location to a POP can determine whether a particular service can be offered. While each POP is a potential bottleneck, the DCE at a POP typically can handle far more bandwidth than a single customer needs. However, if a POP is oversold or mismanaged by the carrier, an agribusiness may not be able to communicate. Carrier POPs are discussed in the context of 4.3 (wireline transmission) and 4.4 (wireless transmission). They appear again as the discussion turns to specific services such as CO technologies that support enhanced telecommunications (4.7.2) and Internet access (4.9.1).

The third essential element is the POP to edge device pipeline (3) that transmits messages between each local POP and its corresponding agribusiness location. This element is often called the local loop or "the last mile", even though it may be far longer than a mile. Depending on the technology or service, the last mile may be wireline or wireless and be called a link, local loop, circuit, or path. With broadband signaling, the capacity of the connection from the business' edge device to the POP is shared with other customers (like a telephone party line), while with baseband or carrierband signaling it is dedicated to a single user. Depending on which is the case, there may be a potential for congestion. Access conduit is discussed in 4.3.1 and under infrastructure in Chapter 5. Access loops (the physical connection used to support various services) are given further coverage in discussions of the telephone infrastructure (4.3.2), of dedicated circuits (4.7.3), and of circuit-switched digital services (4.7.4).

Fourth, edge devices at each location (4) enable that location to connect to the pipeline. Inside a business' wiring closet, also called the MDF (Main Distribution Frame), an interface is needed to link the carrier's transmission network to the network inside the business. From this point outward, all equipment is CPE (Customer Premises Equipment) because it is physically located at the agribusiness's site and owned or leased by the agribusiness. Edge devices can be interfaces or ports as simple as a telephone jack, or as complex as a T-1 NIU (Network Interface Unit) or CSU (Channel Service Unit). Edge devices must be compatible with the outside carrier equipment and the inside CPE equipment. Edge devices are most important as specific enhanced telecommunications CPE (4.7.1) and circuits (4.7.3 and 4.7.4) are covered. Specialized edge devices are used for private data networking and Internet services as well.

Moving out from the edge device in Figure 4-13, the fifth essential element in the reference model is the inside (local) network at each location. The local network controls how communications travel from the edge device to the user's device as well as how communications between people at that location travel. The local network includes conduit (wiring and cabling), network hardware, and network software. Regardless of the carrying capacity of the external parts of the hypercommunications link, the management, traffic level, and capacity of the local network can prevent an agribusiness from using the bandwidth it pays for or achieving desired data rates. Local network hardware and software must be compatible with the edge devices and with the carrier for reliable service to be expected. A general local hypercommunications network must be distinguished from a local area computer network (LAN) described in 3.5.3 and 3.5.4. However, voice-data consolidation technologies (4.5.4) and call center technologies (4.7.2) can be used along with Internet technologies to create converged networks. Local conduit is discussed in 4.3.1 for the wireline case and in 4.4 and 4.8.5 for wireless cases.

The sixth and last essential element of the QOS reference model is DTE. DTE include telephones, computers, fax machines, and other hardware directly used by people at either end to communicate. DTE must be compatible with local network hardware and software. Each device has its own individual capacity to send and receive communications. User devices on each end must either be compatible or have intermediate CPE to allow interconnection. In some cases, a single malfunctioning user device can slow or completely obstruct communications across the link.
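
The point that a local network or access link can keep an agribusiness from using the bandwidth it pays for can be illustrated with a bottleneck calculation: the end-to-end ceiling is set by the lowest-capacity element in the path. The sketch below is a simplification with hypothetical capacities (only the 45 Mbps T-3 figure appears in the text; the T-1 rate of 1.544 Mbps is standard, and the 10 Mbps local network is assumed). The function name `bottleneck` is illustrative.

```python
# Hypothetical capacities in Mbps for one direction of one link.
# These figures are illustrative assumptions, not measurements from the text.
capacities_mbps = {
    "carrier network (1)": 45.0,        # e.g. a T-3 backbone path
    "POP-edge access link (3)": 1.544,  # e.g. a T-1 access circuit
    "inside network (5)": 10.0,         # e.g. an assumed 10 Mbps local network
}

def bottleneck(capacities):
    """Return (element, capacity) for the lowest-capacity element in the path."""
    element = min(capacities, key=capacities.get)
    return element, capacities[element]

element, ceiling = bottleneck(capacities_mbps)
print(f"End-to-end ceiling: {ceiling} Mbps, set by {element}")
```

This ignores congestion, overhead, and device limits at elements (2), (4), and (6), all of which can push achieved data rates below the ceiling.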

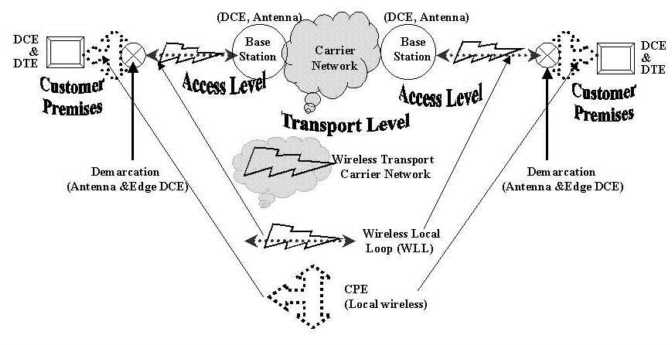

Figure 4-14 ties these six parts together into a general QOS reference model to be used for the rest of Chapter 4. Note the three levels of the reference model: customer premises, access, and transport. The customer premises level is entirely under the control of the agribusiness at each location.

The access level is the connection between the agribusiness and the carrier network from the edge device at the agribusiness to the network gateway at the carrier's POP. The transport level (as shown here) is a single carrier's network, but it may be several interconnecting networks owned and managed by separate carriers depending on the distance from receiver to sender.
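
One way to keep track of who controls what is to record each of the six elements against one of the three levels. The sketch below does this as a simple lookup table. The assignment of individual elements to levels is an interpretation of the description above rather than something the text states element by element (in particular, placing the carrier POP at the access level is an assumption), and the names `ELEMENT_LEVEL`, `elements_at`, and `agribusiness_controls` are hypothetical.

```python
# Levels named in the text for the QOS reference model.
LEVELS = ("customer premises", "access", "transport")

# Assumed assignment of the six elements to levels (an interpretation,
# not a mapping given explicitly in the text).
ELEMENT_LEVEL = {
    "user device or DTE (6)": "customer premises",
    "inside network (5)": "customer premises",
    "edge device (4)": "customer premises",
    "POP-edge access link (3)": "access",
    "carrier POP (2)": "access",
    "carrier network (1)": "transport",
}

def elements_at(level):
    """List the elements assigned to one level of the reference model."""
    if level not in LEVELS:
        raise ValueError(f"unknown level: {level}")
    return [name for name, lvl in ELEMENT_LEVEL.items() if lvl == level]

def agribusiness_controls(element):
    """True only for customer premises elements, which the text places
    entirely under the control of the agribusiness."""
    return ELEMENT_LEVEL[element] == "customer premises"

for level in LEVELS:
    print(f"{level}: {', '.join(elements_at(level))}")
```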

4.2.4 Fifteen Dimensions of QOS

Now that the reference model is established, the chief QOS dimensions of interest to agribusinesses can be considered. QOS is one of many acronyms in hypercommunications, but it is perhaps the most important, being one of the twelve essential hypercommunication terms mentioned in Table 1-1.

At first glance, QOS seems to be a simple concept, having to do with measurable degrees of quality and customer satisfaction. However, hypercommunication service quality is affected by many issues, such as system reliability and redundancy, customer service and billing, the usefulness and availability of technical support, as well as a number of engineering parameters, software-hardware bugs, and idiosyncratic events. QOS concerns apply to all three levels of the reference model (customer premises, access, and transport), but most SLAs cover the transport or transport and access levels only. This is because the carrier cannot be expected to control situations that are on customer premises.

Often, each area has many specific parameters that may or may not be measurable. Indeed, the method of measurement, software package, level of measurement, periodicity, and who will do the measuring are themselves important issues. However, there is not enough space here to delve into the methodology of measurement by dimension.

Table 4-3 introduces fifteen QOS dimensions of greatest importance to agribusinesses. The first six QOS dimensions (bandwidth, bit rate, data rate, throughput, delay, and jitter) have already been introduced in 4.2.1 and were covered briefly in the modem example in 4.2.2.

Sources: Maguire, 1997; Sheldon, 1998; FitzGerald and Dennis, 1999.

The seventh QOS issue, connection establishment delay, refers to the length of time it takes to establish a connection. Like many of the other dimensions, it depends heavily on the user's perception, the specific service, technology, and DCE and DTE. The simplest example is the length of time it takes for a modem to dial and establish a satisfactory connection to the Internet. It also is applicable to the length of time it takes a telephone call to complete. Some services (such as DSL, cable modem, and T-1 dedicated circuits) are "always-on" and hence, have no connection delay. The eighth QOS category, connection establishment failure probability, refers to the probability of getting a busy signal when a modem dials AOL, for example. In that case, there has been a failure to reach the Internet. For other services, the definition is similar.
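
Both of these dimensions can be estimated from a log of connection attempts. The sketch below is a minimal illustration using a hypothetical log of dial-up attempts; the data, the log layout, and the function names are assumptions made for the example, not a measurement method prescribed by the sources.

```python
from statistics import mean

# Hypothetical log of dial-up attempts: (connected?, seconds until connected).
# Failed attempts (for example, a busy signal) carry no setup time.
attempts = [
    (True, 28.0),
    (True, 31.5),
    (False, None),
    (True, 26.5),
    (False, None),
    (True, 30.0),
    (True, 29.0),
    (True, 35.0),
]

def failure_probability(log):
    """Share of attempts that never produced a connection."""
    failures = sum(1 for ok, _ in log if not ok)
    return failures / len(log)

def mean_setup_delay(log):
    """Average setup time in seconds over successful attempts only."""
    return mean(t for ok, t in log if ok)

print(f"Estimated failure probability: {failure_probability(attempts):.2f}")
print(f"Mean connection establishment delay: {mean_setup_delay(attempts):.1f} s")
```

For an always-on service, the log would contain no setup events at all, which is why these two dimensions do not apply in the same way.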

The ninth dimension of QOS, network transit delay, refers to the average delay in ms that it takes for a bit to traverse the carrier's network. Unlike end-to-end delay (which is observed), network transit delay is a statistical average that does not count delay resulting from access loop or customer premises conduit and equipment. The carrier may not have control over those other forms of delay, but will have control over transit delay. Hence, most SLAs or carrier statistics that describe delay refer to transit delay (transport level) only. Similarly, most jitter statistics refer to transit jitter, rather than jitter based on end-to-end delay. It can matter whether the transit delay and jitter measurements are averages between two points, weighted averages across an entire network, or measured in some other way.
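
The difference between these ways of averaging can be large. The sketch below compares a single point-to-point figure, a simple average across POP pairs, and a traffic-weighted average across the network. All delay and packet figures are hypothetical, the Orlando POP is invented for the example, and the function names are illustrative.

```python
# Hypothetical mean transit delays (ms) between carrier POP pairs,
# with the number of packets observed on each pair. Illustrative only.
samples = {
    ("Miami", "West Palm Beach"): {"mean_delay_ms": 10.0, "packets": 8000},
    ("Miami", "Orlando"): {"mean_delay_ms": 30.0, "packets": 1500},
    ("West Palm Beach", "Orlando"): {"mean_delay_ms": 50.0, "packets": 500},
}

def pair_average(data, pair):
    """Transit delay reported for a single pair of points."""
    return data[pair]["mean_delay_ms"]

def simple_network_average(data):
    """Unweighted mean of the per-pair averages."""
    return sum(d["mean_delay_ms"] for d in data.values()) / len(data)

def weighted_network_average(data):
    """Mean of the per-pair averages, weighted by packets carried."""
    total_packets = sum(d["packets"] for d in data.values())
    weighted_sum = sum(d["mean_delay_ms"] * d["packets"] for d in data.values())
    return weighted_sum / total_packets

print(pair_average(samples, ("Miami", "West Palm Beach")))
print(simple_network_average(samples))
print(weighted_network_average(samples))
```

With these assumed figures, the same network could be described by three different delay numbers, so an agribusiness comparing SLAs would want to know which one a carrier is quoting.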

The tenth dimension, error rate, may be a residual measure (on average equal to zero) for some services and an efficiency measure for others. The specific transmission technology and switching method determine the importance of the error rate. In packet switching, the error rate is a background rate that may slow transfers, but switching redundancies and error checking routines are able to recover or correct errors in most cases. Even if the error rate is high across a network such as the Internet, the communication may appear to have been error-free from the user's perspective. Other errors include lost, misdirected, or duplicate e-mails.

Security, the eleventh QOS dimension, is perhaps the most difficult to measure. Security refers to whether others are able (or may be able) to intercept or copy messages or to gain access to the network. A security failure can occur at the message, user, or system level. Security failures on any level can result from actions taken within a business, from inside the carrier's network, or from outside.